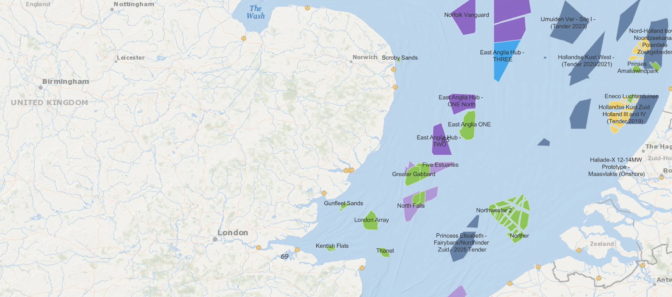

A hundred and forty turbines in the North Sea — and some GPUs in the cloud — pumped wind under the wings of David Standingford and Jamil Appa’s dream.

As colleagues at a British aerospace firm, they shared a vision of starting a company to apply their expertise in high-performance computing across many industries.

They formed Zenotech and coded what they learned about computational fluid dynamics into a program called zCFD. They also built a tool, EPIC, that simplified running it and other HPC jobs on the latest hardware in public clouds.

But getting visibility beyond their home in Bristol, U.K., was a challenge, given the lack of large, open datasets to show what their tools could do.

Harvesting a Wind Farm’s Data

Their problem dovetailed with one in the wind energy sector.

As government subsidies for wind farms declined, investors demanded a deeper analysis of a project’s likely return on investment, something traditional tools didn’t deliver. A U.K. government project teamed Zenotech with consulting firms in renewable energy and SSE, a large British utility willing to share data on its North Sea wind farm, one of the largest in the world.

Using zCFD, Zenotech simulated the likely energy output of the farm’s 140 turbines. The program accounted for dozens of wind speeds and directions. This included key but previously untracked phenomena in front of and behind a turbine, like the combined effects the turbines had on each other.

“These so-called ‘wake’ and ‘blockage’ effects around a wind farm can even impact atmospheric currents,” said Standingford, a director and co-founder of Zenotech.
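To give a feel for what a wake effect means in numbers, here is a minimal sketch using the classic Jensen (Park) wake model. This is a far simpler engineering approximation than the CFD approach the article describes, and it is not how zCFD works. The function names, the wake decay constant `k`, and the sample inputs are all illustrative assumptions.

```python
import math


def jensen_wake_speed(u_free, ct, rotor_diameter, distance, k=0.04):
    """Wind speed directly downstream of a single turbine.

    Uses the Jensen (Park) top-hat wake model, a textbook approximation.
    u_free: undisturbed wind speed (m/s)
    ct: turbine thrust coefficient, between 0 and 1
    rotor_diameter, distance: metres
    k: wake decay constant (assumed value; it depends on the site)
    """
    if distance <= 0:
        return u_free  # no wake upstream of, or exactly at, the rotor
    r0 = rotor_diameter / 2.0
    # Fractional speed deficit shrinks as the wake widens with distance.
    deficit = (1.0 - math.sqrt(1.0 - ct)) / (1.0 + k * distance / r0) ** 2
    return u_free * (1.0 - deficit)


def relative_power(u_wake, u_free):
    """Rough power ratio for a waked turbine.

    Assumes power scales with the cube of wind speed, which only holds
    below the turbine's rated wind speed.
    """
    return (u_wake / u_free) ** 3


if __name__ == "__main__":
    # Hypothetical inputs: 10 m/s wind, 150 m rotor, turbine 1 km downstream.
    u = jensen_wake_speed(u_free=10.0, ct=0.75, rotor_diameter=150.0, distance=1000.0)
    print(f"waked wind speed: {u:.2f} m/s")
    print(f"relative power:   {relative_power(u, 10.0):.2f}")
```

Because power depends on the cube of wind speed, even a modest speed deficit behind one turbine can noticeably cut the output of the next, which is why the combined effects across a 140-turbine farm matter to investors.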

The program can also track small but significant terrain effects on the wind, such as when trees in a nearby forest lose their leaves.

SSE validated that the final simulation came within 2 percent of the utility’s measured data, giving zCFD a stellar reference.

Accelerated in the Cloud

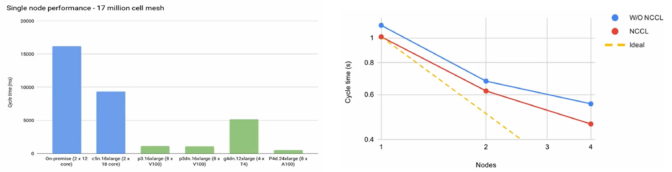

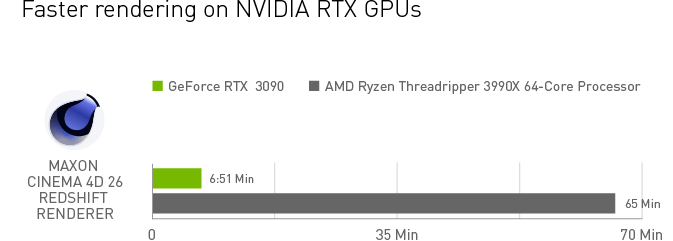

Icing the cake, Zenotech showed cloud GPUs delivered results fast and cost-effectively.

For example, the program ran 43x faster on NVIDIA A100 Tensor Core GPUs than on CPUs, at a quarter of the CPUs’ cost, the company reported in a recent GTC session (viewable on-demand). NVIDIA NCCL libraries, which speed communications between GPU systems, further boosted results by up to 15 percent.

As a result, work that took more than five hours on CPUs ran in less than 50 minutes on GPUs. The ability to analyze nuanced wind effects in detail and finish a report in a day “got people’s attention,” Standingford said.

“The tools and computing power to perform high-fidelity simulations of wind projects are now affordable and accessible to the wider industry,” he concluded in a report on the project.

Lowering Carbon, Easing Climate Change

Wind energy is among the largest, most cost-effective contributors to lowering carbon emissions, notes Farah Hariri, technical lead for the team helping NVIDIA’s customers manage their transitions to net-zero emissions.

“By modeling both wake interactions and the blockage effects, zCFD helps wind farms extract the maximum energy for the minimum installation costs,” she said.

This kind of fast yet detailed analysis lowers risk for investors, making wind farms more economically attractive than traditional energy sources, said Richard Whiting, a partner at Everose, one of the consultants who worked on the project with Zenotech.

Looking forward, Whiting estimates more than 2,100 gigawatts of wind farms could be in operation worldwide by 2030, up 3x from 2020. It’s a growing opportunity on many levels.

“In future, we expect projects will use larger arrays of larger turbines so the modeling challenge will only get bigger,” he added.

Less Climate Change, More Business

Helping renewable projects get off the ground also put wind in Zenotech’s sails.

Since the SSE analysis, the company has helped design wind farms or turbines in at least 10 other projects across Europe and Asia. And half of Zenotech’s business is now outside the U.K.

As the company expands, it’s also revisiting its roots in aerospace, lifting the prospects of new kinds of businesses for drones and air taxis.

A Parallel Opportunity

For example, Cardiff Airport is sponsoring live trials where emerging companies use zCFD on cloud GPUs to predict wind shifts in urban environments so they can map safe, efficient routes.

“It’s a forward-thinking way to use today’s managed airspace to plan future services like air taxis and automated airport inspections,” said Standingford.

“We’re seeing a lot of innovation in small aircraft platforms, and we’re working with top platform makers and key sites in the U.K.”

It’s one more way the company is keeping its finger to the wind.

The post Answers Blowin’ in the Wind: HPC Code Gives Renewable Energy a Lift appeared first on NVIDIA Blog.
