NVIDIA DRIVE Hyperion and DRIVE Orin are gaining ground in the industry.

At NVIDIA GTC, BYD, the world’s second-largest electric vehicle maker, announced it is building its next-generation fleets on the DRIVE Hyperion architecture. This platform, based on DRIVE Orin, is now in production and is powering a broad ecosystem of 25 EV makers building software-defined vehicles on high-performance, energy-efficient AI compute.

A wave of innovative startups also joined the DRIVE Hyperion ecosystem this week, including DeepRoute, Pegasus, UPower and WeRide, while luxury EV maker Lucid Motors announced its automated driving system is built on NVIDIA DRIVE.

Altogether, this growing ecosystem represents an automotive pipeline of more than $11 billion.

The open DRIVE Hyperion 8 platform allows these companies to customize this programmable architecture to their needs, using end-to-end solutions to accelerate autonomous driving development.

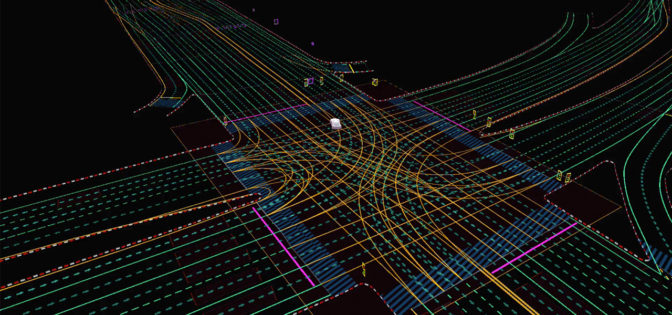

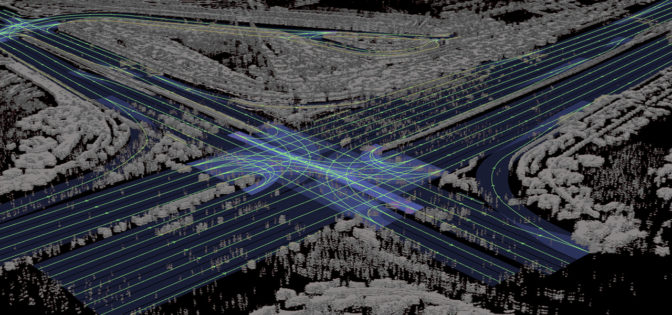

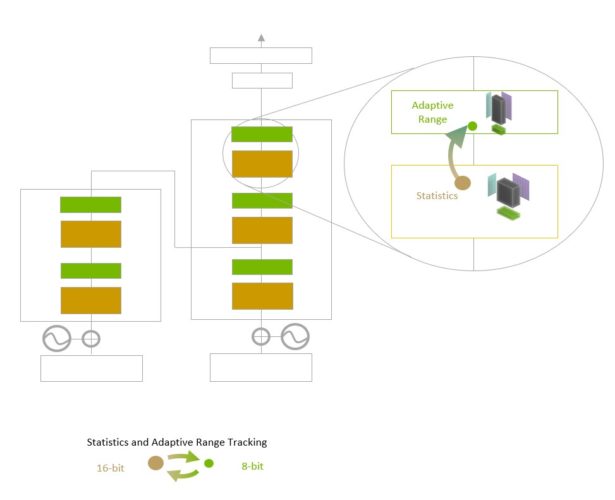

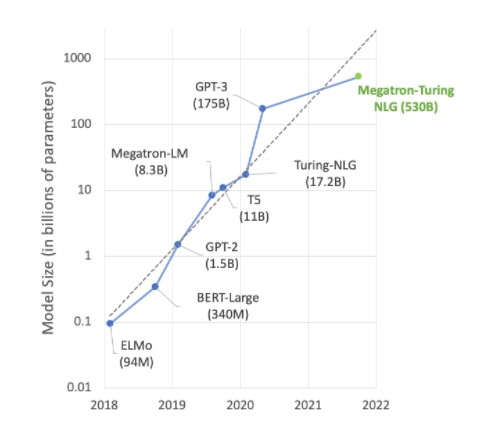

The NVIDIA DRIVE Orin system-on-a-chip delivers up to 254 trillion operations per second (TOPS). It is designed to handle the large number of applications and deep neural networks (DNNs) that run simultaneously in autonomous vehicles, while meeting systematic safety standards such as ISO 26262 ASIL-D.

Together, DRIVE Hyperion and DRIVE Orin act as the nervous system and brain of the vehicle, processing massive amounts of sensor data in real time to safely perceive, plan and act.

The World Leader in NEV

New energy vehicles (NEVs) are disrupting the transportation industry. They introduce a novel architecture that is purpose-built for software-defined functionality, enabling continuous improvement and new business models.

BYD is an NEV pioneer, leveraging its heritage as a rechargeable battery maker to introduce the world’s first plug-in hybrid, the F3, in 2008.

The F3 became China’s best-selling sedan the following year, and since then BYD has continued to push the limits of what’s possible for alternative powertrains, with more than 780,000 BYD electric vehicles in operation.

Now, BYD is adding software-defined vehicles to its résumé, building its next generation of EVs on DRIVE Hyperion 8.

These vehicles will feature a programmable compute platform based on DRIVE Orin for intelligent driving and parking.

Even More Intelligent Solutions

In addition to automakers, autonomous driving startups are developing on DRIVE Hyperion to deliver software-defined vehicles.

DeepRoute, a self-driving company building robotaxis, said it is integrating DRIVE Hyperion into its Level 4 system. The automotive-grade platform is key to the company’s plans to bring its production-ready vehicles to market next year.

Self-driving startup Pegasus Technology is also building intelligent driving solutions for taxis, trucks and buses on DRIVE Hyperion, designed to operate seamlessly on complex city roads. Its autonomous system is designed to handle lane changes, busy intersections, roundabouts, highway entrances and exits, and more.

UPower is a startup dedicated to streamlining the EV development process with its Super Board skateboard chassis. This next-generation foundation for electric vehicles will include DRIVE Hyperion for automated and autonomous driving capabilities.

Autonomous driving technology company WeRide has been building self-driving platforms for urban transportation on NVIDIA DRIVE since 2017. During GTC, the startup announced it will develop its next generation of intelligent driving solutions on DRIVE Hyperion.

As the DRIVE Hyperion ecosystem expands, software-defined transportation will become more prevalent around the world, delivering safer, more efficient driving experiences.

The post NVIDIA DRIVE Continues Industry Momentum With $11 Billion Pipeline as DRIVE Orin Enters Production appeared first on NVIDIA Blog.