CES has long been a showcase of what’s coming down the technology pipeline. This year, NVIDIA is showing the radical innovation happening now.

During a special virtual address at the show, Ali Kani, vice president and general manager of Automotive at NVIDIA, detailed the capabilities of DRIVE Hyperion and the many ways the industry is developing on the platform. These critical advancements show the maturity of autonomous driving technology as companies begin to deploy safer, more efficient transportation.

Developing autonomous vehicle technology requires an entirely new platform architecture and software development process. Both the hardware and software must be comprehensively tested and validated to ensure they can handle the harsh conditions of daily driving while meeting the stringent safety and security needs of automated vehicles.

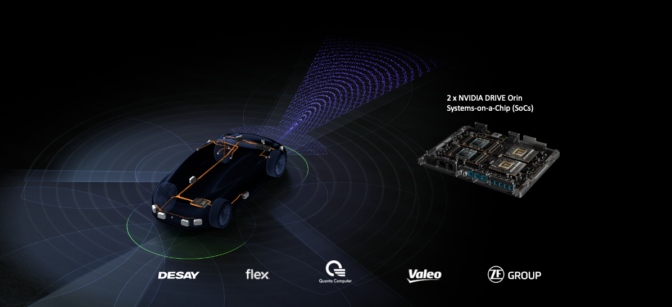

This is why NVIDIA has built and made open the DRIVE Hyperion platform, which includes a high-performance computer and sensor architecture that meets the safety requirements of a fully autonomous vehicle. DRIVE Hyperion is designed with redundant NVIDIA DRIVE Orin systems-on-a-chip for software-defined vehicles that continuously improve and create a wide variety of new software- and service-based business models for vehicle manufacturers.

The latest-generation platform includes 12 state-of-the-art surround cameras, 12 ultrasonic sensors, nine radars, three interior sensing cameras and one front-facing lidar. It’s architected to be functionally safe, so that if one computer or sensor fails, a backup is available to ensure that the AV can drive its passengers to a safe place.
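To make the redundancy idea concrete, here is a minimal Python sketch. The sensor counts come from the list above; everything else (the `ComputeUnit` class, the `select_mode` function, the mode names and the behavior when both computers fail) is a hypothetical illustration, not NVIDIA's actual software or API.

```python
from dataclasses import dataclass

# Sensor counts as listed in the article; the key names are illustrative.
HYPERION_SENSORS = {
    "surround_cameras": 12,
    "ultrasonics": 12,
    "radars": 9,
    "interior_cameras": 3,
    "front_lidar": 1,
}


@dataclass
class ComputeUnit:
    """Hypothetical stand-in for one of the redundant computers."""
    name: str
    healthy: bool = True


def select_mode(primary: ComputeUnit, backup: ComputeUnit) -> str:
    """Pick a driving mode from the health of two redundant computers.

    Assumption: the mode names and the both-failed case are invented
    for this sketch; the article only describes the single-failure case.
    """
    if primary.healthy and backup.healthy:
        return "nominal"
    if primary.healthy or backup.healthy:
        # One unit failed: the surviving unit takes the car to a safe place.
        return "drive_to_safe_place"
    return "emergency_stop"


if __name__ == "__main__":
    print("Total sensors:", sum(HYPERION_SENSORS.values()))
    print(select_mode(ComputeUnit("primary", healthy=False), ComputeUnit("backup")))
```

The point of the sketch is only that a single failure degrades the system gracefully rather than ending the trip abruptly; a production system would track sensor health and many more fault states.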

The DRIVE Hyperion architecture has been adopted by hundreds of automakers, truck makers, tier 1 suppliers and robotaxi companies, ushering in the new era of autonomy.

Innovation at Scale

Bringing this comprehensive platform architecture to the global automotive ecosystem requires collaboration with leading tier 1 suppliers.

Desay, Flex, Quanta, Valeo and ZF are now DRIVE Hyperion 8 platform scaling partners, manufacturing production-ready designs with the highest levels of functional safety and security.

“We are excited to work with NVIDIA on their DRIVE Hyperion platform,” said Geoffrey Buoquot, CTO and vice president of Strategy at Valeo. “On top of our latest generation ultrasonic sensors providing digital raw data that their AI classifiers can process and our 12 cameras, including the new 8-megapixel cameras, we are now also able to deliver an Orin-based platform to support autonomous driving applications with consistent performance under automotive environmental conditions and production requirements.”

“Flex is thrilled to collaborate with NVIDIA to help accelerate the deployment of autonomous and ADAS systems leveraging the DRIVE Orin platform to design solutions for use across multiple customers,” said Mike Thoeny, president of Automotive at Flex.

The breadth and depth of this supplier ecosystem demonstrate how DRIVE Hyperion has become the industry’s most open and adopted platform architecture. These scaling partners will help make it possible for the platform to start production as early as this year.

New Energy for a New Era

The radical transformation of the transportation industry extends from compute power to powertrains.

Electric vehicles aren’t just better for the environment; they also fundamentally improve the driving experience for consumers. With a quieter and more sustainable profile, EVs will begin to make up the majority of cars sold over the next several decades.

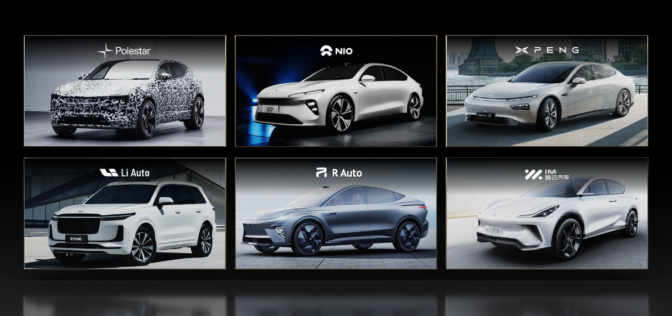

This evolution has given rise to dozens of new energy vehicle (NEV) startups. They have reimagined the car, starting with a new vehicle architecture based on programmable, software-defined compute. These NEVs will continuously improve over time with over-the-air updates.

Many leading NEV makers are adopting DRIVE Hyperion as the platform to develop these clean, intelligent models. From the storied performance heritage of Polestar to the breakthrough success of IM Motors, Li Auto, NIO, R Auto and Xpeng, these companies are reinventing the personal transportation experience.

These companies are benefiting from new, software-driven business models. With a centralized compute architecture, automakers can deliver fresh services and advanced functions throughout the life of the vehicle.

Truly Intelligent Logistics

AI isn’t just transforming personal transportation; it’s also addressing the rapidly growing challenges faced by the trucking and logistics industry.

Over the past decade, and accelerated by the pandemic, consumer shopping has dramatically shifted online, resulting in increased demand for trucking and last-mile delivery. This trend is expected to continue, as experts predict that 170 billion packages will be shipped worldwide this year, climbing to 280 billion in 2027.
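For a sense of scale, the two package forecasts imply a compound annual growth rate of a little over 10 percent. The short Python calculation below assumes "this year" means 2022, giving a five-year span to 2027; the function name is illustrative.

```python
def compound_annual_growth_rate(start: float, end: float, years: int) -> float:
    """Return the constant yearly growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


# Forecast figures from the article; the 5-year span assumes a 2022 baseline.
rate = compound_annual_growth_rate(170e9, 280e9, years=5)
print(f"Implied annual growth in packages shipped: {rate:.1%}")
```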

At the same time, the trucking industry has been experiencing significant driver shortages, with the U.S. expected to need more than 160,000 additional drivers by 2028.

TuSimple is facing these challenges head-on with its scalable Autonomous Freight Network. This network uses autonomous trucks to safely and efficiently move goods across the country. And today, the company announced these intelligent trucks will be powered by NVIDIA DRIVE Orin.

The advanced SoC will be at the center of TuSimple’s autonomous domain controller (ADC), which serves as an autonomous truck’s central compute unit that processes hundreds of trillions of operations per second, including mission-critical perception, planning and actuation functions.

As a result, TuSimple can accelerate the development of a high-performance, automotive-grade and scalable ADC that can be integrated into production trucks.

Everyone’s Digital Assistant

Kani also demonstrated the NVIDIA DRIVE Concierge platform, which delivers intelligent services that are always on.

DRIVE Concierge combines NVIDIA Omniverse Avatar, DRIVE IX, DRIVE AV 4D perception, Riva GPU-accelerated speech AI SDK and an array of deep neural networks to delight customers on every drive.

Omniverse Avatar connects speech AI, computer vision, natural language understanding, recommendation engines and simulation. Avatars created on the platform are interactive characters with ray-traced 3D graphics that can see, speak, converse on a wide range of subjects, and understand naturally spoken intent.

During the CES special address, a demo showed highlights of a vehicle equipped with DRIVE Concierge making the trip to Las Vegas, helping the driver prepare for the trip, plan schedules and, most importantly, relax while the car was safely piloted by DRIVE Chauffeur.

These important developments in intelligent driving technology, as well as innovations from suppliers, automakers and trucking companies all building on NVIDIA DRIVE, are heralding the arrival of the autonomous era.

The post Autonomous Era Arrives at CES 2022 With NVIDIA DRIVE Hyperion and Omniverse Avatar appeared first on The Official NVIDIA Blog.
