Great things come in twos. Techland’s Dying Light 2 Stay Human arrives with RTX ON and is streaming from the cloud tomorrow, Feb. 4.

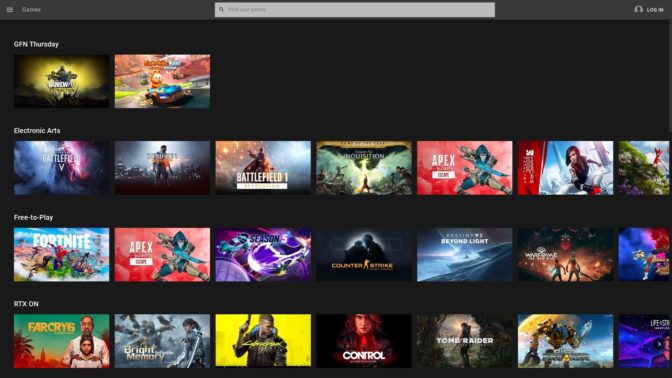

Plus, in celebration of the second anniversary of GeForce NOW, February is packed full of membership rewards in Eternal Return, World of Warships and more. There are also 30 games joining the GeForce NOW library this month, with four streaming this week.

Take a Bite Out of Dying Light 2

It’s the end of the world. Get ready for Techland’s Dying Light 2 Stay Human, releasing on Feb. 4 on GeForce NOW with RTX ON.

Civilization has fallen to the virus, and The City, one of the last large human sanctuaries, is torn by conflict in a world ravaged by the infected dead. You are a wanderer with the power to determine the fate of The City in your search to learn the truth.

Struggle to survive by using clever thinking, daring parkour, resourceful traps and creative weapons to make helpful allies and overcome enemies with brutal combat. Discover the secrets of a world thrown into darkness and make decisions that determine your destiny. There’s one thing you can never forget — stay human.

We’ve teamed up with Techland to bring real-time ray tracing to Dying Light 2 Stay Human, delivering the highest level of realistic lighting and depth into every scene of the new game.

Dying Light 2 Stay Human features a dynamic day-night cycle that is enhanced with the power of ray tracing, including fully ray-traced lighting throughout. Ray-traced global illumination, reflections and shadows bring the world to life with significantly better diffused lighting and improved shadows, as well as more accurate and crisp reflections.

“We’ve been working with NVIDIA to expand the world of Dying Light 2 with ray-tracing technology so that players can experience our newest game with unprecedented image quality and more immersion than ever,” said Tomasz Szałkowski, rendering director at Techland. “Now, gamers can play Dying Light 2 Stay Human streaming on GeForce NOW to enjoy our game in the best way possible and exactly as intended with the RTX 3080 membership, even when playing on underpowered devices.”

Enter the dark ages and stream Dying Light 2 Stay Human from both Steam and Epic Games Store on GeForce NOW at launch tomorrow.

An Anniversary Full of Rewards

Happy anniversary, members. We’re celebrating GeForce NOW turning two with three rewards for members, including in-game content for Eternal Return and World of Warships.

Check in on GFN Thursdays throughout February for updates on the upcoming rewards, and make sure you’re opted in by checking the box for Rewards in the GeForce NOW account portal.

Thanks to the cloud, more than 15 million members around the world streamed nearly 180 million hours of their favorite games this past year, including Apex Legends and Rust, from the ever-growing GeForce NOW library.

Members have played with the power of the new six-month RTX 3080 memberships, delivering cinematic graphics with RTX ON in supported games like Cyberpunk 2077 and Control. They’ve also experienced gaming with ultra-low latency and maximized eight-hour session lengths across their devices.

It’s All Fun and Games in February

The party doesn’t stop there. It’s also the first GFN Thursday of the month, which means a whole month packed full of games.

Gear up for the 30 new titles coming to the cloud in February, with four games ready to stream this week:

- Life is Strange Remastered (New release on Steam, Feb. 1)

- Life is Strange: Before the Storm Remastered (New release on Steam, Feb. 1)

- Dying Light 2 Stay Human (New release on Steam and Epic Games Store, Feb. 4)

- Warm Snow (Steam)

Also coming in February:

- Werewolf: The Apocalypse – Earthblood (New release on Steam, Feb. 7)

- Sifu (New release on Epic Games Store, Feb. 8)

- Diplomacy is Not an Option (New release on Steam, Feb. 9)

- SpellMaster: The Saga (New release on Steam, Feb. 16)

- Destiny 2: The Witch Queen Deluxe Edition (New release on Steam, Feb. 22)

- SCP: Pandemic (New release on Steam, Feb. 22)

- Martha is Dead (New release on Steam and Epic Games Store, Feb. 24)

- Ashes of the Singularity: Escalation (Steam)

- AWAY: The Survival Series (Epic Games Store)

- Citadel: Forged With Fire (Steam)

- Escape Simulator (Steam)

- Galactic Civilizations III (Steam)

- Haven (Steam)

- Labyrinthine Dreams (Steam)

- March of Empires (Steam)

- Modern Combat 5 (Steam)

- Parkasaurus (Steam)

- People Playground (Steam)

- Police Simulator: Patrol Officers (Steam)

- Sins of a Solar Empire: Rebellion (Steam)

- Train Valley 2 (Steam)

- TROUBLESHOOTER: Abandoned Children (Steam)

- Truberbrook (Steam and Epic Games Store)

- Two Worlds Epic Edition (Steam)

- Valley (Steam)

- The Vanishing of Ethan Carter (Epic Games Store)

We make every effort to launch games on GeForce NOW as close to their release as possible, but, in some instances, games may not be available immediately.

Extra Games From January

As the cherry on top of the games announced in January, an extra nine made it to the cloud. Don’t miss any of these titles that snuck their way onto GeForce NOW last month:

- Assassin’s Creed III Deluxe Edition (Ubisoft Connect)

- Blacksmith Legends (Steam)

- Daemon X Machina (Epic Games Store)

- Expeditions: Rome (Steam and Epic Games Store)

- Galactic Civilizations III (Epic Games Store)

- Hitman 3 (Steam)

- Supraland Six Inches Under (Steam)

- Tropico 6 (Epic Games Store)

- WARNO (Steam)

With another anniversary for the cloud and all of these updates, there’s never too much fun. Share your favorite GeForce NOW memories from the past year and talk to us on Twitter.

so we thought you should know that…

today is 2/2/22

2⃣morrow we kick off celebrations for our 2-year anniversary

on Friday, @DyingLight 2 launches in the cloud

and yes, we’ve been dying 2 tell you all of this

NVIDIA GeForce NOW (@NVIDIAGFN), February 2, 2022

The post 2 Powerful 2 Be Stopped: ‘Dying Light 2 Stay Human’ Arrives on GeForce NOW’s Second Anniversary appeared first on The Official NVIDIA Blog.