Support for the new GeForce RTX 3080 Ti and 3070 Ti Laptop GPUs is available today in the February Studio driver.

Updated monthly, NVIDIA Studio drivers support NVIDIA tools and optimize the most popular creative apps, delivering added performance, reliability and speed to creative workflows.

Creatives will also benefit from the February Studio driver with enhancements to their existing creative apps as well as the latest app releases, including a major update to Maxon’s Redshift renderer.

The NVIDIA Studio platform is being rapidly adopted by aspiring artists, freelancers and creative professionals who seek to take their projects to the next level. The next generation of Studio laptops further powers their ambitions.

Creativity Unleashed — 3080 Ti and 3070 Ti Studio Laptops

Downloading the February Studio driver will help unlock massive time savings in essential creative apps, especially for 3080 Ti and 3070 Ti GPU owners.

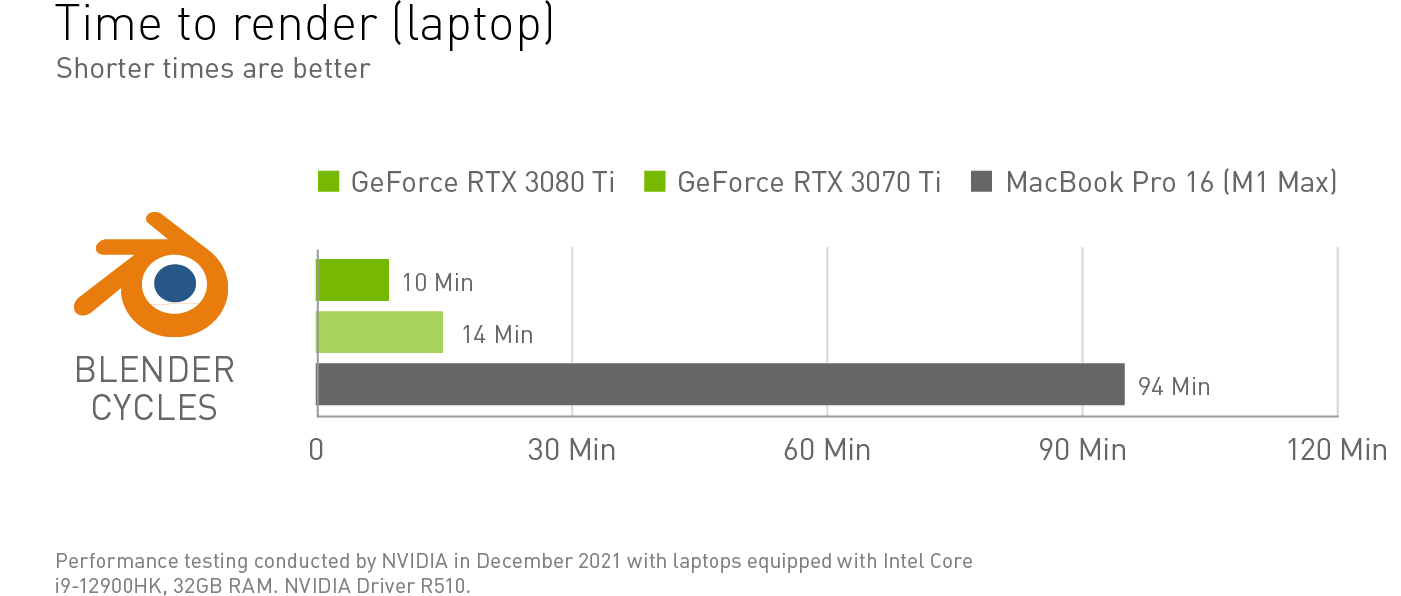

Blender renders are exceptionally fast on GeForce RTX 3080 Ti and 3070 Ti GPUs with RT Cores powering hardware-accelerated ray tracing.

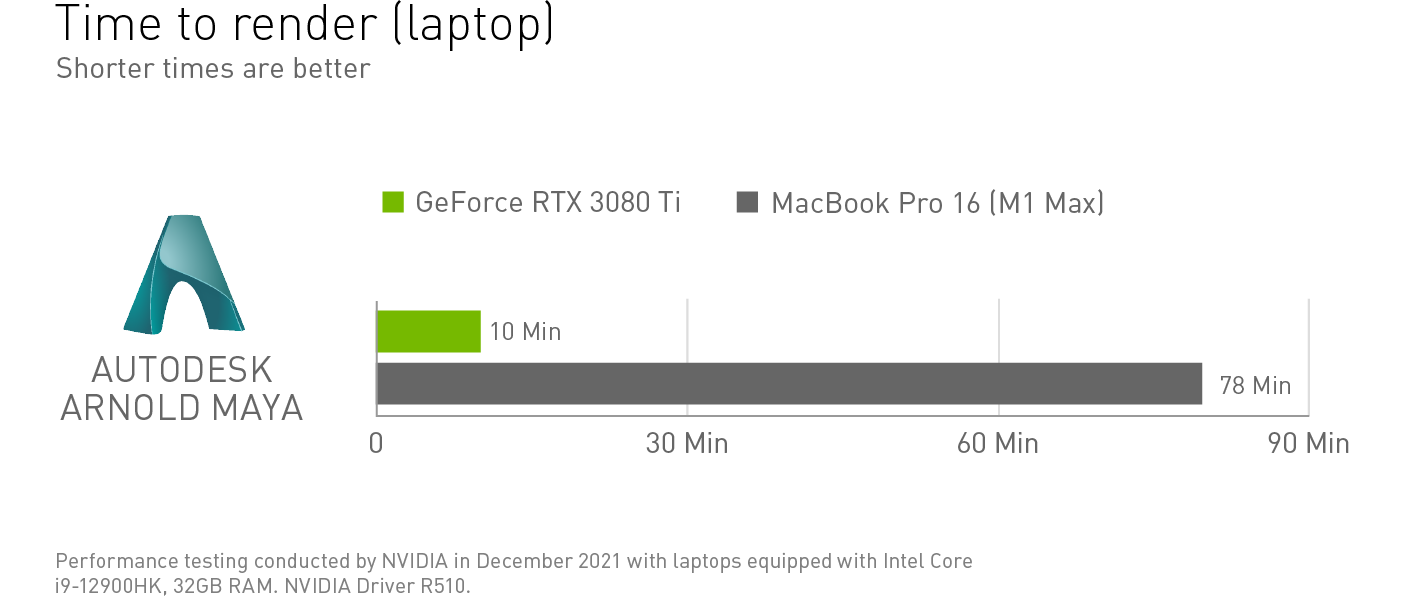

Autodesk aficionados with a GeForce RTX 3080 Ti GPU-equipped laptop can render the heaviest of scenes much faster, in this example saving over an hour.

Video production specialists in REDCINE-X PRO have the freedom to edit in real time with elevated FPS, resulting in more accurate playback and far less time spent in the editing bay.

Creators can move at the speed of light with the 2022 lineup of Studio laptops and desktops.

MSI has announced the Creator Z16 and Creator Z17 Studio laptops, set for launch in March, with up to GeForce RTX 3080 Ti Laptop GPUs.

ASUS’s award-winning ZenBook Pro Duo, coming later this year, sports a GeForce RTX 3060 GPU, plus a 15.6-inch 4K UHD OLED touchscreen and secondary 4K screen, unlocking numerous creative possibilities.

The Razer Blade 17 and 15, available now, come fully loaded with a GeForce RTX 3080 Ti GPU and 32GB of memory, and they’re configurable with a beautiful 4K 144Hz, 100-percent DCI-P3 display. The Razer Blade 14 will launch on Feb. 17.

GIGABYTE’s newly refreshed AERO 16 and 17 Studio laptops, equipped with GeForce RTX 3070 Ti and 3080 Ti GPUs, are also now available.

These creative machines power RTX-accelerated tools, including NVIDIA Omniverse, Canvas and Broadcast, making next-generation AI technology even more accessible while reducing and removing tedious work.

Fourth-generation Max-Q technologies — including CPU Optimizer and Rapid Core Scaling — maximize creative performance in remarkably thin laptop designs.

Stay tuned for more Studio product announcements in the coming months.

Shift to Redshift RT

Well-known to 3D artists, Maxon’s Redshift renderer is powerful, biased and GPU-accelerated, built to exceed the demands of contemporary high-end production rendering.

Redshift recently launched Redshift RT, a real-time rendering feature now in beta, which lets 3D artists skip unnecessary waits for renders to finalize.

Redshift RT runs exclusively on NVIDIA RTX GPUs, bolstered by RT Cores, powering hardware-accelerated, interactive ray tracing.

Redshift RT, which is part of the current release, enables a more natural, intuitive way of working. It offers increased freedom to try different options for creating spectacular content, and is best used for scene editing and rendering previews. Redshift Production remains the renderer that offers the highest possible quality and control.

Redshift RT technology is integrated in the Maxon suite of creative apps including Cinema 4D, and is available for Autodesk 3ds Max and Maya, Blender, Foundry Katana and SideFX Houdini, as well as architectural products Vectorworks, Archicad and Allplan, dramatically speeding up all types of visual workflows.

With so many options, now’s the time to take a leap into 3D. Check out our Studio YouTube channel for standouts, tutorials, tips and tricks from industry-leading artists on how to get started.

Get inspired by the latest NVIDIA Studio Standouts video featuring some of our favorite digital art from across the globe.

Follow NVIDIA Studio on Facebook, Twitter and Instagram for the latest information on creative app updates, new Studio apps, creator contests and more. Get updates directly to your inbox by subscribing to the Studio newsletter.

The post Support for New NVIDIA RTX 3080 Ti, 3070 Ti Studio Laptops Now Available in February Studio Driver appeared first on The Official NVIDIA Blog.

