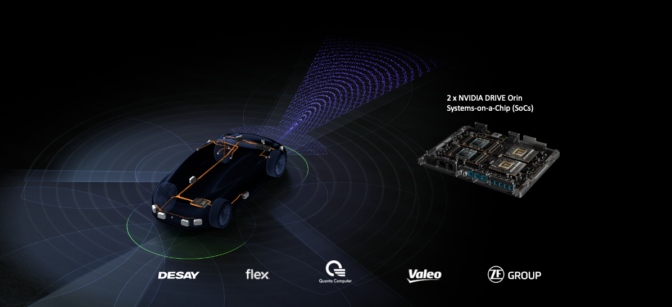

Autonomous vehicles are born in the data center, which is why NVIDIA and Deloitte are delivering a strong foundation for developers to deploy robust self-driving technology.

At CES this week, the companies detailed their collaboration, which is aimed at easing the biggest pain points in AV development. Deloitte, a leading global consulting firm, is pairing with NVIDIA to offer a range of services for data generation, collection, ingestion, curation, labeling and deep neural network (DNN) training with NVIDIA DGX SuperPOD.

Building AVs requires massive amounts of data. A fleet of 50 test vehicles driving six hours a day generates 1.6 petabytes daily — if all that data were stored on standard 1GB flash drives, they’d cover more than 100 football fields. Yet that isn’t enough.
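The fleet figures above imply a substantial per-vehicle data rate. The short Python sketch below works through that arithmetic. It assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes) and that the 1.6 petabytes are spread evenly across all 50 vehicles and six driving hours; the function name `fleet_data_rates` is hypothetical and used only for illustration.

```python
# Back-of-the-envelope breakdown of the fleet data figures quoted above.
# Assumptions: decimal SI units, and data evenly spread across vehicles and hours.

PB = 10**15  # bytes in a (decimal) petabyte
TB = 10**12  # bytes in a (decimal) terabyte
GB = 10**9   # bytes in a (decimal) gigabyte


def fleet_data_rates(total_bytes_per_day: float, vehicles: int, hours_per_day: float) -> dict:
    """Hypothetical helper: split a fleet's daily data volume into per-vehicle rates."""
    per_vehicle_day = total_bytes_per_day / vehicles
    per_vehicle_hour = per_vehicle_day / hours_per_day
    per_vehicle_second = per_vehicle_hour / 3600
    return {
        "per_vehicle_day_TB": per_vehicle_day / TB,
        "per_vehicle_hour_TB": per_vehicle_hour / TB,
        "per_vehicle_GB_per_s": per_vehicle_second / GB,
        # Number of 1GB flash drives needed to hold one day of fleet data.
        "one_gb_drives_per_day": total_bytes_per_day / GB,
    }


if __name__ == "__main__":
    rates = fleet_data_rates(1.6 * PB, vehicles=50, hours_per_day=6)
    for name, value in rates.items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions, each vehicle produces about 32 TB per day, roughly 5.33 TB per driving hour or about 1.48 GB every second, and a single day of fleet data would fill 1.6 million 1GB flash drives.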

On top of this collected data, AV training and validation also require data from rare and dangerous scenarios that a vehicle may encounter but that are difficult to capture during standard data collection. That’s where simulated data comes in.

NVIDIA DGX systems and advanced training tools enable streamlined, large-scale DNN training and optimization. Using the power of GPUs and AI, developers can seamlessly collect and curate data to comprehensively train DNNs for autonomous vehicle perception, planning, driving and more.

Developers can also train and test these DNNs in simulation with NVIDIA DRIVE Sim, a physically accurate, cloud-based simulation platform. It taps into NVIDIA’s core technologies — including NVIDIA RTX, Omniverse and AI — to deliver a wide range of real-world scenarios for AV development and validation.

DRIVE Sim can generate high-fidelity synthetic data with ground truth using NVIDIA Omniverse Replicator to train the vehicle’s perception systems or test the decision-making processes.

It can also be connected to the AV stack in software-in-the-loop or hardware-in-the-loop configurations to validate the complete integrated system.

“The robust AI infrastructure provided by NVIDIA DGX SuperPOD is paving the way for our clients to develop transformative autonomous driving solutions for safer and more efficient transportation,” said Ashok Divakaran, Connected and Autonomous Vehicle Lead at Deloitte.

A Growing Partnership

Deloitte is at the forefront of AI innovation, services and research, including AV development.

In March, it announced the launch of the Deloitte Center for AI Computing, a first-of-its-kind center designed to accelerate the development of innovative AI solutions for its clients.

The center is built on NVIDIA DGX A100 systems to bring together the supercomputing architecture and expertise that Deloitte clients require as they become AI-fueled organizations.

This collaboration now extends to AV development, using robust AI infrastructure to architect solutions for truly intelligent transportation.

NVIDIA DGX POD is the foundation, providing an AI compute infrastructure based on a scalable, tested reference architecture featuring up to eight DGX A100 systems, NVIDIA networking and high-performance storage.

To further scale AV development and speed time to results, customers can choose the NVIDIA DGX SuperPOD, which includes 20 or more DGX systems plus networking and storage.

By combining Deloitte’s long-standing work with the automotive industry and its investment in AI innovation with the unprecedented compute of NVIDIA DGX systems, the collaboration gives developers access to the best AV training solutions for truly revolutionary products.

Deloitte’s leadership in AI is paired with a broad and deep set of technical capabilities and services. Among its ranks are more than 5,500 systems integration developers, 2,000 data scientists and 4,500 cybersecurity practitioners. In 2020, Deloitte was named the global leader in worldwide system integration services by the International Data Corporation.

Deloitte also has deep experience with the automotive industry, serving three-quarters of the Fortune 1000 automotive companies.

Streamlined Solutions

With combined experience and cutting-edge technology, NVIDIA and Deloitte are offering robust data center solutions for AV developers, encompassing infrastructure, data management, machine learning operations and synthetic data generation.

These services begin with Infrastructure-as-a-Service, which provides management of the DGX SuperPOD infrastructure in an on-premises or colocation environment. Experts design and set up this AI infrastructure and provide ongoing infrastructure operations, streamlining AV development.

With Data-Management-as-a-Service, developers can use tools for data ingestion and curation, enabling scale and automation for DNN training.

NVIDIA and Deloitte can also improve data scientist productivity by up to 30 percent with MLOps-as-a-Service. This turnkey solution deploys and supports enterprise-grade MLOps software to train DNNs and reach target accuracy faster.

Finally, NVIDIA and Deloitte make it possible to curate specific scenarios for comprehensive DNN training with Synthetic Data Generation-as-a-Service. Developers can take advantage of simulation expertise to generate high-fidelity training data to cover the rare and hazardous situations AVs must be able to handle safely.

Equipped with these invaluable tools, AV developers now have the capability to ease some of the largest bottlenecks in DNN training to deliver safer, more efficient transportation.

The post Teamwork Makes AVs Work: NVIDIA and Deloitte Deliver Turnkey Solutions for AV Developers appeared first on The Official NVIDIA Blog.