You’ve reached your weekly gaming checkpoint. Welcome to a positively packed GFN Thursday.

This week delivers a sweet deal for gamers ready to upgrade their PC gaming from the cloud. With any new, paid six-month Priority or GeForce NOW RTX 3080 subscription, members will receive Crysis Remastered for free for a limited time.

Gamers and music lovers alike can get hyped for an awesome entertainment experience playing Core this week and visiting the digital world of Oberhasli. There, they’ll enjoy the anticipated deadmau5 “Encore” concert and event.

And what kind of GFN Thursday would it be without new games? We’ve got eight new titles joining the GeForce NOW library this week.

GeForce NOW Can Run Crysis … And So Can You

But can it run Crysis? GeForce NOW sure can.

For a limited time, get a copy of Crysis Remastered free with select GeForce NOW memberships. Purchase a six-month Priority membership, or the new GeForce NOW RTX 3080 membership, and get a free redeemable code for Crysis Remastered on the Epic Games Store.

Current monthly Founders and Priority members are eligible by upgrading to a six-month membership. Founders, exclusively, can upgrade to a GeForce NOW RTX 3080 membership and receive 10 percent off the subscription price, with no risk to their current Founders benefits. They can revert to their original Founders plan and retain “Founders for Life” pricing, as long as they remain in consistent good standing on any paid membership plan.

This special bonus also applies to existing GeForce NOW RTX 3080 members and preorders, as a thank you for being among the first to upgrade to the next generation in cloud gaming. Current members on the GeForce NOW RTX 3080 plan will receive game codes in the days ahead, while members who have preordered but haven’t been activated yet will receive their game code when their GeForce NOW RTX 3080 service is activated. Please note, terms and conditions apply.

Stream Crytek’s classic first-person shooter, remastered with graphics optimized for a new generation of hardware and complete with stunning support for RTX ON and DLSS. GeForce NOW members can experience the first game in the Crysis series — or 1,000+ more games — across nearly all of their devices, turning even a Mac or a mobile device into the ultimate gaming rig.

The mission starts here.

Experience the deadmau5 Encore in Core

This GFN Thursday brings Core and deadmau5 to the cloud. From shooters, survival and action adventure to MMORPGs, platformers and party games, Core is a multiverse of exciting gaming entertainment with over 40,000 free-to-play, Unreal-powered games and worlds.

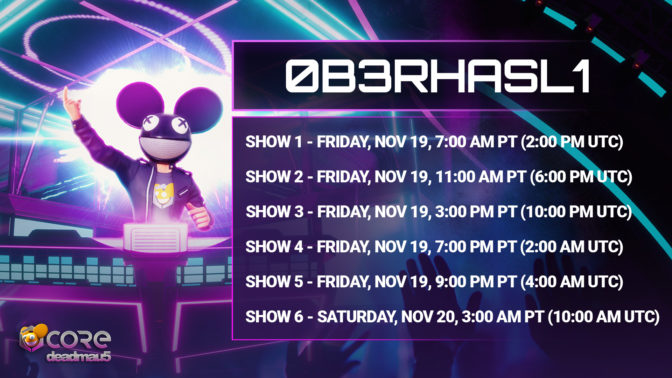

This week, members can visit the fully immersive digital world of Oberhasli — designed with the vision of the legendary producer, musician and DJ, deadmau5 — and enjoy an epic “Encore” concert and event. Catch the deadmau5 performance, with six showings running from Friday, Nov. 19, to Saturday, Nov. 20. The concert becomes available every hour, on the hour, the following week.

The fun continues with three games inspired by deadmau5’s music: Hop ‘Til You Drop, Mau5 Hau5 and Ballon Royale. All three are set throughout 19 dystopian worlds featured in the official When The Summer Dies music video. Party on with exclusive deadmau5 skins, emotes and mounts, and interact with other fans while streaming the interactive, must-experience “Encore” performance, which celebrates the launch of Core on GeForce NOW this week.

A New Challenge Calls

It wouldn’t be GFN Thursday without a new set of games coming to the cloud. Get ready to grind one of the newest titles joining the GeForce NOW library this week:

- Combat Mission Cold War (New release on Steam, Nov. 16)

- The Last Stand: Aftermath (New release on Steam, Nov. 16)

- Myth of Empires (New release on Steam, Nov. 18)

- Icarus (Beta weekend on Steam, Nov. 19)

- Assassin’s Creed: Syndicate Gold Edition (Ubisoft Connect)

- Core (Epic Games Store)

- Lost Words: Beyond the Page (Steam)

- World of Tanks (Steam)

We make every effort to launch games on GeForce NOW as close to their release as possible, but, in some instances, games may not be available immediately.

Update on ‘Bright Memory: Infinite’

Bright Memory: Infinite was added last week, but during onboarding it was discovered that enabling RTX in the game requires an upcoming operating system upgrade to GeForce NOW servers. We expect the update to be complete in December and will provide more information here when it happens.

GeForce NOW Coming to LG Smart TVs

We’re working with LG Electronics to add support for GeForce NOW to LG TVs, starting with a beta release of the app in the LG Content Store for select 2021 LG OLED, QNED MiniLED and NanoCell models. If you have one of the supported TVs, check it out and share feedback to help us improve the experience.

And finally, here’s our question for the week:

“let’s settle this once and for all: who’s the tougher enemy? aliens or zombies”

NVIDIA GeForce NOW (@NVIDIAGFN), November 17, 2021

Let us know on Twitter or in the comments below.

The post A GFN Thursday Deal: Get ‘Crysis Remastered’ Free With Any Six-Month GeForce NOW Membership appeared first on The Official NVIDIA Blog.