Computer vision and edge AI are looking beyond the pasture.

Plainsight, a San Francisco-based startup and NVIDIA Metropolis partner, is helping the meat processing industry improve its operations, from farms to forks. Plainsight pairs its vision AI platform with NVIDIA GPUs to build video analytics applications that perform precision livestock counting and health monitoring.

Cows look very similar to one another and often move quickly, shoulder to shoulder, so inaccurate livestock counts are common in the cattle industry, and often costly.

On average, a cow in the U.S. costs between $980 and $1,200, and facilities process anywhere from 1,000 to 5,000 cows per day. At this scale, even a small percentage of inaccurate counts adds up to hundreds of millions of dollars in financial losses nationally.

“By applying computer vision powered by edge AI and NVIDIA Metropolis, we’re able to automate what has traditionally been a very manual process and remove the uncertainty that comes with human counting,” said Carlos Anchia, CEO of Plainsight. “Organizations begin to optimize existing revenue streams when accuracy can be operationally efficient.”

Plainsight is working with JBS USA, one of the world’s largest food companies, to integrate vision AI into its operational processes. Vision AI-powered cattle counting was among the first solutions to be implemented.

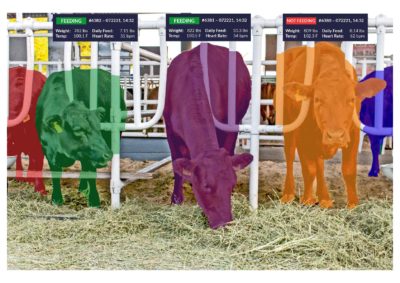

At JBS, cows are counted by fixed-position cameras, connected via a secured private network to Plainsight’s vision AI edge application, which detects, tracks and counts the cows as they move past.
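Detecting, tracking and counting animals as they pass a fixed camera is often done with a virtual counting line: each tracked animal is counted once when its position crosses the line. The sketch below is a minimal illustration of that general technique, not Plainsight's implementation. It assumes an upstream detector and tracker (not shown) already supply a stable `track_id` and a centroid `(x, y)` for each animal in each frame; the class name `LineCrossingCounter` and the parameter `line_x` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LineCrossingCounter:
    """Hypothetical counter: counts each tracked animal once when its centroid
    crosses a vertical line at x = line_x while moving in the +x direction."""

    line_x: float
    last_x: dict[int, float] = field(default_factory=dict)  # last seen x per track
    counted: set[int] = field(default_factory=set)  # track IDs already counted

    def update(self, detections: list[tuple[int, float, float]]) -> int:
        """Process one frame of (track_id, x, y) tuples; return the running total."""
        for track_id, x, _y in detections:
            prev = self.last_x.get(track_id)
            # Count only on a left-to-right crossing, and only once per track.
            if prev is not None and prev < self.line_x <= x and track_id not in self.counted:
                self.counted.add(track_id)
            self.last_x[track_id] = x
        return len(self.counted)


if __name__ == "__main__":
    counter = LineCrossingCounter(line_x=100.0)
    frames = [
        [(1, 80.0, 50.0), (2, 60.0, 55.0)],
        [(1, 105.0, 50.0), (2, 90.0, 55.0)],
        [(1, 130.0, 50.0), (2, 110.0, 55.0)],
    ]
    for frame in frames:
        total = counter.update(frame)
    print(total)  # 2
```

Counting by track ID rather than by crossing events means an animal that jitters back and forth across the line is still counted only once; a production system would also have to handle lost or swapped tracks, which this sketch ignores.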

Plainsight’s models count livestock with over 99.5 percent accuracy — about two percentage points better than manual livestock counting by humans in the same conditions, according to the company.
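To put the accuracy figures in perspective, the sketch below applies them to the facility volumes and cattle prices cited earlier. It assumes that "accuracy" can be read as a per-animal error rate, that manual counting runs at 97.5 percent (two points below 99.5 percent), and a facility at the upper end of 5,000 head per day; the function names are illustrative only.

```python
def miscounted_head(head_per_day: int, accuracy: float) -> float:
    """Expected head miscounted per day, treating accuracy as a per-animal rate."""
    return head_per_day * (1.0 - accuracy)


def value_in_question(head_per_day: int, accuracy: float, price_per_head: float) -> float:
    """Dollar value of the expected daily miscounts at a given price per head."""
    return miscounted_head(head_per_day, accuracy) * price_per_head


HEAD_PER_DAY = 5_000     # upper end of the 1,000-5,000 head/day range cited above
VISION_ACCURACY = 0.995  # "over 99.5 percent", used here as a lower bound
MANUAL_ACCURACY = 0.975  # assumption: two percentage points below vision AI

if __name__ == "__main__":
    for price in (980, 1_200):  # cited range of average cost per cow in the U.S.
        gap = value_in_question(HEAD_PER_DAY, MANUAL_ACCURACY, price) - value_in_question(
            HEAD_PER_DAY, VISION_ACCURACY, price
        )
        print(f"${price:,}/head: about ${gap:,.0f} per day")
```

Under those assumptions, the gap works out to about 100 additional miscounted head per day at a single large facility (125 versus 25), or roughly $98,000 to $120,000 worth of cattle whose count is in question.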

For an organization to adopt a vision AI solution widely, its accuracy must exceed that of humans performing the same task. By monitoring and tracking each individual animal, the models simplify an otherwise complex process.

Highly robust and accurate computer vision models are only part of the cattle counting solution. Through continued collaboration with JBS’s operations and innovation teams, Plainsight provided a path to the digital transformation needed for more accurate accountability when receiving livestock at scale, so that payment for the livestock received is as accurate as possible.

Higher Accuracy with GPMoos

For JBS, the initial proof of value involved building models and deploying them on an NVIDIA Jetson AGX Xavier Developer Kit.

After quickly reaching nearly 100 percent accuracy with their models, the teams moved into a full pilot phase. To extend the models to new and often challenging environmental conditions, the teams used Plainsight’s AI platform to annotate data and to build and deploy model improvements in preparation for a nationwide rollout.

As a partner in NVIDIA Metropolis, an application framework that brings visual data and AI together, Plainsight continues to develop and improve models and AI pipelines for the national rollout with the U.S. division of JBS.

There, Plainsight uses a technology stack built on the NVIDIA EGX platform, incorporating NVIDIA-Certified Systems with NVIDIA T4 GPUs. Plainsight’s application processes multiple video streams per GPU in real time to count and monitor livestock, supporting accurate accounting of animals as they are received.

“Innovation is fundamental to the JBS culture, and the application of AI technology to improve efficiencies for daily work routines is important,” said Frederico Scarin do Amaral, Senior Manager Business Solutions of JBS USA. “Our partnership with Plainsight enhances the work of our team members and ensures greater accuracy of livestock count, improving our operations and efficiency, as well as allowing for continual improvements of animal welfare.”

Milking It

Inaccurate counting is only part of the problem the industry faces, however. Visual inspection of livestock is arduous and error-prone, causing late detection of diseases and increasing health risks to other animals.

The same symptoms humans can identify by looking at an animal, such as gait and abnormal behavior, can be approximated by computer vision models built, trained and managed through Plainsight’s vision AI platform.

The models identify symptoms of particular diseases based on gait and on anomalies in how livestock behave when exiting transport vehicles, in a pen or in feeding areas.

“The cameras are an unblinking source of truth that can be very useful in identifying and alerting to problems otherwise gone unnoticed,” said Anchia. “The combination of vision AI, cameras and Plainsight’s AI platform can help enhance the vigilance of all participants in the cattle supply chain so they can focus more on their business operations and animal welfare improvements as opposed to error-prone manual counting.”

Legen-Dairy

In addition to a variety of other smart agriculture applications, Plainsight is using its vision AI platform to monitor and track cattle on the blockchain as digital assets.

The company is engaged in a first-of-its-kind co-innovation project with CattlePass, a platform that generates a secure, unique digital record of individual livestock in the form of a non-fungible token, or NFT.

Plainsight is applying its advanced vision AI models and platform to monitor cattle health. This suite of advanced technologies, which includes genomics, health and proof-of-life records, will provide 100 percent verifiable proof of ownership and transparency into a complete living record of the animal throughout its lifecycle.

Cattle ranchers will be able to store the NFTs in a private digital wallet while collecting and adding metadata: feed, heartbeat, health, etc. This data can then be shared with permissioned viewers such as inspectors, buyers, vets and processors.
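
The permissioned-sharing model described above can be illustrated with a small data structure. This is a hypothetical sketch, not CattlePass's schema or API: the `AnimalRecord` class and its fields and methods are invented for illustration, and it models only owner-controlled metadata and viewer permissions, not the blockchain, token minting or wallet storage.

```python
from dataclasses import dataclass, field


@dataclass
class AnimalRecord:
    """Hypothetical per-animal record with owner-granted read access."""

    token_id: str
    owner: str
    metadata: dict[str, list[str]] = field(default_factory=dict)  # e.g. feed, health
    viewers: set[str] = field(default_factory=set)  # permissioned viewers

    def add_metadata(self, key: str, value: str) -> None:
        """Append a value under a metadata key, keeping earlier entries as history."""
        self.metadata.setdefault(key, []).append(value)

    def grant(self, viewer: str) -> None:
        """Allow a viewer (e.g. an inspector, buyer, vet or processor) to read the record."""
        self.viewers.add(viewer)

    def view(self, requester: str) -> dict[str, list[str]]:
        """Return a copy of the metadata if the requester is the owner or a granted viewer."""
        if requester != self.owner and requester not in self.viewers:
            raise PermissionError(f"{requester} may not view {self.token_id}")
        return {key: list(values) for key, values in self.metadata.items()}


if __name__ == "__main__":
    record = AnimalRecord("cow-0001", owner="rancher")
    record.add_metadata("feed", "hay")
    record.grant("vet")
    print(record.view("vet"))  # {'feed': ['hay']}
```

Keeping the permission check in a single `view` method means the owner alone decides who can read the record; a real system would need to enforce these permissions at the platform level, which this in-memory sketch does not attempt.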

The data will stay with each animal throughout its life, through harvest, and will be accessible through a unique QR code printed on the beef packaging. This will give consumers visibility into the proof of life and quality of each cow, and unprecedented knowledge about its origin.

The post Cattle-ist for the Future: Plainsight Revolutionizes Livestock Management with AI appeared first on The Official NVIDIA Blog.