NVIDIA DRIVE-powered cars electrified the atmosphere this week at Auto Shanghai.

The global auto show is the oldest in China and has become the stage for debuting the latest vehicles. This year, automakers, suppliers and startups developing on NVIDIA DRIVE brought new energy to the event with a wave of intelligent electric vehicles and self-driving systems.

The automotive industry is transforming into a technology industry — next-generation lineups will be completely programmable and connected to a network, supported by software engineers who will invent new software and services for the life of the car.

Just as the battery capacity of an electric vehicle provides miles of range, the computing capacity of these new vehicles will deliver years of new features and delight.

EVs for Everyday

Automakers have typically introduced electric vehicle technology through one or two specialized models. Now, these lineups are diversifying, with an EV for every taste.

Joining the recently launched EQS flagship sedan and EQA SUV on the show floor, the Mercedes-Benz EQB adds a new flavor to the all-electric EQ family. The compact SUV brings smart electromobility in a family size, with seven seats and AI features.

Like its EQA sibling, the EQB features the latest-generation MBUX AI cockpit, powered by NVIDIA DRIVE. The high-performance system includes an augmented reality head-up display, an AI voice assistant and rich interactive graphics, so the driver can enjoy personalized, intelligent features.

EV maker Xpeng is bringing its new energy technology to the masses with the P5 sedan. It joins the P7 sports sedan in offering intelligent mobility with NVIDIA DRIVE.

The P5 will be the first vehicle to bring Xpeng's Navigation Guided Pilot (NGP) capabilities to public roads. The automated driving system runs on the automaker's full-stack XPILOT 3.5, powered by NVIDIA DRIVE AGX Xavier. The new architecture processes data from 32 sensors (two lidars, 12 ultrasonic sensors, five millimeter-wave radars and 13 high-definition cameras), combined through 360-degree dual-perception fusion to handle challenging and complex road conditions.

Also making its auto show debut was the NIO ET7, which was first unveiled during a company event in January. The ET7 is the first vehicle that features NIO’s Adam supercomputer, which leverages four NVIDIA DRIVE Orin processors to achieve more than 1,000 trillion operations per second (TOPS).

The flagship vehicle leapfrogs current model capabilities, with more than 600 miles of battery range and advanced autonomous driving. With Adam, the ET7 can perform point-to-point autonomy, using 33 sensors and high-performance compute to continuously expand the domains in which it operates — from urban to highway driving to battery swap stations.

Elsewhere on the show floor, SAIC's R Auto exhibited the intelligent ES33. This smart, futuristic vehicle, equipped with R-Tech, leverages the high performance of NVIDIA DRIVE Orin to deliver automated driving features for a safer, more convenient ride.

SAIC- and Alibaba-backed IM Motors (the name stands for "intelligence in motion") also made its auto show debut with the electric L7 sedan and an SUV, both powered by NVIDIA DRIVE. These first two vehicles will offer autonomous parking and other automated driving features, as well as a standard 93 kWh battery.

Improving Intelligence

In addition to automaker reveals, suppliers and self-driving startups showcased their latest technology built on NVIDIA DRIVE.

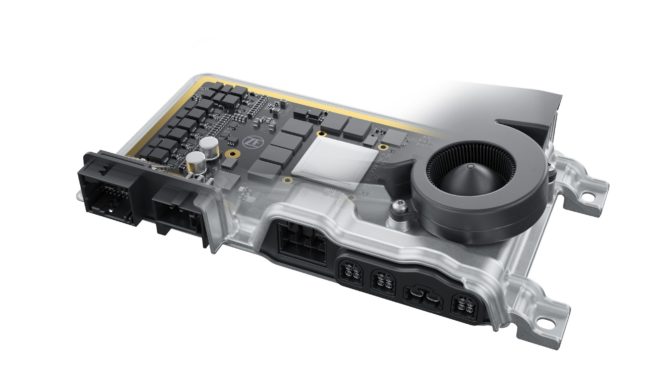

Global supplier ZF continued to push the boundaries of autonomous driving performance with the latest iteration of its ProAI Supercomputer. With NVIDIA DRIVE Orin at its core, the scalable autonomous driving compute platform supports everything from Level 2 systems to full self-driving, with up to 1,000 TOPS of performance.

Autonomous driving startup Momenta demonstrated the newest capabilities of MPilot, its autopilot and valet parking system. The software, which is designed for mass-production vehicles, runs on DRIVE Orin, improving production efficiency and streamlining time to market.

From advanced self-driving systems to smart, electric vehicles of all sizes, the NVIDIA DRIVE ecosystem stole the show this week at Auto Shanghai.

The post The Future’s So Bright: NVIDIA DRIVE Shines at Auto Shanghai appeared first on The Official NVIDIA Blog.