The next stop on the NVIDIA DRIVE roadmap is Atlan.

During today’s opening keynote of the GPU Technology Conference, NVIDIA founder and CEO Jensen Huang unveiled the upcoming generation of AI compute for autonomous vehicles, NVIDIA DRIVE Atlan. A veritable data center on wheels, Atlan centralizes the vehicle’s entire compute infrastructure into a single system-on-a-chip.

While vehicles are packing in more and more compute technology, they’re lacking the physical security that comes with data center-level processing. Atlan is a technical marvel for safe and secure AI computing, fusing all of NVIDIA’s technologies in AI, automotive, robotics, safety and BlueField data centers.

The next-generation platform will achieve an unprecedented 1,000 trillion operations per second (TOPS) of performance and an estimated SPECint score of more than 100 (SPECrate2017_int) — greater than the total compute in most robotaxis today. Atlan is also the first SoC to be equipped with an NVIDIA BlueField data processing unit (DPU) for trusted security, advanced networking and storage services.

While Atlan will not be available for a couple of years, software development is well underway. Like NVIDIA DRIVE Orin, the next-gen platform is software compatible with previous DRIVE compute platforms, allowing customers to leverage their existing investments across multiple product generations.

“To achieve higher levels of autonomy in more conditions, the number of sensors and their resolutions will continue to increase,” Huang said. “AI models will get more sophisticated. There will be more redundancy and safety functionality. We’re going to need all of the computing we can get.”

Advancing Performance at Light Speed

Autonomous vehicle technology is developing faster than in previous years, and the core AI compute must advance in lockstep to support this critical progress.

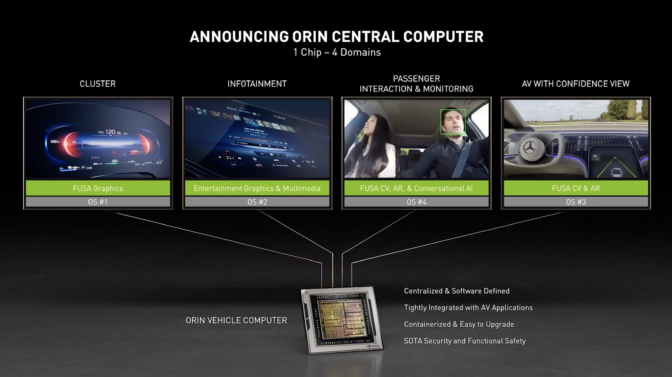

Cars and trucks of the future will require an optimized AI architecture not only for autonomous driving, but also for intelligent vehicle features like speech recognition and driver monitoring. Upcoming software-defined vehicles will be able to converse with occupants: answering questions, providing directions and warning of road conditions ahead.

Atlan is able to deliver more than 1,000 TOPS, a 4x gain over the previous generation, by leveraging NVIDIA’s latest GPU architecture, new Arm CPU cores and deep learning and computer vision accelerators. The platform architecture provides ample compute horsepower for the redundant and diverse deep neural networks that will power future AI vehicles and leaves headroom for developers to continue adding features and improvements.

This high-performance platform will run autonomous vehicle, intelligent cockpit and traditional infotainment applications concurrently.

A Guaranteed Shield with BlueField

Like every generation of NVIDIA DRIVE, Atlan is designed with the highest level of safety and security.

As a data-center-infrastructure-on-a-chip, the NVIDIA BlueField DPU is architected to handle the complex compute and AI workloads required for autonomous vehicles. By combining the industry-leading ConnectX network adapter with an array of Arm cores, BlueField offers purpose-built hardware acceleration engines with full programmability to deliver “zero-trust” security to prevent data breaches and cyberattacks.

This secure architecture will extend the safety and reliability of the NVIDIA DRIVE platform for vehicle generations to come. NVIDIA DRIVE Orin vehicle production timelines start in 2022, and Atlan will follow, sampling in 2023 and slated for 2025 production vehicles.

The post A Data Center on Wheels: NVIDIA Unveils DRIVE Atlan Autonomous Vehicle Platform appeared first on The Official NVIDIA Blog.