Looking to improve your cloud gaming experience? First, become a master of your network.

Twitch-class champions in cloud gaming shred Wi-Fi and broadband waves. They cultivate good ping to defeat two enemies — latency and jitter.

What Is Latency?

Latency or lag is a delay in getting data from the device in your hands to a computer in the cloud and back again.

What Is Jitter?

Jitter is the annoying disruption you feel when pieces of that data (called packets) get sidetracked. It's the kind of thing that leads to yelling at your router: “You’re breaking up, again!”

Why Are Latency and Jitter Important?

Cloud gaming works by rendering game images on a custom GFN server that may be miles away. Those images are then sent to a game server, and finally appear on the device in front of you.

When you fire at an enemy, your device sends data packets to these servers. The kill happens on the game on those servers, sending back commands that display the win on your screen.

And it all happens in less than the blink of an eye. In technical terms, it’s measured in “ping.”

What Is Ping?

Ping is the time in milliseconds it takes a data packet to go to the server and back.
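To make ping and jitter concrete, here is a minimal Python sketch that turns a handful of round-trip times into an average ping and a jitter figure. The sample values are made up, the function name `summarize_ping` is just for illustration, and jitter is computed as the mean change between consecutive samples, which is one common convention rather than the only definition.

```python
from statistics import mean

def summarize_ping(samples_ms):
    """Return (average ping, jitter) in milliseconds for a list of round-trip times."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to estimate jitter")
    average = mean(samples_ms)
    # Jitter here: the average change between one sample and the next.
    jitter = mean(abs(b - a) for a, b in zip(samples_ms, samples_ms[1:]))
    return average, jitter

# Hypothetical round-trip times in milliseconds.
steady = [20, 21, 20, 22, 21]
bumpy = [20, 60, 18, 75, 22]

print(summarize_ping(steady))
print(summarize_ping(bumpy))
```

The steady connection and the bumpy one can both look acceptable on a single test, but the second one's jitter is far higher, and that's what you feel as stutter.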

Anyone with the right tools and a little research can prune their ping down to less than 30 milliseconds. Novices can get pummeled with as much as 300 milliseconds of lag. It’s the difference between getting or being the game-winning kill.

Before we describe the ways to crush latency and jitter, let’s take their measure.

To Measure Latency, Test Your Ping

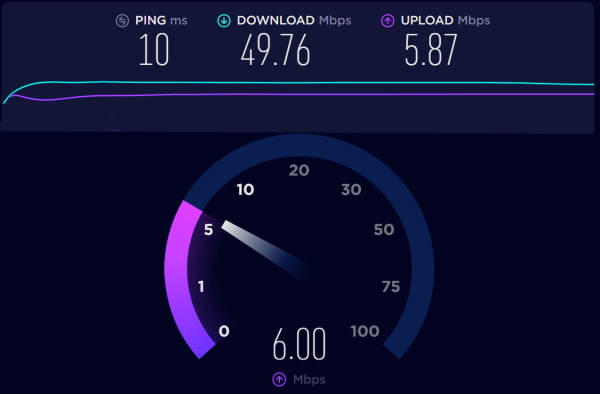

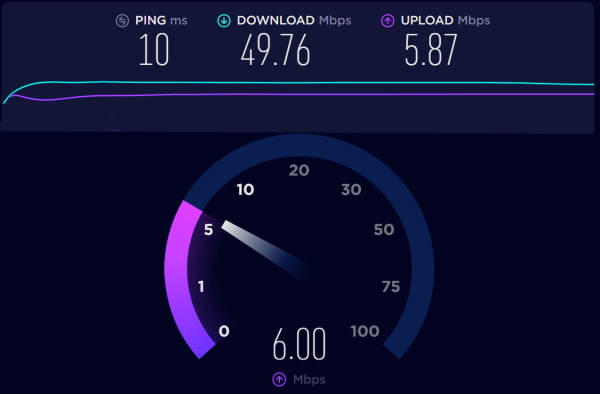

The tests at Speedtest.net and speedsmart.net are easy to take, but they only measure the latency to a generic server that may be in your network.

They don’t measure the time it takes to get data to and from the server you’re connecting to for your cloud gaming session. For a more accurate gauge of your ping, some cloud gaming services, such as NVIDIA GeForce NOW, sport their own built-in test for network latency. Those network tests will measure the ping time to and from the respective cloud gaming server.
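If you're curious what measuring ping to one specific server looks like, here is a rough Python sketch. It times a TCP handshake, which takes about one network round trip, as a stand-in for a real ping. This is an assumption-laden approximation and not how GeForce NOW or the speed test sites measure latency. The function names are hypothetical, and the first attempt may also include DNS lookup time.

```python
import socket
import time

def tcp_ping_ms(host, port=443, timeout=2.0):
    """Time one TCP handshake to host:port and return it in milliseconds."""
    start = time.perf_counter()
    # create_connection returns once the handshake completes,
    # which takes roughly one network round trip.
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0

def best_of(host, port=443, attempts=5):
    """Return the lowest handshake time over several attempts."""
    return min(tcp_ping_ms(host, port) for _ in range(attempts))
```

Calling `best_of("example.com")` would report the best of five tries, where `example.com` is only a placeholder for a host you actually care about. Taking the minimum filters out one-off hiccups. The spread between attempts hints at your jitter.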

Blazing Wi-Fi and broadband can give your ping some zing.

My Speedtest result showed a blazing 10 milliseconds, while Speedsmart measured a respectable 23ms.

Your mileage may vary, too. If your ping climbs much above 30ms, the first thing to do is check your ISP or network connection. Still having trouble? Try rebooting. Turn your device and your Wi-Fi network off for 10 seconds, then turn them back on and run the tests again.

How to Reduce Latency and Ping

If the lag remains, more can be done for little or no cost, and new capabilities are coming down the pike that will make things even better.

First, try to get off Wi-Fi. Simply running a standard Ethernet cable from your Wi-Fi router to your device can slash latency big time.

If you can’t do that, there are still plenty of ways to tune your Wi-Fi.

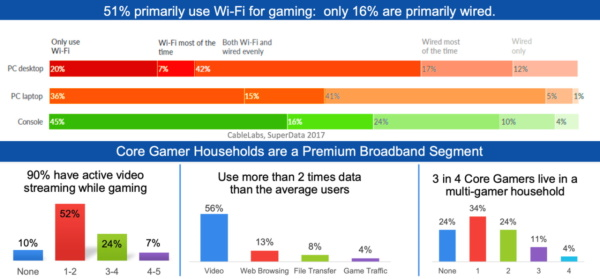

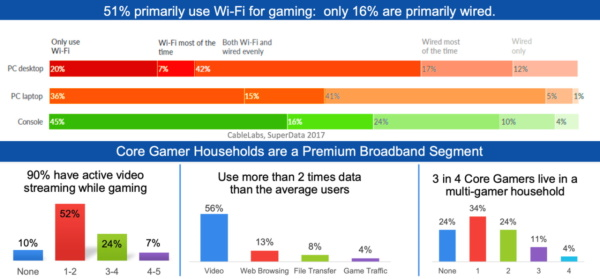

Ethernet is faster, but research from CableLabs shows most gamers use Wi-Fi.

Your ping may be stuck in heavy traffic. Turn off anything else running on your Wi-Fi network, especially streaming videos, work VPNs — hey, it’s time for play — and anyone trying to download the Smithsonian archives.

A High Five Can Avoid Interference

If rush-hour traffic is unavoidable on your home network, you can still create your own diamond lane.

Unless you have an ancient Wi-Fi access point (AP for short, often referred to as a router) suitable for display in a museum, it should support both the 2.4GHz and 5GHz bands.

You can claw back many milliseconds of latency if you shunt most of your devices to the 2.4GHz band and save 5GHz for cloud gaming.

Apps called Wi-Fi analyzers on the Android and iTunes stores can even determine which slices of your Wi-Fi airspace are more or less crowded. A nice fat 80MHz channel in the 5GHz band without much going on nearby is an ideal runway for cloud gaming.
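As a rough illustration of what a Wi-Fi analyzer is doing, the Python sketch below counts nearby networks in each 80MHz slice of the 5GHz band and picks the quietest one. The scan data is invented, and the logic is a simplification: it ignores signal strength, and which channels you can actually use depends on your country and your AP.

```python
# 80MHz channels in the 5GHz band, keyed by center channel number, with the
# 20MHz channels each one spans. Availability varies by country and by AP.
WIDE_CHANNELS = {
    42: (36, 40, 44, 48),
    58: (52, 56, 60, 64),
    106: (100, 104, 108, 112),
    122: (116, 120, 124, 128),
    138: (132, 136, 140, 144),
    155: (149, 153, 157, 161),
}

def least_crowded(neighbor_channels):
    """Return (center channel, neighbor count) for the quietest 80MHz channel."""
    counts = {
        center: sum(ch in span for ch in neighbor_channels)
        for center, span in WIDE_CHANNELS.items()
    }
    # Ties go to the first channel listed in WIDE_CHANNELS.
    center = min(counts, key=counts.get)
    return center, counts[center]

# Hypothetical scan: the primary channel of each nearby network.
scan = [36, 36, 44, 149, 153, 157, 52, 100]
print(least_crowded(scan))
```

With this made-up scan, the popular low and high ends of the band are busy, so the sketch lands on a slice in the middle that no neighbor is using.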

Quash Latency with QoS

If it’s less than a decade old, your AP probably has something called quality of service, or QoS.

QoS can give some apps higher priority than others. APs vary widely in how they implement QoS, but it’s worth checking to see if your network can be set to give priority to cloud gaming.
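The idea behind QoS can be sketched as a priority queue: when several packets are waiting, the most urgent one goes out first. The toy Python model below is only an illustration of that principle. The traffic labels and the `PriorityLink` class are hypothetical, and real APs implement QoS in their own ways.

```python
import heapq
from itertools import count

# Lower number = higher priority. These labels are illustrative only.
PRIORITY = {"cloud_gaming": 0, "video_call": 1, "download": 2}

class PriorityLink:
    """Toy strict-priority queue: the most urgent waiting packet leaves first."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker keeps arrival order within a class

    def enqueue(self, kind, payload):
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._order), kind, payload))

    def dequeue(self):
        _, _, kind, payload = heapq.heappop(self._heap)
        return kind, payload

link = PriorityLink()
link.enqueue("download", "chunk-1")
link.enqueue("download", "chunk-2")
link.enqueue("cloud_gaming", "controller-input")
print(link.dequeue())
```

Even though the controller input arrived last, it jumps ahead of the two download chunks already in line.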

NVIDIA provides a list of the recommended APs it’s tested with GeForce NOW as well as a support page for how to apply QoS and other techniques.

Take a Position Against Latency

If latency persists, see if you can get physically closer to your AP. Can you move to the same room?

If not, consider buying a mesh network. That’s a collection of APs you can string around your home, typically with Ethernet cables, so you have an AP in every room where you use Wi-Fi.

Some folks suggest trying different positions for your router and your device to get the sweetest reception. But others say this will only shave a millisecond or so off your lag at best, so don’t waste too much time playing Wi-Fi yoga.

Stay Tuned for Better Ping

The good news is more help is on the way. The latest Wi-Fi version, Wi-Fi 6, borrows a connection technology called OFDMA from cellular networks. It significantly reduces signal interference, which in turn reduces latency.

So, if you can afford it, get a Wi-Fi 6 AP, but you’ll have to buy a gaming device that supports Wi-Fi 6, too.

Next year, Wi-Fi 6E devices should be available. They’ll sport a new 6GHz Wi-Fi band where you can find fresh channels for gaming.

Coping with Internet Latency

Your broadband connection is the other part of the net you need to set up for cloud gaming. First, make sure your internet plan matches the kind of cloud gaming you want to play.

These days a basic plan tops out at about 15 Mbits/second. That’s enough if your screen can display 1280×720 pixels, aka standard high definition or 720p. If you want a smoother, more responsive experience, step up to 1080p resolution (full high def) or even 4K ultra-high def, which requires at least 25 Mbits/s. More is always better.
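Those thresholds boil down to a simple lookup. The Python sketch below uses only the two figures quoted above. The function name and tier labels are made up for illustration, and a real service may set its own requirements.

```python
# Thresholds taken from the figures in this article, in Mbits/second.
MIN_720P = 15
MIN_1080P_OR_HIGHER = 25

def supported_tier(plan_mbps):
    """Map a plan's download speed to the streaming tier it should sustain."""
    if plan_mbps >= MIN_1080P_OR_HIGHER:
        return "1080p or higher"
    if plan_mbps >= MIN_720P:
        return "720p"
    return "below the 720p minimum"

for speed in (10, 15, 50):
    print(speed, "Mbits/s:", supported_tier(speed))
```

Keep in mind the number that matters is the speed you actually measure at your device, not the one printed on your bill.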

If you’re playing on a smartphone, 5G cellular services are typically the fastest links, but in some areas a 4G LTE service may be well optimized for gaming. It’s worth checking the options with your cellular provider.

When logging into your cloud gaming service, choosing the closest server can make a world of difference.

For example, using the speedsmart.net test gave me a ping of 29ms from a server in San Francisco, 41 miles away. Choosing a server in Atlanta, more than 2,000 miles away, bloated my ping to 80ms. And forget about even trying to play on a server on another continent.
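Part of that gap is plain physics. The back-of-the-envelope Python sketch below estimates the minimum round trip imposed by distance alone. It assumes signals travel through fiber at roughly 200,000 km per second and follow a straight line, which real cable routes never do, so treat the results as a floor rather than a prediction.

```python
KM_PER_MILE = 1.609344
# Assumption: light in optical fiber covers roughly 200,000 km per second,
# about two-thirds of its speed in a vacuum. That is 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

def min_round_trip_ms(distance_miles):
    """Lower bound on ping from propagation alone, ignoring routing and queuing."""
    one_way_km = distance_miles * KM_PER_MILE
    return 2 * one_way_km / FIBER_KM_PER_MS

for city, miles in [("San Francisco", 41), ("Atlanta", 2000)]:
    print(f"{city}: at least {min_round_trip_ms(miles):.1f} ms")
```

Under these assumptions, distance accounts for less than a millisecond of the San Francisco ping but roughly 32 milliseconds of the Atlanta one. The rest comes from routers, queues and the last hop into your home.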

GeForce NOW members can sit back and relax on this one. The service automatically picks the fastest server for you, even if the server is a bit farther away.
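Picking by measured ping rather than by distance is easy to picture in code. This Python sketch is purely illustrative: the server names and numbers are invented, and it is not a description of how GeForce NOW actually makes its choice.

```python
def fastest_server(ping_ms_by_server):
    """Return the server name with the lowest measured ping."""
    return min(ping_ms_by_server, key=ping_ms_by_server.get)

# Hypothetical measurements: name -> (distance in miles, ping in ms).
servers = {
    "nearby-but-congested": (40, 45.0),
    "farther-but-clear": (350, 28.0),
}
pings = {name: ping for name, (_, ping) in servers.items()}
print(fastest_server(pings))
```

Here the farther server wins because its measured ping is lower, which is the outcome that counts once the game starts.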

A Broadband Horizon for Cloud Gaming

Internet providers want to tame the latency and jitter in their broadband networks, too.

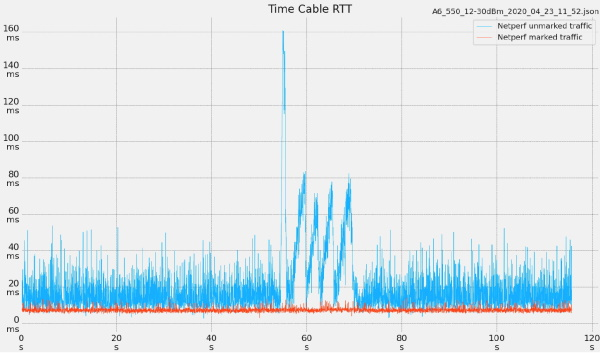

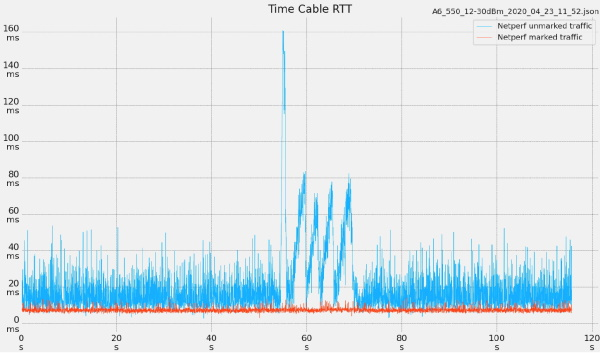

Cable operators plan to upgrade their software, called DOCSIS 3.1, to create low-latency paths for cloud gamers. A version of the software, called L4S, for other kinds of internet access providers and Wi-Fi APs is also in the works.

Broadband latency should shrink to a fraction of its size (from blue to red in the chart) once low-latency DOCSIS 3.1 software is available and supported.

The approach requires some work on the part of application developers and cloud gaming services. But the good news is engineers and developers across all these companies are engaged in the effort and it promises dramatic reductions in latency and jitter, so stay tuned.

Now you know the basics of navigating network latency to become a champion cloud gamer, so go hit “play.”

Follow GeForce NOW on Facebook and Twitter and stay up to date on the latest features and game launches.