Call it Moneyball for deep learning.

New York Times writer Cade Metz tells the funny, inspiring — and ultimately triumphant — tale of how a dogged group of AI researchers bet their careers on the long-dismissed technology of deep learning.

In his new book, Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World, Metz reveals the human personalities behind the rise of AI, with a cast of well-known characters that includes Geoffrey Hinton, Yann LeCun, Yoshua Bengio and more.

The book begins with Metz’s favorite anecdote: how Hinton, a professor at the University of Toronto, and two students, Alex Krizhevsky and Ilya Sutskever, moved into Google as large technology companies began to see the merits of AI.

Another fascinating story focuses on UC Berkeley professor Sergey Levine’s work on reinforcement learning at Google. He helped set up the “arm farm” — multiple robotic arms that learn how to successfully pick up items by trying and failing repeatedly.

Levine left the arms to do their work over the weekend, and came back Monday to what looked like a crime scene — one of the arms had failed to pick up lipstick correctly, resulting in what looked like bloodstains all over the room.

For more stories about the minds behind today’s technology, Genius Makers is out now.

Key Points From This Episode:

- Metz’s book captures the nuance and contradictions within the AI community, where experts can hold sharply opposed views on emerging technology. Metz gives the example of scientists Frank Rosenblatt and Marvin Minsky, who firmly disagreed over the potential of neural networks.

- One recurring theme throughout Genius Makers is that of “old ideas are new,” a mantra at Hinton’s university lab. It represented his belief that if a scientist believed an idea could work, even if the chance seemed slim, they should keep trying until proven wrong. It’s a belief that’s served him well throughout his long career.

Tweetables:

“Part of my mission on earth is to show people that engineers, like my father, are real, interesting, fascinating people.” — Cade Metz [1:37]

“These technologies are continuing to progress at an incredibly fast rate and the questions that they raise have not been solved.” — Cade Metz [31:22]

You Might Also Like

Deep Learning Pioneer Andrew Ng on AI as the New Electricity

Purple shirts, haircuts and cats. How are these three all related? According to deep learning pioneer Andrew Ng, they all played a part in AI’s growing presence in our lives. Ng, formerly of Google and Baidu, shares his thoughts on AI being the new electricity.

NVIDIA Chief Scientist Bill Dally on Where AI Goes Next

Bill Dally, chief scientist at NVIDIA and one of the pillars of the computer science world, talks about his perspective on the world of deep learning and AI in general.

Investor, AI Pioneer Kai-Fu Lee on the Future of AI in the US, China

Kai-Fu Lee, developer of the world’s first speaker-independent continuous speech recognition system in 1988, has led teams at Apple, Silicon Graphics, Microsoft and Google. Lee also started Sinovation Ventures, managing a $2 billion fund focusing on tech startups in China and the U.S. He talks about his latest book, AI Superpowers: China, Silicon Valley, and the New World Order.

Tune in to the AI Podcast

Get the AI Podcast through iTunes, Google Podcasts, Google Play, Castbox, DoggCatcher, Overcast, PlayerFM, Pocket Casts, Podbay, PodBean, PodCruncher, PodKicker, Soundcloud, Spotify, Stitcher and TuneIn. If your favorite isn’t listed here, drop us a note.

Make the AI Podcast Better

Have a few minutes to spare? Fill out this listener survey. Your answers will help us make a better podcast.

The post In ‘Genius Makers’ Cade Metz Tells Tale of Those Behind the Unlikely Rise of Modern AI appeared first on The Official NVIDIA Blog.

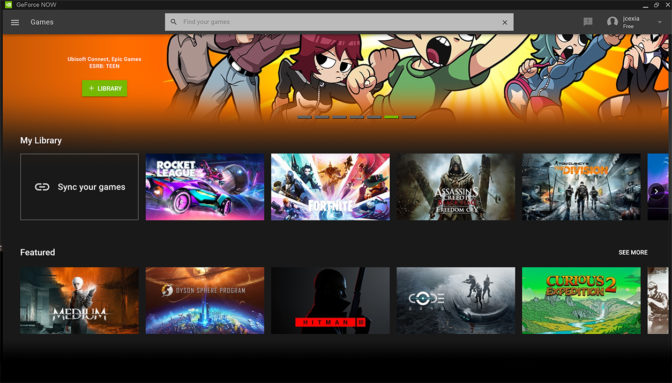

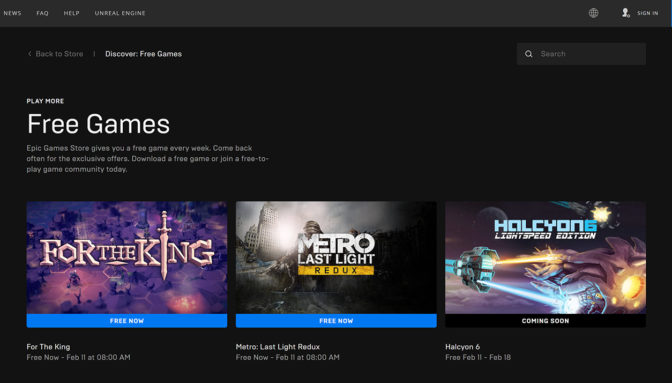

Sync your entire Steam game library in about one minute.


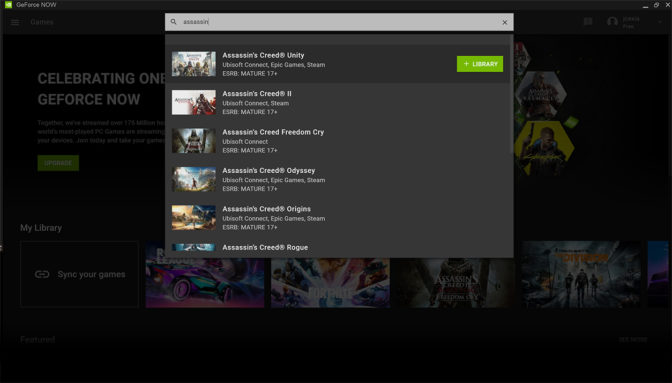

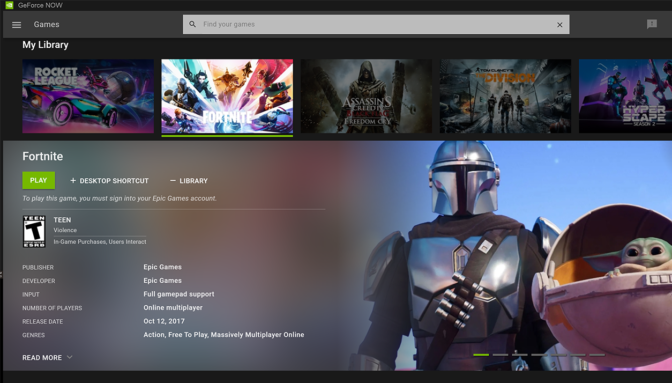

Discover a game you want to play? Find which store it’s supported on from the game page.


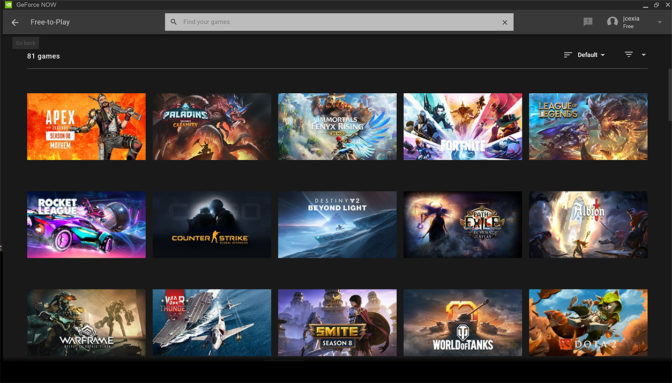

Members can build their library with 80+ (and counting) free-to-play games.
