Got a conflict with your 2pm appointment? Just spin up a quick assistant that takes good notes and, when your boss asks about you, even identifies itself and explains why you aren’t there.

Nice fantasy? No, it’s one of many use cases a team of some 50 ninja programmers, AI experts and 20 beta testers is exploring with Dasha. And they’re looking for a few good developers to join a beta program for the product that shows what’s possible with conversational AI on any device with a mic and a speaker.

“Conversational AI is going to be [seen as] the biggest paradigm shift of the last 40 years,” the chief executive and co-founder of Dasha, Vlad Chernyshov, wrote in a New Year’s tweet.

Using the startup’s software, its partners are already creating cool prototypes that could help make that prediction come true.

For example, a bank is testing Dasha to create a self-service support line. And the developer of a popular console game is using it to create an in-game assistant that players can consult via a smartwatch on their character’s wrist.

Custom Conversations Created Quickly

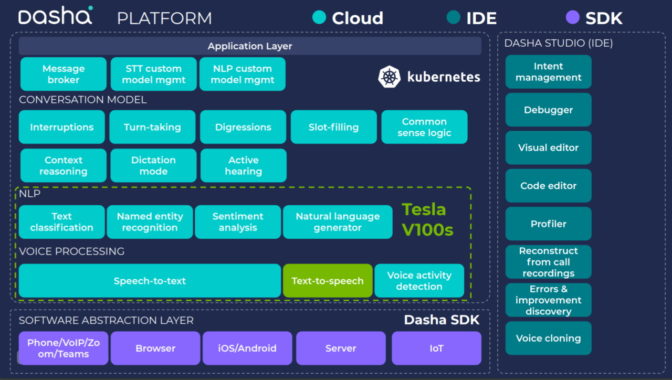

Dasha’s development tool lets an average IT developer use familiar library calls to design custom dialogs for any business process. They can tap into the startup’s unique capabilities in speech recognition, synthesis and natural-language processing running on NVIDIA GPUs in the cloud.

“We built all the core technology in house because today’s alternatives have too high a latency, the voice does not sound natural or the level of controls is not flexible enough for what customers want to do,” Chernyshov said.

The startup prides itself on software that both creates and understands speech with natural inflections of emotion, breathing, and even the “ums” and “ahs” that pepper real conversations. That level of fluency is helping early users get better responses from programs like Dasha Delight, which automates post-sales satisfaction surveys.

Delighting Customers with Conversational AI

A bank that caters to small businesses gave Delight to its two-person team handling customer satisfaction surveys. With automated surveys, they covered more customers and even developed a process to respond to complaints, sometimes with problem fixes in less than an hour.

Separately, the startup developed a smartphone app called Dasha Assistant. It uses conversational AI to screen out unwanted sales calls but put through others like the pizza man confirming an order.

Last year, the company even designed an app to automate contact tracing for COVID-19.

An Ambitious Mission in AI

While one team of developers pioneers such new use cases, a separate group of researchers at Dasha pushes the envelope in realistic speech synthesis.

“We have a mission of going after artificial general intelligence, the ability for computers to understand like humans do, which we believe comes through developing systems that speak like humans do because speech is so closely tied to our intelligence,” said Chernyshov.

Below: Chernyshov demos a customer service experience with Dasha’s conversational AI.

He’s had a passion for dreaming big ideas and coding them up since 2007. That’s when he built one of the first instant messaging apps for Android at his first startup while pursuing his computer science degree in the balmy southern Siberian city of Novosibirsk, in Russia.

With no venture capital community nearby, the startup died, but that didn’t stop a flow of ideas and prototypes.

By 2017, Chernyshov had learned how to harness AI and wrote a custom program for a construction company. It used conversational AI to automate the work of seeking a national network of hundreds of dealers.

“We realized the main thing preventing mainstream adoption of conversational AI was that most automated systems were really stupid and nobody was focused on making them comfortable and natural to talk with,” he said.

A 7x Speed Up With GPUs

To get innovations to the market quickly, Dasha runs all AI training and inference work on NVIDIA A100 Tensor Core GPUs and earlier-generation GPUs.

The A100 trains Dasha’s latest models for speech synthesis in a single day, 7x faster than previous-generation GPUs. In one of its experiments, Dasha trained a Transformer model 1.85x faster using four A100s than with eight V100 GPUs.
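For readers who want to put the Transformer figure on a per-GPU footing, here is a rough back-of-the-envelope sketch. It assumes training throughput scales linearly with the number of GPUs, which real multi-GPU jobs rarely do exactly, so treat the result as a ballpark rather than a measured number. The function name and parameters are illustrative, not anything Dasha or NVIDIA published.

```python
def per_gpu_speedup(overall_speedup: float, new_gpus: int, old_gpus: int) -> float:
    """Estimate the per-GPU speedup implied by an overall speedup.

    Assumption (not from the article): throughput scales linearly
    with GPU count on both the old and the new system.
    """
    return overall_speedup * old_gpus / new_gpus


# Figures quoted in the article: 1.85x faster on four A100s than on eight V100s.
a100_vs_v100 = per_gpu_speedup(1.85, new_gpus=4, old_gpus=8)
print(f"Implied per-GPU speedup: {a100_vs_v100:.2f}x")
```

Under that linear-scaling assumption, half as many GPUs finishing 1.85x sooner works out to roughly 3.7x more work per A100 than per V100.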

“We would never get here without NVIDIA. Its GPUs are an industry standard, and we’ve been using them for years on AI workflows,” he said.

NVIDIA software also gives Dasha traction. The startup eases the job of running AI in production with TensorRT, NVIDIA code that can squeeze the super-sized models used in conversational AI so they deliver inference results faster with less memory and without losing accuracy.

Mellotron, a model for speech synthesis developed by NVIDIA, gave Dasha a head start creating its custom neural networks for fluent systems.

“We’re always looking for better model architecture to do faster inference and speech synthesis, and Mellotron is superior to other alternatives,” he said.

Now, Chernyshov is looking for a few ninja programmers in a handful of industries he wants represented in the beta program for Dasha. “We want to make sure every sector gets a voice,” he quipped.

The post Now Hear This: Startup Gives Businesses a New Voice appeared first on The Official NVIDIA Blog.