Bringing more gaming capabilities to millions more gamers, NVIDIA on Tuesday announced more than 70 new laptops will feature GeForce RTX 30 Series Laptop GPUs and unveiled the NVIDIA GeForce RTX 3060 graphics card for desktops.

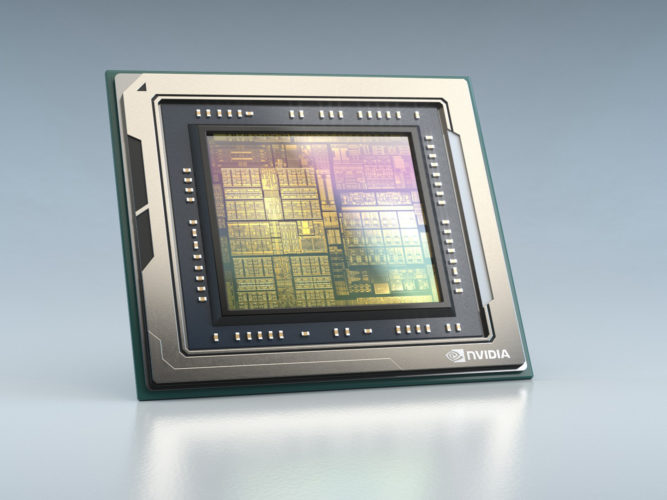

All are powered by the award-winning NVIDIA Ampere GPU architecture, the second generation of RTX with enhanced Ray Tracing Cores, Tensor Cores, and new streaming multiprocessors.

The announcements were among the highlights of a streamed presentation from Jeff Fisher, senior vice president of NVIDIA’s GeForce business.

Amid the unprecedented challenges of 2020, “millions of people tuned into gaming — to play, create and connect with one another,” Fisher said. “More than ever, gaming has become an integral part of our lives.” Among the stats he cited:

- Steam saw its number of concurrent users more than double since 2018

- Discord, a messaging and social networking service most popular with gamers, has seen monthly active users triple to 140 million from two years ago

- In 2020 alone, more than 100 billion hours of gaming content have been watched on YouTube

- Also in 2020, viewership of esports reached half a billion people

Meanwhile, NVIDIA has been delivering a series of major gaming advancements, Fisher explained.

RTX ‘the New Standard’

Two years ago, NVIDIA introduced a breakthrough in graphics: real-time ray tracing and AI-based DLSS (deep learning super sampling), together called RTX, he said.

NVIDIA quickly partnered with Microsoft and top developers and game engines to bring the visual realism of movies to fully interactive gaming, Fisher said.

In fact, 36 games are now powered by RTX. They include the #1 Battle Royale game, the #1 RPG, the #1 MMO and the #1 best-selling game of all time – Minecraft.

Now, we’re announcing more games that support RTX technology, including DLSS, which is coming to both Call of Duty: Warzone and Square Enix’s new IP, Outriders. And Five Nights at Freddy’s: Security Breach and F.I.S.T.: Forged in Shadow Torch will be adding ray tracing and DLSS.

For more details, read our January 2021 RTX Games article.

“The momentum is unstoppable,” Fisher said. “As consoles and the rest of the ecosystem are now on board, ray tracing is the new standard.”

Last year, NVIDIA launched its second generation of RTX, the GeForce RTX 30 Series GPUs. Based on the NVIDIA Ampere architecture, the new generation represents “our biggest generational leap ever,” Fisher said.

NVIDIA built NVIDIA Reflex to deliver the lowest system latency for competitive gamers – from mouse click to display. Since Reflex’s launch in September, a dozen games have added support.

Fisher announced that Overwatch and Rainbow Six Siege are also adopting NVIDIA Reflex. Now, 7 of the top 10 competitive shooters support Reflex.

And over the past four months, NVIDIA has launched four NVIDIA Ampere architecture-powered graphics cards, from the ultimate BFGPU — the GeForce RTX 3090 priced at $1,499 — to the GeForce RTX 3060 Ti at $399.

“Ampere has been our fastest selling architecture ever, selling almost twice as much as our prior generation,” Fisher said.

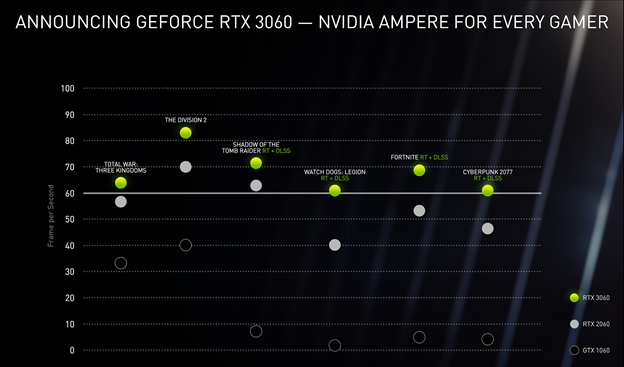

GeForce RTX 3060: An NVIDIA Ampere GPU for Every Gamer

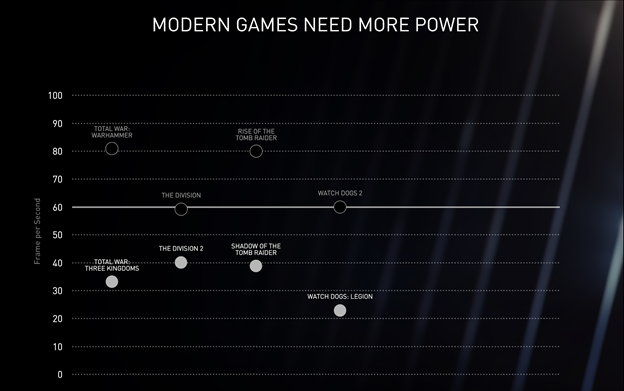

With gaming now a key part of global culture, the new GeForce RTX 3060 brings the power of the NVIDIA Ampere architecture to every gamer, Fisher said.

“The RTX 3060 offers twice the raster performance of the GTX 1060 and 10x the ray-tracing performance,” Fisher said, noting that the GTX 1060 is the world’s most popular GPU. “The RTX 3060 powers the latest games with RTX On at 60 frames per second.”

The RTX 3060 has 13 shader teraflops, 25 RT teraflops for ray tracing, and 101 tensor teraflops to power DLSS, an NVIDIA technology introduced in 2019 that uses AI to accelerate games. And it boasts 12 gigabytes of GDDR6 memory.

“With most of the installed base underpowered for the latest games, we’re bringing RTX to every gamer with the GeForce RTX 3060,” Fisher said.

The GeForce RTX 3060 starts at just $329 and will be available worldwide in late February.

“Amazing Gaming Doesn’t End at the Desktop”

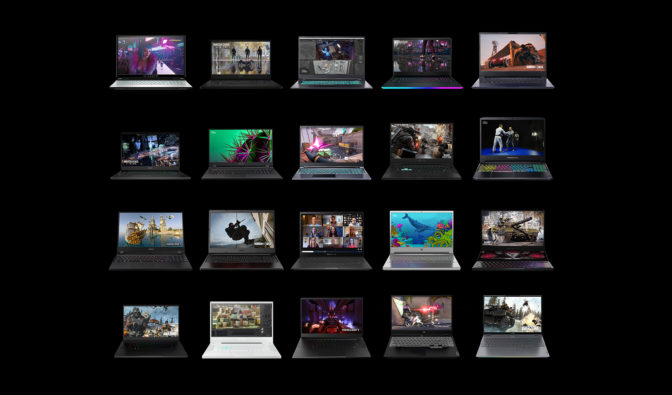

NVIDIA also announced a new generation of NVIDIA Ampere architecture-powered laptop GPUs.

Laptops, Fisher explained, are the fastest-growing gaming platform. There are now 50 million gaming laptops, which powered over 14 billion gaming hours last year.

“Amazing gaming doesn’t end at the desktop,” Fisher said.

These high-performance machines also meet the needs of 45 million creators and everyone working and studying from home, Fisher said.

The new generation of NVIDIA Ampere architecture-powered laptops, with second-generation RTX and third-generation Max-Q technologies, delivers twice the power efficiency of previous generations.

Efficiency Is Paramount in Laptops

That’s why NVIDIA introduced Max-Q four years ago, Fisher explained.

Max-Q is a system design approach that delivers high performance in thin and light gaming laptops.

“It has fundamentally changed how laptops are built. Every aspect (the CPU, GPU, software, PCB design, power delivery, thermals) is optimized for power and performance,” Fisher said.

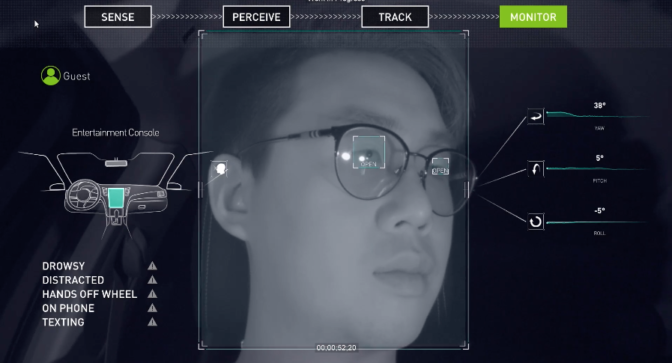

NVIDIA’s third-gen Max-Q technologies use AI and new system optimizations to make high-performance gaming laptops faster and better than ever, he said.

Fisher introduced Dynamic Boost 2.0, which for the first time uses AI to shift power among the CPU, the GPU and, now, GPU memory.

“So your laptop is constantly optimizing for maximum performance,” Fisher said.

Fisher also introduced WhisperMode 2.0, which delivers a new level of acoustic control for gaming laptops.

Pick your desired acoustics, and WhisperMode 2.0’s AI-powered algorithms manage the CPU, GPU, system temperature and fan speeds to “deliver great acoustics at the best possible performance,” Fisher explained.

Another new feature, Resizable BAR, uses the advanced capabilities of PCI Express to boost gaming performance.

Games use GPU memory for textures, shaders and geometry — constantly updating as the player moves through the world.

Today, only part of the GPU’s memory can be accessed at any one time by the CPU, requiring many memory updates, Fisher explained.

With Resizable BAR, the CPU can access the entire GPU memory at once, allowing multiple updates to happen at the same time and improving performance, Fisher said.

Resizable BAR will also be supported on GeForce RTX 30 Series graphics cards for desktops, starting with the GeForce RTX 3060. NVIDIA and GPU partners are readying VBIOS updates for existing GeForce RTX 30 Series graphics cards starting in March.

Finally, NVIDIA DLSS offers a breakthrough for gaming laptops. It uses AI and RTX Tensor Cores to deliver up to 2x the performance in the same power envelope.

World’s Fastest Laptops for Gamers and Creators

Starting at $999, RTX 3060 laptops are “faster than anything on the market today,” Fisher said.

They’re 30 percent faster than the PlayStation 5 and deliver 90 frames per second in the latest games at 1080p with ultra settings, Fisher said.

Starting at $1,299, GeForce RTX 3070 laptops are “a 1440p gaming beast.”

With 1440p offering nearly twice the pixels of 1080p, this new generation of laptops “provides the perfect mix of high-fidelity graphics and great performance.”

And starting at $1,999, GeForce RTX 3080 laptops will come with up to 16 gigabytes of GDDR6 memory.

They’re “the world’s fastest laptop for gamers and creators,” Fisher said, delivering hundreds of frames per second with RTX on.

As a result, laptop gamers will be able to play at 240 frames per second in top titles like Overwatch, Rainbow Six Siege, Valorant and Fortnite, Fisher said.

Availability

Manufacturers worldwide, starting Jan. 26, will begin shipping over 70 different GeForce RTX gaming and creator laptops featuring GeForce RTX 3080 and GeForce RTX 3070 laptop GPUs, followed by GeForce RTX 3060 laptop GPUs on Feb. 2.

The GeForce RTX 3060 graphics card will be available in late February, starting at $329, as custom boards — including stock-clocked and factory-overclocked models — from top add-in card providers such as ASUS, Colorful, EVGA, Gainward, Galaxy, Gigabyte, Innovision 3D, MSI, Palit, PNY and Zotac.

Look for GeForce RTX 3060 GPUs at major retailers and e-tailers, as well as in gaming systems by major manufacturers and leading system builders worldwide.

“RTX is the new standard, and the momentum continues to grow,” Fisher said.

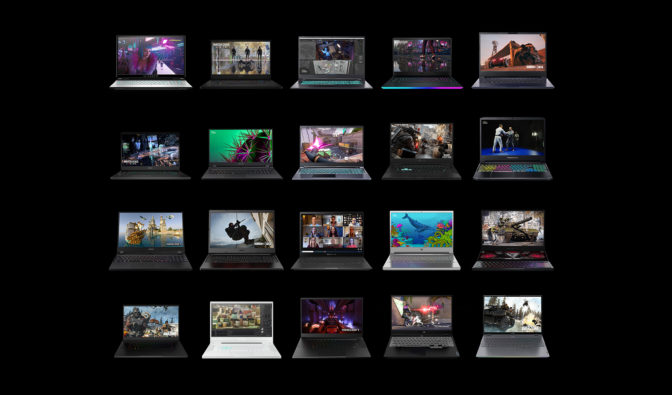

NVIDIA Studio laptops with new RTX 30 Series Laptop GPUs offer improved performance.
