AI is the largest technology force of our time, with the most potential to transform industries. It will bring new intelligence to healthcare, education, automotive, retail and finance, creating trillions of dollars in a new AI economy.

As businesses look ahead to 2021 priorities, now’s a great time to look back at where the world stands on global AI adoption.

Retailers like Walmart and Tesco are mining new AI opportunities for product forecasting, supply chain management, intelligent store installations and predicting consumer buying trends. Healthcare players in the age of COVID are trying to speed scientific research and vaccine development.

Meantime, educators are employing AI to train a data-savvy workforce. And legions of businesses are examining how AI can help them adapt to remote work and distance collaboration.

Yet mainstream adoption of AI continues to skew toward big tech companies, automotive and retail, which are attempting to scale across their organizations instead of investing in skunkworks projects, according to a 2019 McKinsey global survey of about 2,000 organizations.

We asked some of the top experts at NVIDIA where they see the next big things in AI happening as companies parse big data and look for new revenue opportunities. NVIDIA works with thousands of AI-focused startups, ISVs, hardware vendors and cloud companies, as well as companies and research organizations around the world. These broad collaborations offer a bird’s eye view into what’s happening and where.

Here’s what our executives had to say:

CLEMENT FARABET

Vice President, NVIDIA AI Infrastructure

AI as a Compiler: As AI training algorithms get faster and more robust, with richer tooling, AI will become equivalent to a compiler: developers will organize their datasets as code and use AI to compile them into models. The end state of this is a large ecosystem of tooling and platforms (just like today’s tools for regular software) that enables more and more non-experts to “program” AIs. We’re partially there, but I think the end state will look very different from where we are today. Think compilation in seconds to minutes instead of days of training. And we’ll have very efficient tools to organize data, like we do for code via git today.

AI as a Driver: AI will be assisting most vehicles to move around the physical world and continuously learning from their environments and co-pilots (human drivers) to improve, on their way to becoming fully independent drivers. The value of this is there today and will only grow larger. The end state is commoditized level 4 autonomous vehicles, relying on cheap enough sensor platforms.

BRYAN CATANZARO

Vice President, NVIDIA Applied Deep Learning Research

Conversational AI: Chatbots might seem so last decade when it comes to video games designed to take advantage of the powerful graphics cards and CPUs in today’s computers. AI has for some time been used to generate responsive, adaptive or intelligent behaviors, primarily in non-player characters. Conversational AI will take gameplay further by allowing real-time interaction via voice to flesh out character-driven approaches. When your in-game enemies start to talk and think like you, watch out.

Multimodal Synthesis: Can a virtual actor win an Academy Award? Advances in multimodal synthesis — the AI-driven art of creating speech and facial expressions from data — will be able to create characters that look and sound as real as a Meryl Streep or Dwayne Johnson.

Remote Work: AI solutions will make working from home easier and more reliable (and perhaps more pleasant) through better videoconferencing, audio quality and auto-transcription capabilities.

ANIMA ANANDKUMAR

Director of ML Research, NVIDIA, and Bren Professor at Caltech

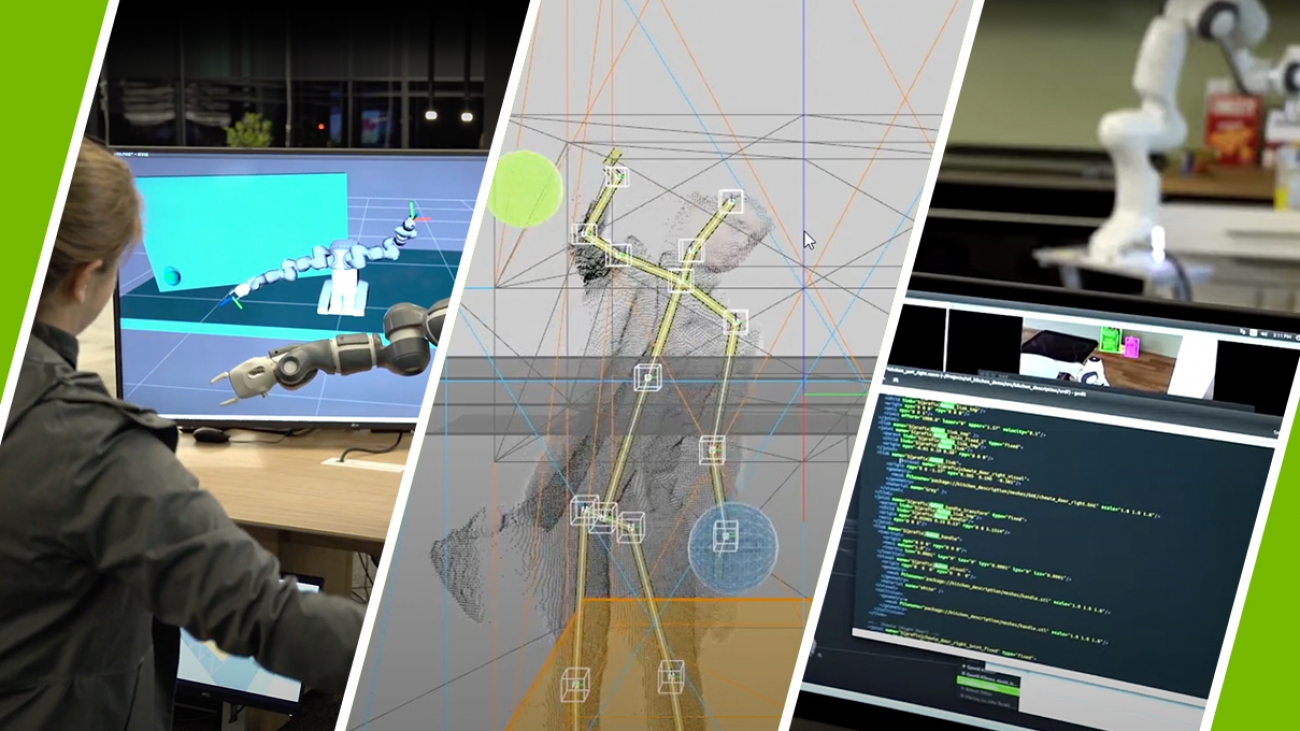

Embodied AI: The mind and body will start coming together. We will see greater adaptivity and agility in our robots as we train them to do more complex and diverse tasks. High-fidelity simulation is critical here to overcome the dearth of real data.

AI4Science: AI will continue to be integrated into scientific applications at scale. Traditional solvers and pipelines will ultimately be replaced entirely with AI to achieve speedups of as much as 1,000x. This will require combining deep learning with domain-specific knowledge and constraints.

ALISON LOWNDES

Artificial Intelligence, NVIDIA Developer Relations

Democratized AI: The more people who have access to a dataset and are trained in how to mine it, the more innovations will emerge. Nations will begin to solidify AI strategies, while universities and colleges will work in partnership with private industry to create more end-user mobile applications and scientific breakthroughs.

Simulation AI: “What does (insert AI persona here) think?” AI-based simulation will increasingly mimic human intelligence, with the ability to reason, solve problems and make decisions. You’ll see increased use here for AI research as well as for design and engineering.

AI for Earth Observation (AI4EO): It may be a small world after all, but there’s still a lot we don’t know about Mother Earth. A global AI framework would process satellite data in orbit and on the ground for rapid, if not real-time, actionable knowledge. It could create new monitoring solutions, especially for climate change, disaster response and biodiversity loss.

KIMBERLY POWELL

Vice President & General Manager, NVIDIA Healthcare

Federated Learning: The clinical community will increase its use of federated learning approaches to build robust AI models across various institutions, geographies, patient demographics and medical scanners. The sensitivity and selectivity of these models are outperforming those of AI models built at a single institution, even when there is copious data to train with. As an added bonus, researchers can collaborate on AI model creation without sharing confidential patient information. Federated learning is also beneficial for building AI models in areas where data is scarce, such as pediatrics and rare diseases.
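For readers who want to see the idea in miniature, here is a rough sketch of one common federated approach, federated averaging. It is an illustration under stated assumptions, not a description of any particular clinical deployment: the two "hospitals," their synthetic data, the tiny linear model and every function name are hypothetical. Each site trains on its own records, and only the model weights are sent back to be averaged.

```python
from typing import List, Tuple

Weights = Tuple[float, float]        # (slope, intercept) of a toy linear model
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.1, epochs: int = 5) -> Weights:
    """Train y = w*x + b on one site's private data with gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine site models, weighting each by its number of samples."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train_federated(sites: List[Dataset], rounds: int = 50) -> Weights:
    """Server loop: send out the global model, collect and average updates."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Hypothetical sites; the synthetic data follows y = 2x + 1.
hospital_a: Dataset = [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)]
hospital_b: Dataset = [(-2.0, -3.0), (2.0, 5.0)]

w, b = train_federated([hospital_a, hospital_b])
print(f"slope={w:.3f}, intercept={b:.3f}")
```

Only the weight pairs cross institutional boundaries in this sketch; the raw `(x, y)` records stay where they were collected. Real systems typically layer secure aggregation or other privacy protections on top of this basic loop.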

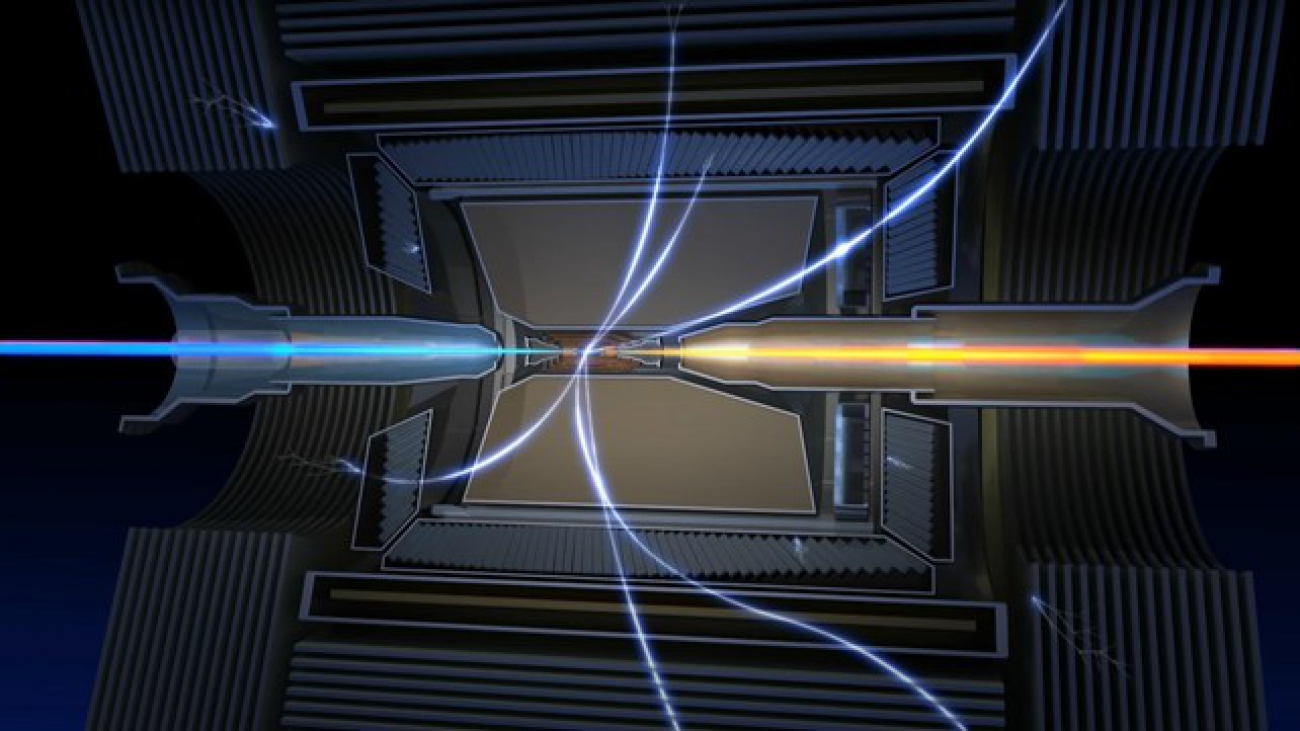

AI-Driven Drug Discovery: The COVID-19 pandemic has put a spotlight on drug discovery, which encompasses microscopic viewing of molecules and proteins, sorting through millions of chemical structures, in-silico methods for screening, protein-ligand interactions, genomic analysis, and assimilating data from structured and unstructured sources. Drug development typically takes over 10 years. In the wake of COVID, however, pharmaceutical companies, biotechs and researchers realize that accelerating traditional methods is paramount. Newly created AI-powered discovery labs with GPU-accelerated instruments and AI models will shorten time to insight, creating a computing time machine.

Smart Hospitals: The need for smart hospitals has never been more urgent. Similar to the experience at home, smart speakers and smart cameras help automate and inform activities. The technology, when used in hospitals, will help scale the work of nurses on the front lines, increase operational efficiency and provide virtual patient monitoring to predict and prevent adverse patient events.

CHARLIE BOYLE

Vice President & General Manager, NVIDIA DGX Systems

Shadow AI: Managing AI across an organization will be a hot-button internal issue if data science teams implement their own AI platforms and infrastructure without IT involvement. Avoiding shadow AI requires a centralized enterprise IT approach to infrastructure, tools and workflow, which ultimately enables faster, more successful deployments of AI applications.

AI Center of Excellence: Companies have scrambled over the past 10 years to snap up highly paid data scientists, yet their productivity has been lower than expected because of a lack of supportive infrastructure. More organizations will speed the investment return on AI by building centralized, shared infrastructure at supercomputing scale. This will facilitate the grooming and scaling of data science talent, encourage the sharing of best practices and accelerate the solving of complex AI problems.

Hybrid Infrastructure: The Internet of Things will lead decision-makers to adopt a mixed AI approach, using the public cloud (AWS, Azure, Oracle Cloud, Google Cloud) and private clouds (on-premises servers) to deliver applications faster (with lower latency, in industry parlance) to customers and partners while maintaining security by limiting the amount of sensitive data shared across networks. Hybrid approaches will also become more popular as governments adopt strict data protection laws governing the use of personal information.

KEVIN DEIERLING

Senior Vice President, NVIDIA Networking

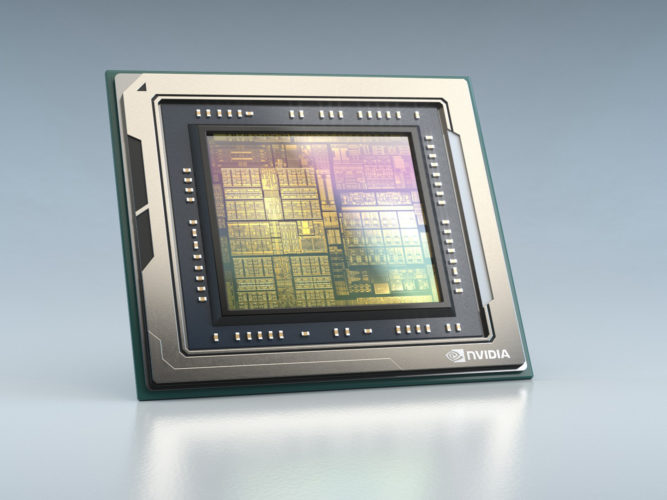

Accelerating Change in the Data Center: Security and management will be offloaded from CPUs into GPUs, SmartNICs and programmable data processing units to deliver expanded application acceleration to all enterprise workloads and provide an extra layer of security. Virtualization and scalability will be faster, while CPUs will run apps faster and offer accelerated services.

AI as a Service: Companies that are reluctant to spend time and resources investing in AI, whether for financial reasons or otherwise, will begin turning to third-party providers for experimentation. AI platform companies and startups will become key partners by providing access to software, infrastructure and potential partners.

Transformational 5G: Companies will begin defining what “the edge” is. Autonomous driving is essentially a data center in the car, allowing the AI to make instantaneous decisions while also being able to report back for training. You’ll see the same thing with robots in the warehouse and the workplace, where there will be inference at the edge and training at the core. Just as 4G spawned transformational change in transportation with Lyft and Uber, 5G will bring transformational deals and capabilities. It won’t happen all at once, but you’ll start to see the beginnings of companies seeking to take advantage of the confluence of AI, 5G and new computing platforms.

SANJA FIDLER

Director of AI, NVIDIA, and Professor, Vector Institute for Artificial Intelligence

AI for 3D Content Creation: AI will revolutionize the content creation process, offering smart tools to reduce mundane work and to empower creativity. In particular, creating 3D content for architecture, gaming, films and VR/AR has been very laborious: games like Call of Duty take at least a year to make, even with hundreds of people involved and millions budgeted.

With AI, one will be able to build virtual cities by describing them in words, and see virtual characters come to life to converse and behave in desired ways without needing to hard code the behavior. Creating a 3D asset will become as easy as snapping a photo, and modernizing and restyling old games will happen with the click of a button.

AI for Robotics Simulation: Testing robots in simulated environments is key for safety-critical applications such as self-driving cars or operating robots. Deep learning will bring simulation to the next level by learning to mimic the world from data: creating 3D environments, simulating diverse behaviors, simulating and re-simulating new or observed road scenarios, and simulating the sensors in ways that are closer to reality.

An Opportunity for Reinvention

To accomplish any or all of these tasks, organizations will have to move more quickly for internal alignment. For example, 72 percent of big AI adopters in the McKinsey survey say their companies’ AI strategy aligns with their corporate strategy, compared with 29 percent of respondents from other companies. Similarly, 65 percent of the high performers report having a clear data strategy that supports and enables AI, compared with 20 percent from other companies.

Even as the global pandemic creates uncertainty around the world, 2021 will be a time of reinvention as players large and small leverage AI to improve on their business models. More companies will operationalize AI as early results prove promising enough to commit more resources to their efforts.