As the healthcare world battles the pandemic, the medical-imaging field is gaining ground with AI, forging new partnerships and funding startup innovation. It will all be on display at RSNA, the Radiological Society of North America’s annual meeting, taking place Nov. 29 – Dec. 5.

Radiologists, healthcare organizations, developers and instrument makers at RSNA will share their latest advancements and what’s coming next — with an eye on the growing ability of AI models to integrate with medical-imaging workflows. More than half of informatics abstracts submitted to this year’s virtual conference involve AI.

In a special public address at RSNA, Kimberly Powell, NVIDIA’s VP of healthcare, will discuss how we’re working with research institutions, the healthcare industry and AI startups to bring workflow acceleration, deep learning models and deployment platforms to the medical imaging ecosystem.

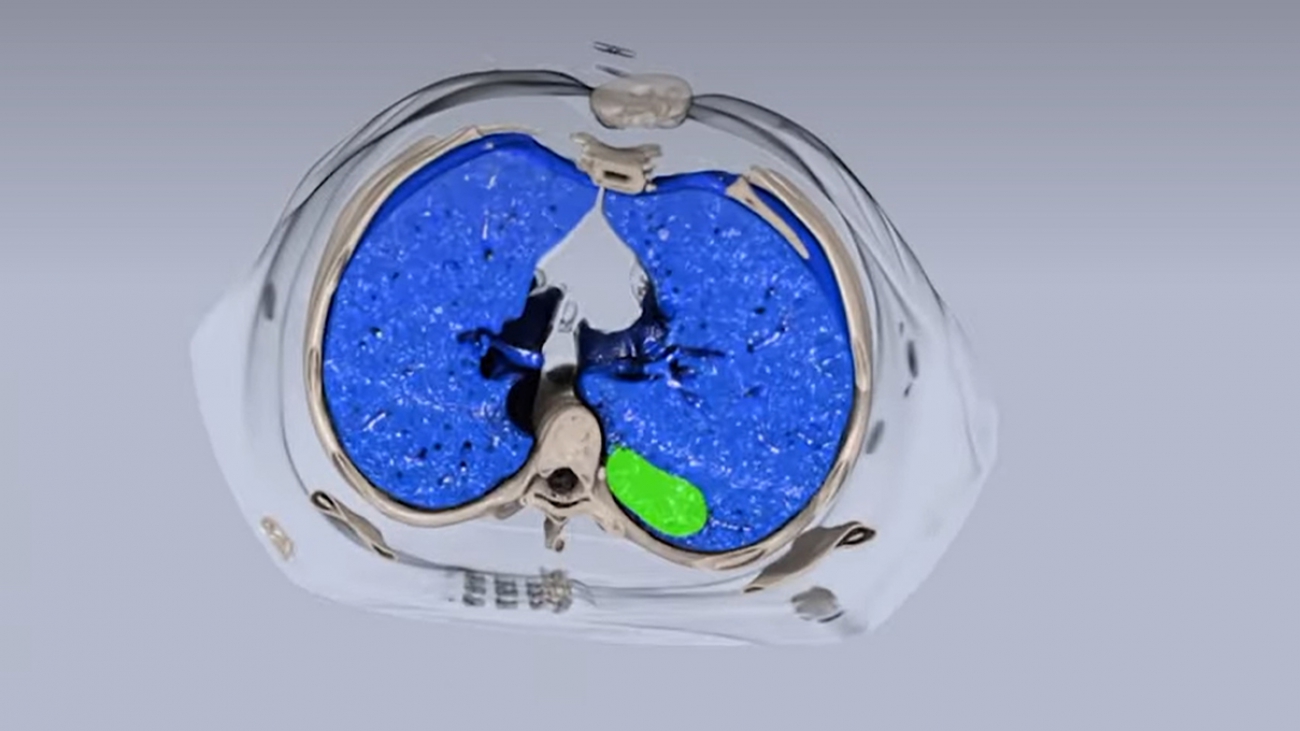

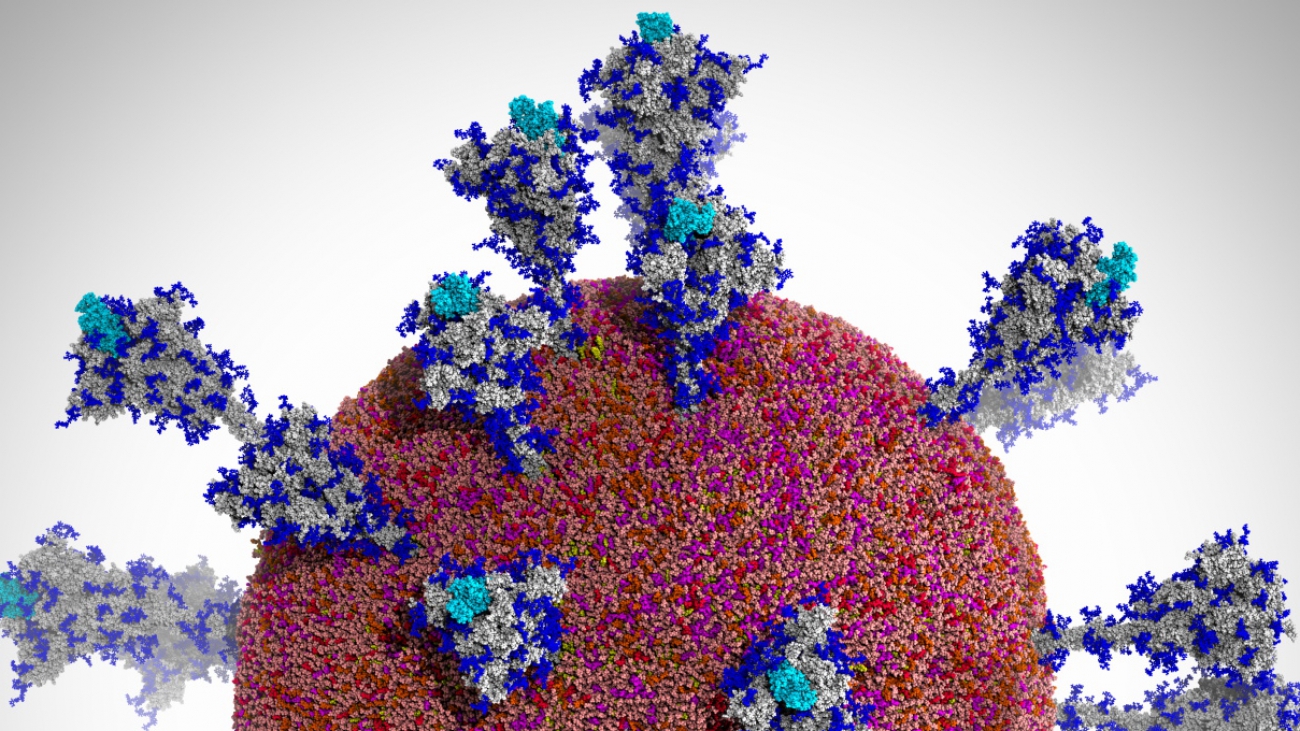

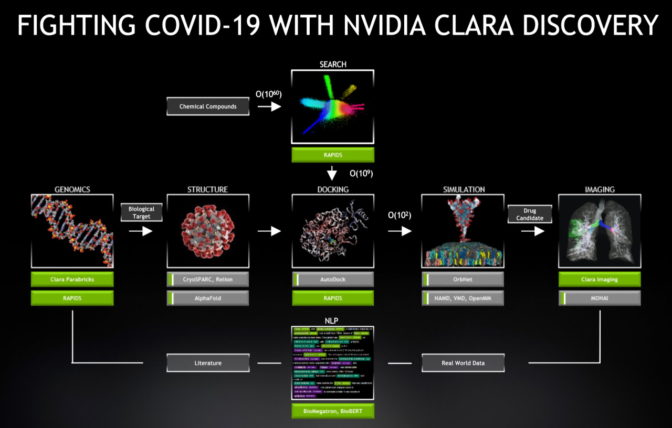

Healthcare and AI experts worldwide are putting monumental effort into developing models that can help radiologists determine the severity of COVID cases from lung scans. They’re also building platforms to smoothly integrate AI into daily workflows, and developing federated learning techniques that help hospitals work together on more robust AI models.

The NVIDIA Clara Imaging application framework is poised to advance this work with NVIDIA GPUs and AI models that can accelerate each step of the radiology workflow, including image acquisition, scan annotation, triage and reporting.

Delivering Tools to Radiologists, Developers, Hospitals

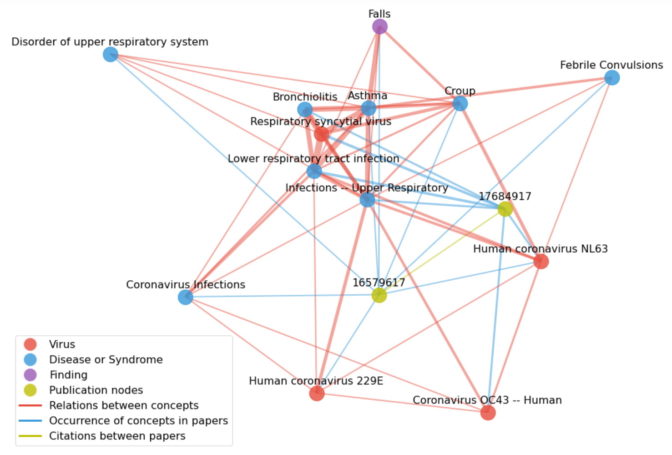

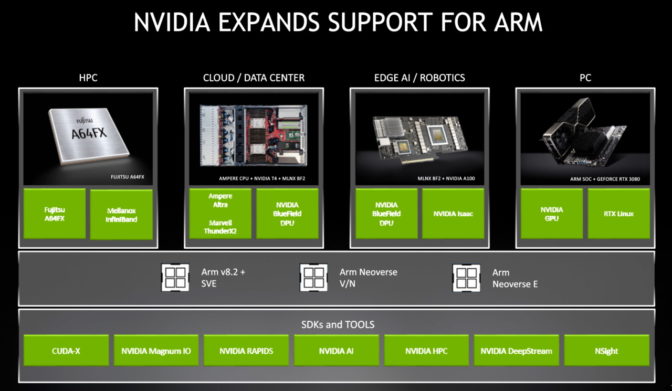

AI developers are working to bridge the gap between their models and the systems radiologists already use, with the goal of integrating deep learning insights seamlessly into tools like picture archiving and communication systems (PACS). Here’s how NVIDIA is supporting their work:

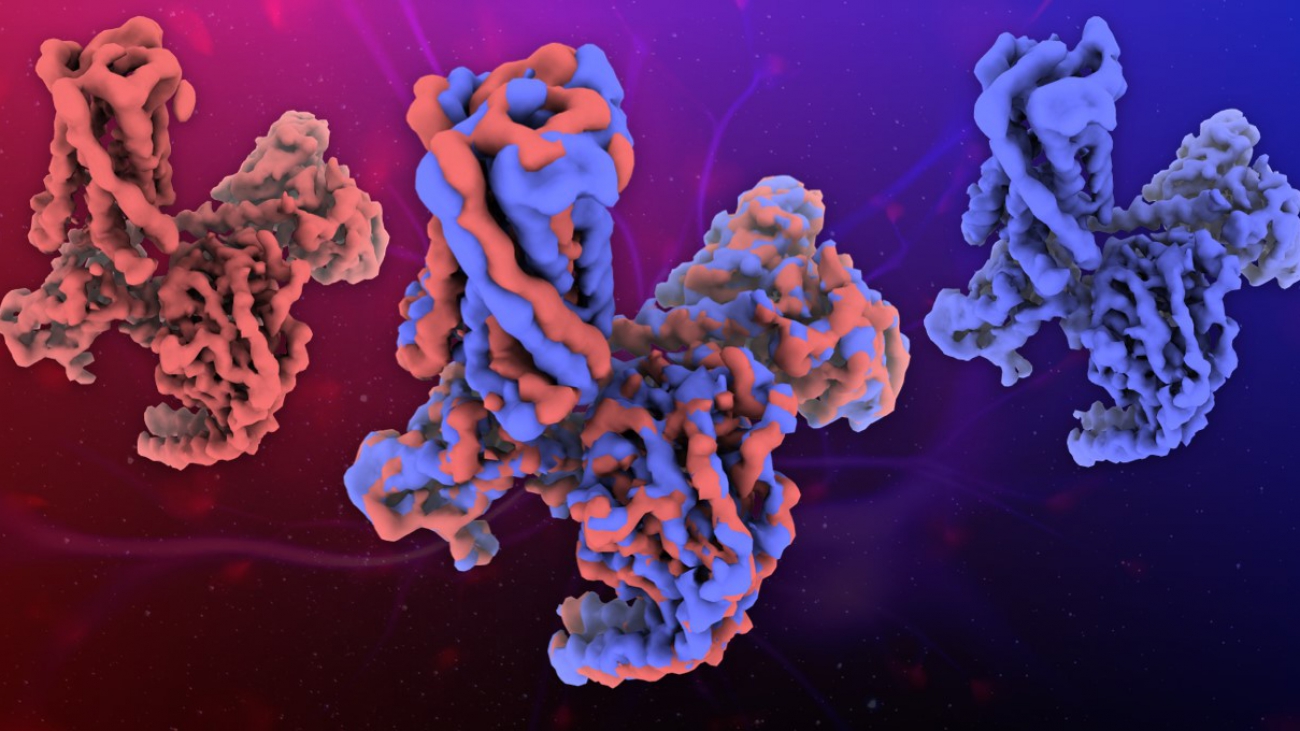

- We’ve strengthened the NVIDIA Clara application framework’s full-stack GPU-accelerated libraries and SDKs for imaging, with new pretrained models available on the NGC software hub. NVIDIA and the U.S. National Institutes of Health jointly developed AI models that can help researchers classify COVID cases from chest CT scans, and evaluate the severity of these cases.

- Using the NVIDIA Clara Deploy SDK, Mass General Brigham researchers are testing a risk assessment model that analyzes chest X-rays to determine the severity of lung disease. The tool was developed by the Athinoula A. Martinos Center for Biomedical Imaging, which has adopted NVIDIA DGX A100 systems to power its research.

- Together with King’s College London, this year we introduced MONAI, an open-source AI framework for medical imaging. Based on the Ignite and PyTorch deep learning frameworks, the modular MONAI code can be easily ported to researchers’ existing AI pipelines. So far, the GitHub project has dozens of contributors and over 1,500 stars.

- NVIDIA Clara Federated Learning enables researchers to collaborate on training robust AI models without sharing patient information. It’s been used by hospitals and academic medical centers to train models for mammogram assessment, and to assess the likelihood that patients with COVID-19 symptoms will need supplemental oxygen.
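
To make the federated learning idea above concrete, here is a minimal sketch of federated averaging, a common way to train a shared model without pooling data. It is not the NVIDIA Clara Federated Learning API: the function names, the toy linear model and the two "hospital" datasets are all hypothetical, chosen only to show that each site sends model weights, never patient records, to the aggregator.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (input, target); stands in for private patient data


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.02, epochs: int = 5) -> List[float]:
    """Hypothetical site-side step: fit y = w*x + b by gradient descent.

    Runs entirely on one site's private data and returns only the weights.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(site_weights: Sequence[Sequence[float]],
                      site_sizes: Sequence[int]) -> List[float]:
    """Aggregator step: average site weights, weighted by dataset size."""
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


def run_rounds(sites: Sequence[Sequence[Sample]], rounds: int = 300) -> List[float]:
    """Alternate local training and aggregation for a fixed number of rounds."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Each site starts from the current global model and trains locally.
        updates = [local_update(global_weights, data) for data in sites]
        # Only the weight vectors leave the sites; raw samples never do.
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


if __name__ == "__main__":
    # Two made-up sites whose data both follow y = 2x + 1.
    hospital_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
    hospital_b = [(3.0, 7.0), (4.0, 9.0)]
    print(run_rounds([hospital_a, hospital_b]))
```

Weighting the average by dataset size keeps a small site from pulling the shared model as hard as a large one. Production systems typically add secure communication and privacy safeguards on top of this basic loop.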

NVIDIA at RSNA

RSNA attendees can check out NVIDIA’s digital booth to discover more about GPU-accelerated AI in medical imaging. Hands-on training courses from the NVIDIA Deep Learning Institute are also available, covering medical imaging topics including image classification, coarse-to-fine contextual memory and data augmentation with generative networks. The following events feature NVIDIA speakers:

- NVIDIA RSNA 2020 Special Address: Tuesday, Dec. 1, at 6 p.m. CT

- The Imaging Standards You Need to Know: On demand

- Demo: Imaging AI in Practice

Over 50 members of NVIDIA Inception — our accelerator program for AI startups — will be exhibiting at RSNA, including Subtle Medical, which developed the first AI tools for medical imaging enhancement to receive FDA clearance and this week announced $12 million in Series A funding.

Another, TrainingData.io, used the NVIDIA Clara SDK to train a segmentation AI model to analyze COVID disease progression in chest CT scans. And South Korean startup Lunit recently received the European CE mark and partnered with GE Healthcare on an AI tool that flags abnormalities on chest X-rays for radiologists’ review.

Visit the NVIDIA at RSNA webpage for a full list of activities at the show. Email us to request a meeting with our deep learning experts.

The post Bringing Enterprise Medical Imaging to Life: RSNA Highlights What’s Next for Radiology appeared first on The Official NVIDIA Blog.