The popularity of public cloud offerings is evident — just look at how top cloud service providers report double-digit growth year over year.

However, application performance requirements and regulatory compliance, to name two examples, often require data to be stored locally, both to reduce distance and latency and to keep data entirely within a company’s control. Standard private clouds can meet those needs, but they may offer less flexibility, agility or on-demand capacity than the public cloud.

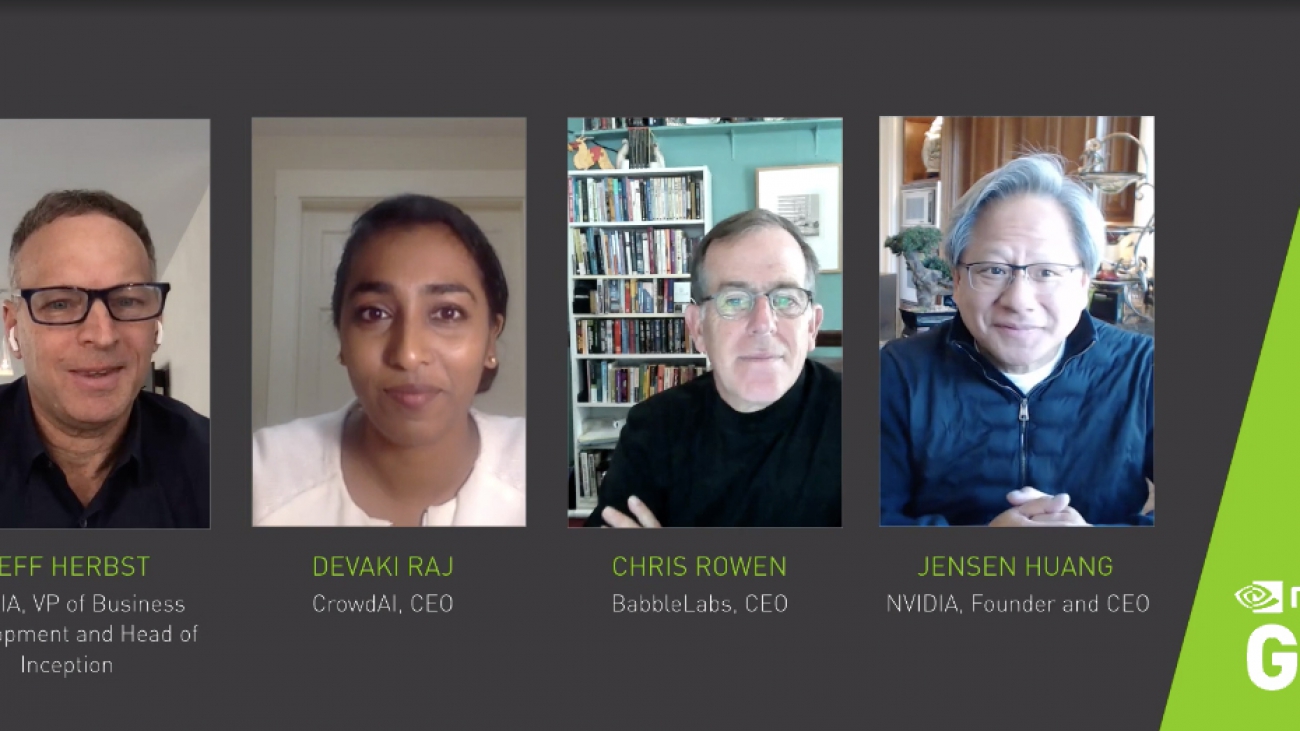

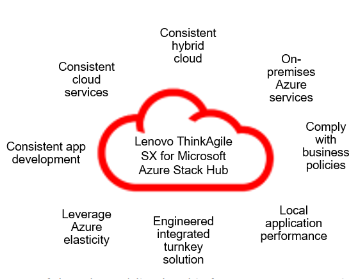

To help resolve these issues, Lenovo, Microsoft and NVIDIA have engineered a hyperconverged hybrid cloud that enables Azure cloud services within an organization’s data center.

By integrating Lenovo ThinkAgile SX, Microsoft Azure Stack Hub and NVIDIA Mellanox networking, organizations can deploy a turnkey, rack-scale cloud that’s optimized with a resilient, highly performant and secure software-defined infrastructure.

Fully Integrated Azure Stack Hub Solution

Lenovo ThinkAgile SX for Microsoft Azure Stack Hub addresses both regulatory compliance and performance concerns. Because all data is kept on secure servers in the customer’s data center, it’s much simpler for customers to comply with a country’s governance laws and to implement their own policies and practices.

Similarly, reducing the distance that data must travel lowers latency, which makes application performance goals easier to achieve. At the same time, customers can cloud-burst some workloads to the Microsoft Azure public cloud, if desired.

Lenovo, Microsoft and NVIDIA worked together to make sure everything performs right out of the box. There’s no need to worry about configuring and adjusting settings for virtual or physical infrastructure.

The power and automation of Azure Stack Hub software, the convenience and reliability of Lenovo’s advanced servers, and the high performance of NVIDIA networking combine to enable an optimized hybrid cloud. Offering the automation and flexibility of Microsoft Azure Cloud with the security and performance of on-premises infrastructure, it’s an ideal platform to:

- deliver Azure cloud services from the security of your own data center,

- enable rapid development and iteration of applications with on-premises deployment tools,

- unify application development across entire hybrid cloud environments, and

- easily move applications and data across private and public clouds.

Agility of a Hybrid Cloud

Azure Stack Hub also operates seamlessly with Azure, delivering an orchestration layer that enables the movement of data and applications to the public cloud. This hybrid cloud keeps sensitive data and applications protected on premises and offers lower latencies for accessing data. It still provides the public cloud benefits organizations may need, such as reduced costs, increased infrastructure scalability and flexibility, and protection from data loss.

A hybrid approach to cloud computing keeps all sensitive information onsite, often along with centrally used applications that depend on some of that data. With a hybrid cloud infrastructure in place, IT personnel can focus more on building proficiency in deploying and operating cloud services, such as IaaS, PaaS and SaaS, and less on managing infrastructure.

Network Performance

A hybrid cloud requires a network that can handle all data communication between clients, servers and storage. The Ethernet fabric used for networking in the Lenovo ThinkAgile SX for Microsoft Azure Stack Hub leverages NVIDIA Mellanox Spectrum Ethernet switches — powered by the industry’s highest-performing ASICs — along with NVIDIA Cumulus Linux, the most advanced open network operating system.

At 25Gb/s data rates, these switches deliver data at line rate. A fully shared buffer supports fair bandwidth allocation and predictably low latency, while traffic flow prioritization and optimization technology helps deliver data without delays. Hot-swappable redundant power supplies and fans help provide resiliency for business-sensitive traffic.

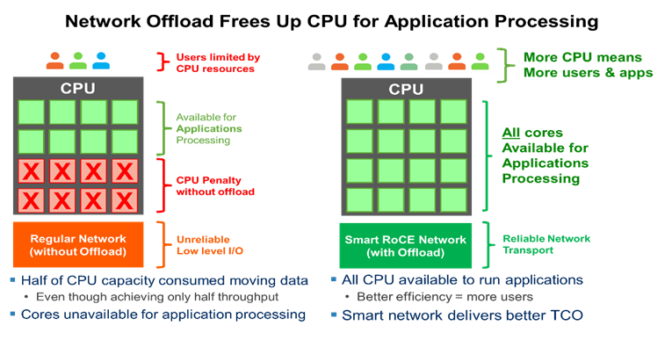

Modern networks require advanced offload capabilities, including offloads for remote direct memory access (RDMA), TCP, overlay networks (for example, VXLAN and Geneve) and software-defined storage acceleration. Handling these tasks in the network hardware frees expensive CPU cycles for user applications while improving the user experience.

To handle the high-speed communications demands of Azure Stack Hub, Lenovo configured compute nodes with dual-port 10/25/100GbE NVIDIA Mellanox ConnectX-4 Lx, ConnectX-5 or ConnectX-6 Dx NICs. The ConnectX NICs are designed to address cloud, virtualized infrastructure, security and network storage challenges. They provide native hardware support for RDMA over Converged Ethernet (RoCE), offer stateless TCP offloads, accelerate overlay networks and support NVIDIA GPUDirect technology to maximize performance of AI and machine learning workloads. All of this results in much-needed gains in infrastructure efficiency.

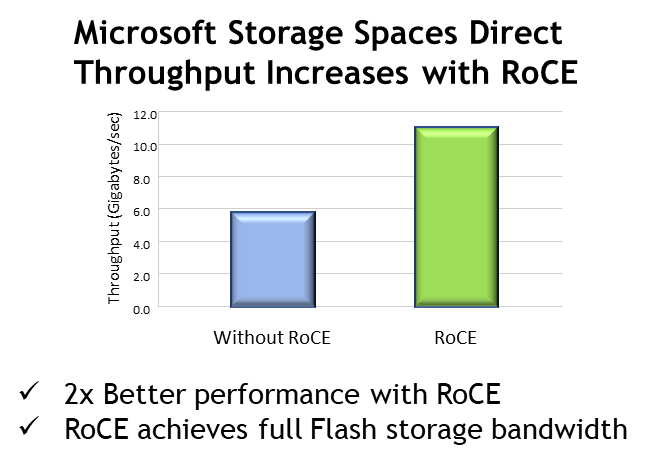

RoCE for Improved Efficiency

Microsoft Azure Stack Hub leverages Storage Spaces Direct (S2D) and Microsoft’s Server Message Block (SMB) 3.0 protocol with SMB Direct. SMB Direct uses high-speed RoCE to transfer large amounts of data with little CPU intervention. SMB Multichannel allows servers to use multiple network connections simultaneously and provides fault tolerance through the automatic discovery of network paths.

Together, these two features allow NVIDIA RoCE-enabled ConnectX Ethernet NICs to deliver line-rate performance and optimize data transfer between server and storage over standard Ethernet. Customers with Lenovo ThinkAgile SX servers or the Lenovo ThinkAgile SX Azure Hub can deploy storage on secure file servers while delivering the highest performance. As a result, S2D is extremely fast, with disaggregated file server performance almost equaling that of locally attached storage.
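To make the benefit of using multiple connections concrete, here is a minimal back-of-the-envelope sketch in Python. It is not taken from Lenovo, Microsoft or NVIDIA material: the function name `ideal_transfer_seconds` and the 100 GB payload are hypothetical, and the model assumes decimal gigabytes, perfect load balancing across links and no protocol overhead, so real transfers will be slower.

```python
def ideal_transfer_seconds(size_gigabytes: float,
                           link_gbps: float,
                           active_links: int = 1) -> float:
    """Best-case seconds to move a payload over one or more Ethernet links.

    Hypothetical helper for illustration only. Assumes decimal gigabytes,
    perfect balancing across links and zero protocol overhead.
    """
    if active_links < 1:
        raise ValueError("at least one active link is required")
    gigabits = size_gigabytes * 8  # 1 byte = 8 bits
    return gigabits / (link_gbps * active_links)


if __name__ == "__main__":
    payload_gb = 100  # hypothetical payload size in GB
    # Both ports of a dual-port 25GbE NIC in use, then one port lost.
    for links in (2, 1):
        seconds = ideal_transfer_seconds(payload_gb, 25, links)
        print(f"{links} x 25GbE: {seconds:.1f} s")
```

Under these idealized assumptions, the payload moves in 16 seconds when both 25GbE ports are in use and in 32 seconds if one path is lost. That is the intuition behind pairing multiple connections with fault tolerance: the transfer slows down instead of stopping.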

Run More Workloads

By using intelligent hardware accelerators and offloads, the NVIDIA RoCE-enabled NICs offload I/O tasks from the CPU, freeing up resources to accelerate application performance instead of making data wait for the attention of a busy CPU.

The result is lower latency and improved CPU efficiency. This maximizes performance in Microsoft Azure Stack Hub deployments by leaving the CPU available to run other application processes. Efficiency gets a boost since users can host more VMs per physical server, support more VDI instances and complete SQL Server queries more quickly.

A Transformative Experience with a ThinkAgile Advantage

Lenovo ThinkAgile solutions include a comprehensive portfolio of software and services that supports the full lifecycle of infrastructure. At every stage — planning, deploying, supporting, optimizing and end-of-life — Lenovo provides the expertise and services needed to get the most from technology investments.

This includes single-point-of-contact support for all the hardware and software used in the solution, including Microsoft’s Azure Stack Hub and the ConnectX NICs. Customers never have to worry about who to call — Lenovo takes calls and drives them to resolution.

Learn more about Lenovo ThinkAgile SX for Microsoft Azure Stack Hub with NVIDIA Mellanox networking.

The post On Cloud Mine: Lenovo, Microsoft and NVIDIA Bring Cloud Computing on Premises with Azure Stack Hub appeared first on The Official NVIDIA Blog.