Back to school was destined to look different this year.

With the world adapting to COVID-19, safety measures are preventing a return to in-person teaching in many places. Meanwhile, students learning through conventional video conferencing systems often find the content difficult to read, or find that teachers block the words written on presentation boards.

Faced with these challenges, educators at Prefectural University of Hiroshima in Japan envisioned a high-quality remote learning system with additional features not possible with traditional video conferencing.

They chose a distance-learning solution from Sony that links lecturers and students across their three campuses. It uses AI to make it easy for presenters anywhere to engage their audiences and impart information using captivating video. Thanks to these innovations, lecturers at Prefectural University can now teach students simultaneously on three campuses linked by a secure virtual private network.

AI Helps Lecturers Get Smarter About Remote Learning

At the heart of Prefectural’s distance learning system is Sony’s REA-C1000 Edge Analytics Appliance, which was developed using the NVIDIA Jetson Edge AI platform. The appliance lets teachers and speakers quickly create dynamic video presentations without using expensive video production gear or learning sophisticated software applications.

Sony’s exclusive AI algorithms run inside the appliance. These deep learning models employ techniques such as automatic tracking, zooming and cropping to allow non-specialists to produce engaging, professional-quality video in real time.

Users simply connect the Edge Analytics Appliance to a camera that can pan, tilt and zoom; a PC; and a display or recording device. In Prefectural’s case, multiple cameras capture what a lecturer writes on the board, as well as questions and contributions from students, at up to full HD resolution depending on the size of the lecture hall.

Managing all of this technology is made simple for the lecturers. A touchscreen panel facilitates intuitive operation of the system without the need for complex adjustment of camera settings.

Teachers Achieve New Levels of Transparency

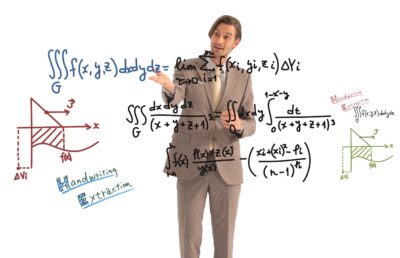

One of the landmark applications in the Edge Analytics Appliance is handwriting extraction, which lets students experience lectures more fully, rather than having to jot down notes.

The application uses a camera to record text and figures as an instructor writes them by hand on a whiteboard or blackboard, and then immediately draws them as if they are floating in front of the instructor.

Students viewing the lecture live from a remote location or from a recording afterward can see and recognize the text and diagrams, even if the original handwriting is unclear or hidden by the instructor’s body. The combined processing power of the compact, energy-efficient Jetson TX2 and Sony’s moving/unmoving object detection technology makes the transformation from the board to the screen seamless.

Handwriting extraction is also customizable: the transparency of the floating text and figures can be adjusted, and characters that are faint or hard to read can be highlighted in color, making them even more legible than the original content written on the board.
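To give a feel for what adjustable transparency means in practice, here is a minimal sketch of blending previously extracted strokes over a live frame. It is an illustration only, not Sony's algorithm: the function name `overlay_strokes`, the `opacity` and `stroke_value` parameters, and the tiny grayscale grids are all assumptions made for this example.

```python
# Illustrative sketch only; not Sony's implementation.
# Frames are small grayscale grids (lists of rows, values 0-255)
# so the example runs with the standard library alone.

def overlay_strokes(frame, stroke_mask, opacity, stroke_value=255):
    """Blend extracted handwriting over the current camera frame.

    frame:        current frame, possibly with the instructor hiding the board
    stroke_mask:  same shape as frame; True where handwriting was extracted
    opacity:      0.0 (strokes invisible) to 1.0 (strokes fully opaque)
    stroke_value: brightness used to draw the strokes (hypothetical highlight)
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0.0 and 1.0")
    blended = []
    for frame_row, mask_row in zip(frame, stroke_mask):
        row = []
        for pixel, is_stroke in zip(frame_row, mask_row):
            if is_stroke:
                # Standard alpha blend: weighted mix of stroke and frame pixel.
                pixel = round(opacity * stroke_value + (1.0 - opacity) * pixel)
            row.append(pixel)
        blended.append(row)
    return blended


frame = [[55, 55, 55],
         [55, 55, 55]]
mask = [[False, True, False],
        [False, True, True]]
half_transparent = overlay_strokes(frame, mask, opacity=0.5)
```

Raising `opacity` toward 1.0 makes the strokes stand out more strongly against whatever is in front of the board, while 0.0 leaves the frame unchanged.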

Create Engaging Content Without Specialist Resources

Another innovative application is Chroma key-less CG overlay, using state-of-the-art algorithms from Sony, like moving-object detection, to produce class content without the need for large-scale video editing equipment.

Like a personal greenscreen for presenters, the application seamlessly places the speaker in front of any animations, diagrams or graphs being presented.

Previously, moving-object detection algorithms required for this kind of compositing could only be run on professional workstations. With Jetson TX2, Sony was able to include this powerful deep learning-based feature within the compact, simple design of the Edge Analytics Appliance.
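For readers curious about the general idea of compositing without a greenscreen, the sketch below uses plain background differencing, a much simpler stand-in for the deep learning-based moving-object detection described above. The function names, the `threshold` value and the one-row frames are assumptions chosen for illustration, not details of Sony's product.

```python
# Illustrative sketch only: simple background differencing, swapped in
# for the appliance's moving-object detection, which is not public.

def foreground_mask(frame, background, threshold=30):
    """Mark pixels that differ from a reference background by more than threshold."""
    return [
        [abs(pixel - bg) > threshold for pixel, bg in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def composite(frame, graphics, mask):
    """Keep the presenter's pixels where mask is True; show graphics elsewhere."""
    return [
        [pixel if is_fg else gfx for pixel, gfx, is_fg in zip(f_row, g_row, m_row)]
        for f_row, g_row, m_row in zip(frame, graphics, mask)
    ]


background = [[10, 10, 10]]   # empty stage, captured once
frame = [[12, 200, 10]]       # presenter occupies the middle pixel
graphics = [[90, 91, 92]]     # slide or animation to show behind the presenter

mask = foreground_mask(frame, background)
output = composite(frame, graphics, mask)
```

A fixed threshold like this breaks down under changing light or camera motion, which is one reason production systems rely on more sophisticated detection.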

A Virtual Camera Operator

Numerous additional algorithms within the appliance include those for color-pattern matching, shape recognition, pose recognition and more. These enable features such as:

- PTZ Auto Tracking — automatically tracks an instructor’s movements and ensures they stay in focus.

- Focus Area Cropping — crops a specified portion from a video recorded on a single camera and creates effects as if the cropped portion were recorded on another camera. This can be used to generate, for example, a picture-in-picture effect, where an audience can simultaneously see a close-up of the presenter speaking against a wide shot of the rest of the stage.

- Close Up by Gesture — automatically zooms in on and records students or audience members who stand up in preparation to ask a question.
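The picture-in-picture effect mentioned under Focus Area Cropping can be pictured as a crop followed by a paste. The sketch below is a simplified illustration under stated assumptions: `crop` and `picture_in_picture` are hypothetical helpers, frames are small grids of numbers, and the inset is pasted at its original size rather than scaled as a real close-up would be.

```python
# Illustrative sketch only; helper names and frame layout are assumptions.

def crop(frame, top, left, height, width):
    """Return the height x width region of frame starting at (top, left)."""
    return [row[left:left + width] for row in frame[top:top + height]]


def picture_in_picture(wide, inset, top, left):
    """Paste inset onto a copy of the wide shot with its corner at (top, left)."""
    if top + len(inset) > len(wide) or left + len(inset[0]) > len(wide[0]):
        raise ValueError("inset does not fit inside the wide shot")
    result = [row[:] for row in wide]  # copy so the wide shot is not modified
    for offset, inset_row in enumerate(inset):
        result[top + offset][left:left + len(inset_row)] = inset_row
    return result


# A 4x4 "wide shot" whose pixel values encode row and column (row * 10 + col).
wide = [[r * 10 + c for c in range(4)] for r in range(4)]
close_up = crop(wide, top=1, left=1, height=2, width=2)
combined = picture_in_picture(wide, close_up, top=0, left=2)
```

Because both views come from the same recording, a single camera is enough to produce what looks like a two-camera shot.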

With the high-performance Jetson platform, the Edge Analytics Appliance can easily handle a wide range of applications like these. The result is like a virtual camera operator that allows people to create engaging, professional-looking video presentations without the expertise or expense previously required to do so.

Officials at Prefectural University of Hiroshima say the new distance learning initiative has already led to greater student and teacher satisfaction with remote learning. Linking the university’s three campuses through the system is also fostering a sense of unity among the campuses.

“We chose Sony’s Edge Analytics Appliance for our new distance learning design because it helps us realize a realistic and comfortable learning environment for students by clearly showing the contents on the board and encouraging discussion. It was also appealing as a cost-effective solution as teachers can simply operate without additional staff,” said Kyousou Kurisu, director of public university corporation, Prefectural University of Hiroshima.

Sony plans to continually update applications available on the Edge Analytics Appliance. So, like any student, the system will only get better over time.

The post AI in Schools: Sony Reimagines Remote Learning with Artificial Intelligence appeared first on The Official NVIDIA Blog.