As businesses and schools around the world consider reopening, they’re taking safety precautions to mitigate the lingering threat of COVID-19, often by checking the temperature of each individual entering their facilities.

Fever is a common warning sign for the virus (and the seasonal flu), but manual temperature-taking with infrared thermometers takes time and requires workers stationed at a building’s entrances to collect temperature readings. AI solutions can speed the process and make it contactless, sending real-time alerts to facilities management teams when visitors with elevated temperatures are detected.

Central California-based IntelliSite Corp. and its recently acquired startup, Deep Vision AI, have developed a temperature screening application that can scan over 100 people a minute. Temperature readings are accurate within a tenth of a degree Celsius. And customers can get up and running with the app within a few hours, with an AI platform running on NVIDIA GPUs on premises or in the cloud for inference.

“Our software platform has multiple AI modules, including foot traffic counting and occupancy monitoring, as well as vehicle recognition,” said Agustin Caverzasi, co-founder of Deep Vision AI, and now president of IntelliSite’s AI business unit. “Adding temperature detection was a natural, easy step for us.”

The temperature screening tool has been deployed in several healthcare facilities and is being tested at U.S. airports, amusement parks and education facilities. Deep Vision is part of NVIDIA Inception, a program that helps startups working in AI and data science get to market faster.

“Deep Vision AI joined Inception at the very beginning, and our engineering and research teams received support with resources like GPUs for training,” Caverzasi said. “It was really helpful for our company’s initial development.”

COVID Risk or Coffee Cup? Building AI for Temperature Tracking

As the pandemic took hold, and social distancing became essential, Caverzasi’s team saw that the technology they’d spent years developing was more relevant than ever.

“The need to protect people from harmful viruses has never been greater,” he said. “With our preexisting AI modules, we can monitor in real time the occupancy levels in a store or a hospital’s waiting room, and trigger alerts before the maximum occupancy is reached in a given area.”

With governments and health organizations advising temperature checking, the startup applied its existing AI capabilities to thermal cameras for the first time. In doing so, the team had to fine-tune the model so it wouldn’t be fooled by false positives, such as when a person shows up red on a thermal camera because of a cup of hot coffee.
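To make the coffee-cup problem concrete, here is a minimal sketch of one way to avoid that kind of false positive: read the temperature only inside a detected face region rather than across the whole thermal frame. This is an illustration, not Deep Vision AI's actual method. The function names, the frame layout (a grid of Celsius readings), the face box and the 38.0 degree threshold are all assumptions made for the example.

```python
from typing import List, Tuple

# Hypothetical box format: (top, left, bottom, right), bottom/right exclusive.
Box = Tuple[int, int, int, int]


def face_temperature(frame: List[List[float]], face_box: Box) -> float:
    """Return the hottest reading inside the face box, ignoring the rest of the frame."""
    top, left, bottom, right = face_box
    region = [t for row in frame[top:bottom] for t in row[left:right]]
    if not region:
        raise ValueError("face box does not overlap the frame")
    return max(region)


def is_elevated(frame: List[List[float]], face_box: Box,
                threshold_c: float = 38.0) -> bool:
    """Flag a person only if the face region meets the (assumed) threshold."""
    return face_temperature(frame, face_box) >= threshold_c


# Toy 4x4 thermal frame in degrees Celsius: a face in the top-left corner
# and a hot coffee cup in the bottom-right corner.
frame = [
    [36.5, 36.8, 22.0, 22.0],
    [36.6, 36.9, 22.0, 22.0],
    [22.0, 22.0, 22.0, 22.0],
    [22.0, 22.0, 60.0, 61.0],
]
face_box = (0, 0, 2, 2)  # assumed output of a separate face detector

whole_frame_max = max(t for row in frame for t in row)
print(whole_frame_max)                    # hottest pixel anywhere (the cup)
print(face_temperature(frame, face_box))  # hottest pixel on the face
print(is_elevated(frame, face_box))
```

A naive check on the hottest pixel in the frame would flag this person because of the cup, while the face-only check would not. A production system would also need the face detector itself, camera calibration and handling of partially hidden faces, none of which is shown here.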

This AI model is paired with one of IntelliSite’s IoT solutions called human-based monitoring, or hBM. The hBM platform includes a hardware component: a mobile cart mounted with a thermal camera, monitor and Dell Precision tower workstation for inference. The temperature detection algorithms can now scan five people at the same time.

Double Quick: Faster, Easier Screening

The workstation uses the NVIDIA Quadro RTX 4000 GPU for real-time inference on thermal data from the live camera view. This reduces manual scanning time for healthcare customers by 80 percent, and drops the total cost of conducting temperature scans by 70 percent.

Facilities using hBM can also choose to access data remotely and monitor multiple sites, using either an on-premises Dell PowerEdge R740 server with NVIDIA T4 Tensor Core GPUs, or GPU resources through the IntelliSite Cloud Engine.

If businesses and hospitals are also taking a second temperature measurement with a thermometer, these readings can be logged in the hBM system, which can maintain records for over a million screenings. Facilities managers can configure alerts via text message or email when high temperatures are detected.
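The logging and alerting flow described above can be sketched in a few lines. This is a hypothetical illustration rather than the hBM system's real data model or API: the `Screening` and `ScreeningLog` names, the 38.0 degree threshold and the choice to prefer the thermometer reading when one exists are all assumptions. Delivery by text message or email is left out, so the sketch only builds the alert text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Screening:
    site: str
    thermal_c: float                       # reading from the thermal camera
    thermometer_c: Optional[float] = None  # optional second, manual reading


@dataclass
class ScreeningLog:
    threshold_c: float = 38.0  # assumed alert threshold, not from the article
    records: List[Screening] = field(default_factory=list)
    alerts: List[str] = field(default_factory=list)

    def add(self, screening: Screening) -> None:
        """Store the screening and queue an alert if the reading is elevated."""
        self.records.append(screening)
        # Assumption: a manual thermometer reading, when present, overrides
        # the thermal camera reading.
        reading = (screening.thermometer_c
                   if screening.thermometer_c is not None
                   else screening.thermal_c)
        if reading >= self.threshold_c:
            self.alerts.append(
                f"{screening.site}: elevated temperature {reading:.1f} C"
            )


log = ScreeningLog()
log.add(Screening("Lobby", 38.6))                      # thermal only, elevated
log.add(Screening("Lobby", 38.2, thermometer_c=37.1))  # cleared by thermometer
log.add(Screening("Ward B", 36.7))                     # normal

for message in log.alerts:
    print(message)
```

Keeping both readings on each record means a facility could later compare how often the thermal camera and the thermometer disagree.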

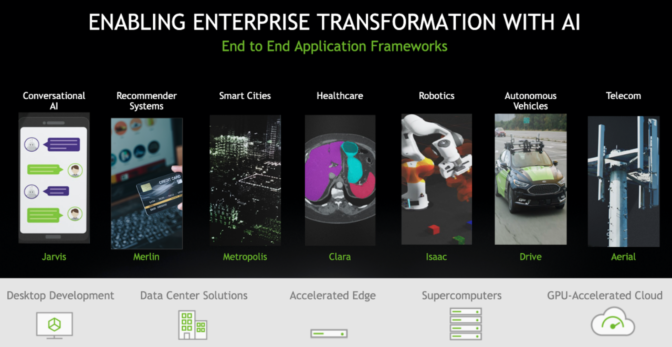

The Deep Vision developer team, based in Córdoba, Argentina, also had to adapt their AI models that use regular camera data to detect people wearing face masks. They use the NVIDIA Metropolis application framework for smart cities, including the NVIDIA DeepStream SDK for intelligent video analytics and NVIDIA TensorRT to accelerate inference.

Deep Vision and IntelliSite next plan to integrate the temperature screening AI with facial recognition models, so customers can use the application for employee registration once their temperature has been checked.

IntelliSite is a member of the NVIDIA Clara Guardian ecosystem, bringing edge AI to healthcare facilities. Visit our COVID page to explore how other startups are using AI and accelerated computing to fight the pandemic.

FDA disclaimer: Thermal measurements are designed as a triage tool and should not be the sole means of diagnosing high-risk individuals for any viral threat. Elevated thermal readings should be confirmed with a secondary, clinical-grade evaluation tool. FDA recommends screening individuals one at a time, not in groups.