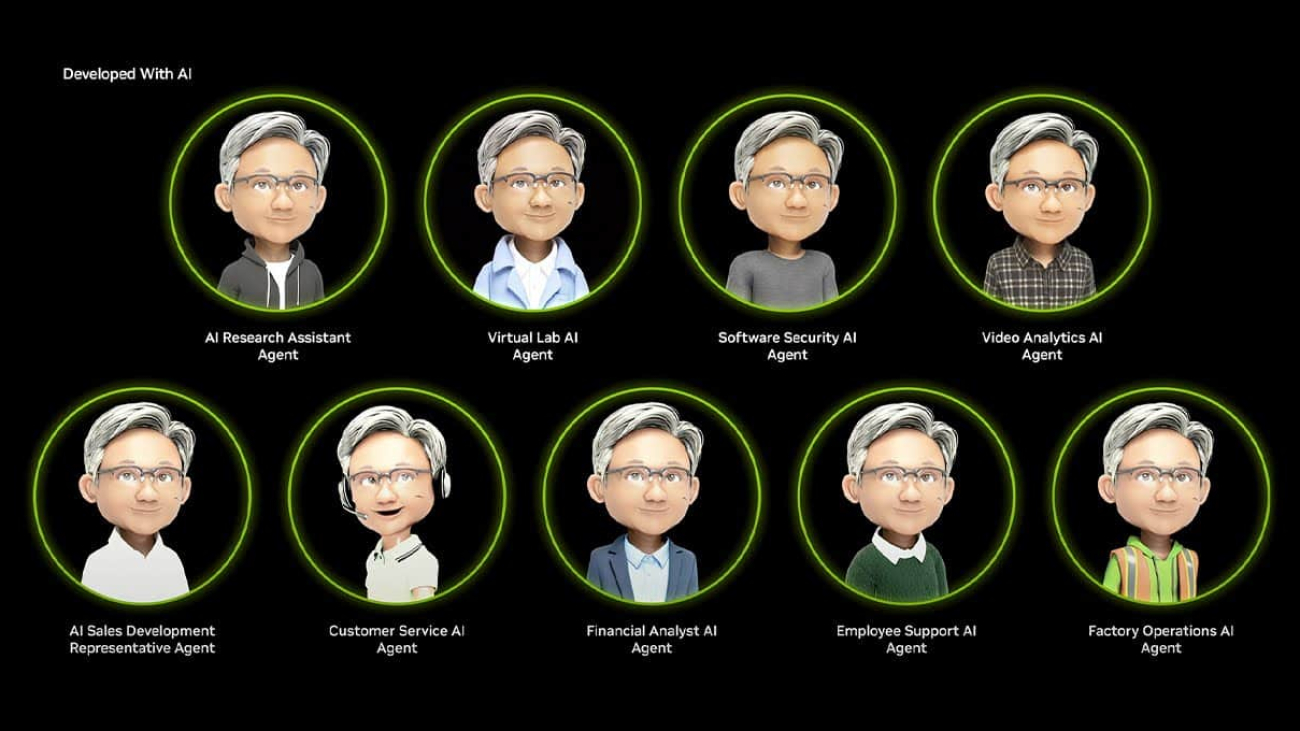

AI agents are poised to transform productivity for the world’s billion knowledge workers with “knowledge robots” that can accomplish a variety of tasks. To develop AI agents, enterprises need to address critical concerns like trust, safety, security and compliance.

New NVIDIA NIM microservices for AI guardrails — part of the NVIDIA NeMo Guardrails collection of software tools — are portable, optimized inference microservices that help companies improve the safety, precision and scalability of their generative AI applications.

Central to the orchestration of the microservices is NeMo Guardrails, part of the NVIDIA NeMo platform for curating, customizing and guardrailing AI. NeMo Guardrails helps developers integrate and manage AI guardrails in large language model (LLM) applications. Industry leaders Amdocs, Cerence AI and Lowe’s are among those using NeMo Guardrails to safeguard AI applications.

Developers can use the NIM microservices to build more secure, trustworthy AI agents that provide safe, appropriate responses within context-specific guidelines and are bolstered against jailbreak attempts. Deployed in customer service across industries like automotive, finance, healthcare, manufacturing and retail, the agents can boost customer satisfaction and trust.

One of the new microservices, built for moderating content safety, was trained using the Aegis Content Safety Dataset — one of the highest-quality, human-annotated data sources in its category. Curated and owned by NVIDIA, the dataset is publicly available on Hugging Face and includes over 35,000 human-annotated data samples flagged for AI safety and jailbreak attempts to bypass system restrictions.

NVIDIA NeMo Guardrails Keeps AI Agents on Track

AI is rapidly boosting productivity for a broad range of business processes. In customer service, it’s helping resolve customer issues up to 40% faster. However, scaling AI for customer service and other AI agents requires secure models that prevent harmful or inappropriate outputs and ensure the AI application behaves within defined parameters.

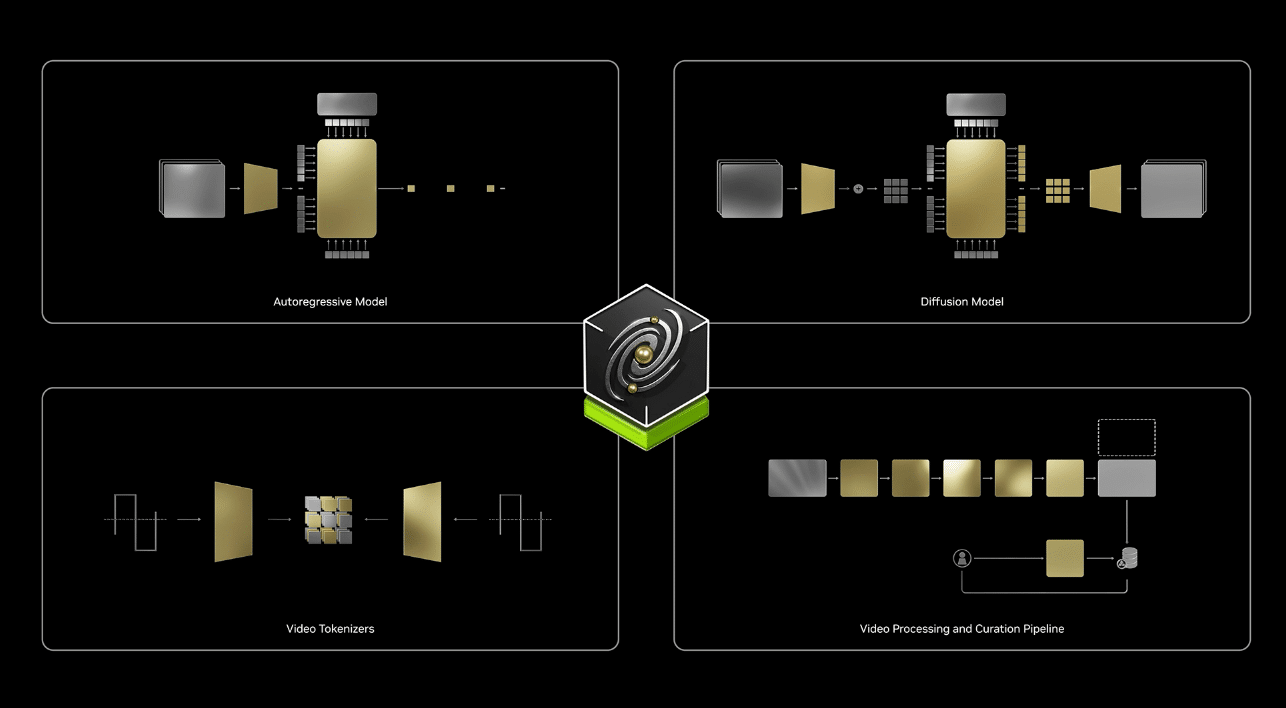

NVIDIA has introduced three new NIM microservices for NeMo Guardrails that help AI agents operate at scale while maintaining controlled behavior:

By applying multiple lightweight, specialized models as guardrails, developers can cover gaps that may occur when only more general global policies and protections exist — as a one-size-fits-all approach doesn’t properly secure and control complex agentic AI workflows.

Small language models, like those in the NeMo Guardrails collection, offer lower latency and are designed to run efficiently, even in resource-constrained or distributed environments. This makes them ideal for scaling AI applications in industries such as healthcare, automotive and manufacturing, in locations like hospitals or warehouses.

Industry Leaders and Partners Safeguard AI With NeMo Guardrails

NeMo Guardrails, available to the open-source community, helps developers orchestrate multiple AI software policies — called rails — to enhance LLM application security and control. It works with NVIDIA NIM microservices to offer a robust framework for building AI systems that can be deployed at scale without compromising on safety or performance.

Amdocs, a leading global provider of software and services to communications and media companies, is harnessing NeMo Guardrails to enhance AI-driven customer interactions by delivering safer, more accurate and contextually appropriate responses.

“Technologies like NeMo Guardrails are essential for safeguarding generative AI applications, helping make sure they operate securely and ethically,” said Anthony Goonetilleke, group president of technology and head of strategy at Amdocs. “By integrating NVIDIA NeMo Guardrails into our amAIz platform, we are enhancing the platform’s ‘Trusted AI’ capabilities to deliver agentic experiences that are safe, reliable and scalable. This empowers service providers to deploy AI solutions safely and with confidence, setting new standards for AI innovation and operational excellence.”

Cerence AI, a company specializing in AI solutions for the automotive industry, is using NVIDIA NeMo Guardrails to help ensure its in-car assistants deliver contextually appropriate, safe interactions powered by its CaLLM family of large and small language models.

“Cerence AI relies on high-performing, secure solutions from NVIDIA to power our in-car assistant technologies,” said Nils Schanz, executive vice president of product and technology at Cerence AI. “Using NeMo Guardrails helps us deliver trusted, context-aware solutions to our automaker customers and provide sensible, mindful and hallucination-free responses. In addition, NeMo Guardrails is customizable for our automaker customers and helps us filter harmful or unpleasant requests, securing our CaLLM family of language models from unintended or inappropriate content delivery to end users.”

Lowe’s, a leading home improvement retailer, is leveraging generative AI to build on the deep expertise of its store associates. By providing enhanced access to comprehensive product knowledge, these tools empower associates to answer customer questions, helping them find the right products to complete their projects and setting a new standard for retail innovation and customer satisfaction.

“We’re always looking for ways to help associates to above and beyond for our customers,” said Chandhu Nair, senior vice president of data, AI and innovation at Lowe’s. “With our recent deployments of NVIDIA NeMo Guardrails, we ensure AI-generated responses are safe, secure and reliable, enforcing conversational boundaries to deliver only relevant and appropriate content.”

To further accelerate AI safeguards adoption in AI application development and deployment in retail, NVIDIA recently announced at the NRF show that its NVIDIA AI Blueprint for retail shopping assistants incorporates NeMo Guardrails microservices for creating more reliable and controlled customer interactions during digital shopping experiences.

Consulting leaders Taskus, Tech Mahindra and Wipro are also integrating NeMo Guardrails into their solutions to provide their enterprise clients safer, more reliable and controlled generative AI applications.

NeMo Guardrails is open and extensible, offering integration with a robust ecosystem of leading AI safety model and guardrail providers, as well as AI observability and development tools. It supports integration with ActiveFence’s ActiveScore, which filters harmful or inappropriate content in conversational AI applications, and provides visibility, analytics and monitoring.

Hive, which provides its AI-generated content detection models for images, video and audio content as NIM microservices, can be easily integrated and orchestrated in AI applications using NeMo Guardrails.

The Fiddler AI Observability platform easily integrates with NeMo Guardrails to enhance AI guardrail monitoring capabilities. And Weights & Biases, an end-to-end AI developer platform, is expanding the capabilities of W&B Weave by adding integrations with NeMo Guardrails microservices. This enhancement builds on Weights & Biases’ existing portfolio of NIM integrations for optimized AI inferencing in production.

NeMo Guardrails Offers Open-Source Tools for AI Safety Testing

Developers ready to test the effectiveness of applying safeguard models and other rails can use NVIDIA Garak — an open-source toolkit for LLM and application vulnerability scanning developed by the NVIDIA Research team.

With Garak, developers can identify vulnerabilities in systems using LLMs by assessing them for issues such as data leaks, prompt injections, code hallucination and jailbreak scenarios. By generating test cases involving inappropriate or incorrect outputs, Garak helps developers detect and address potential weaknesses in AI models to enhance their robustness and safety.

Availability

NVIDIA NeMo Guardrails microservices, as well as NeMo Guardrails for rail orchestration and the NVIDIA Garak toolkit, are now available for developers and enterprises. Developers can get started building AI safeguards into AI agents for customer service using NeMo Guardrails with this tutorial.

See notice regarding software product information.

Read More

Tank

Tank Damage

Damage Support

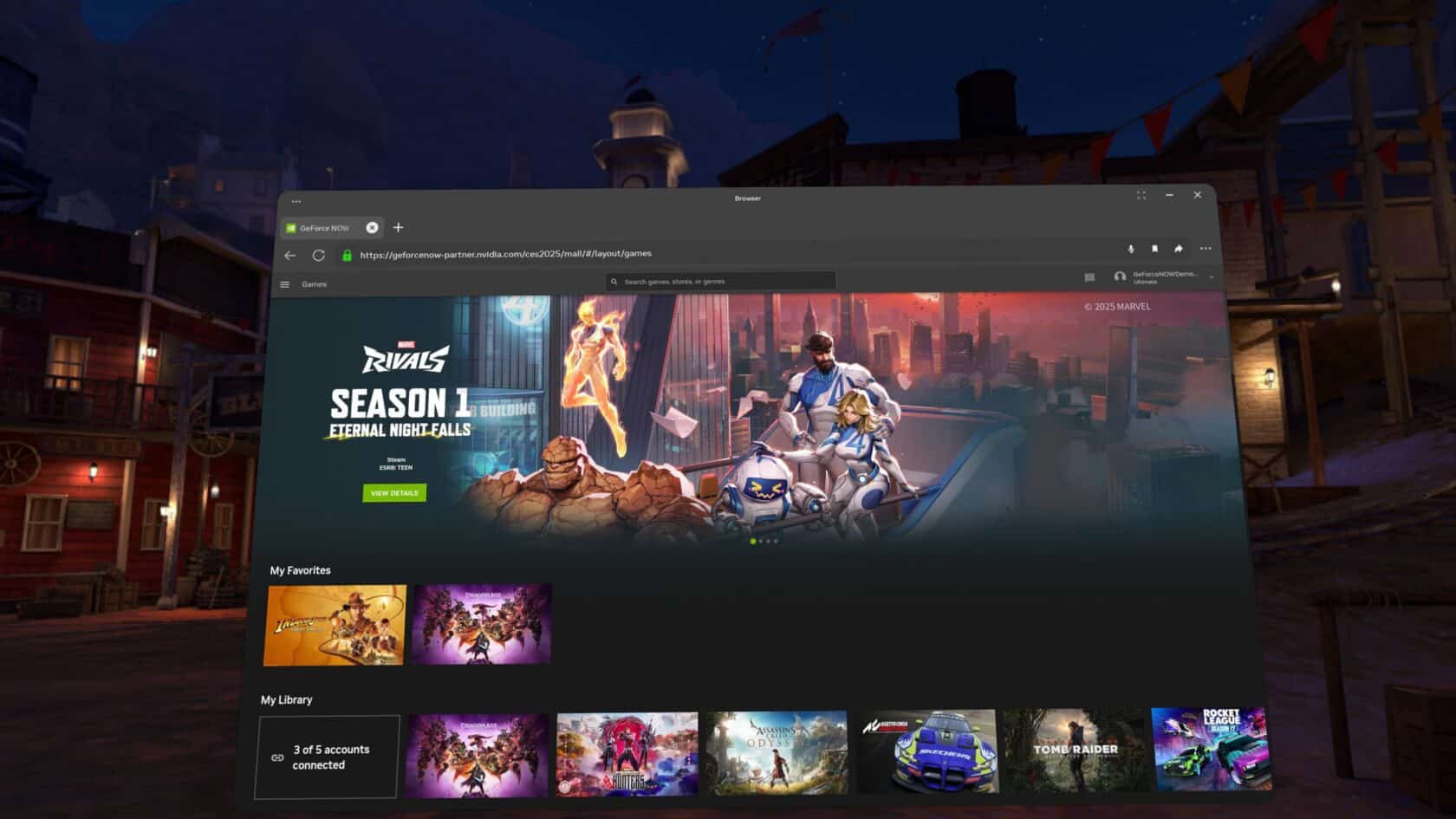

Support NVIDIA GeForce NOW (@NVIDIAGFN)

NVIDIA GeForce NOW (@NVIDIAGFN)