NVIDIA founder and CEO Jensen Huang kicked off CES 2025 with a 90-minute keynote that included new products to advance gaming, autonomous vehicles, robotics, and agentic AI.

AI has been “advancing at an incredible pace,” he said before an audience of more than 6,000 packed into the Michelob Ultra Arena in Las Vegas.

“It started with perception AI: understanding images, words and sounds. Then generative AI: creating text, images and sound,” Huang said. Now, we’re entering the era of “physical AI, AI that can perceive, reason, plan and act.”

NVIDIA GPUs and platforms are at the heart of this transformation, Huang explained, enabling breakthroughs across industries, including gaming, robotics and autonomous vehicles (AVs).

Huang’s keynote showcased how NVIDIA’s latest innovations are enabling this new era of AI, with several groundbreaking announcements.

Huang started off his talk by reflecting on NVIDIA’s three-decade journey. In 1999, NVIDIA invented the programmable GPU. Since then, modern AI has fundamentally changed how computing works, he said. “Every single layer of the technology stack has been transformed, an incredible transformation, in just 12 years.”

Revolutionizing Graphics With GeForce RTX 50 Series

“GeForce enabled AI to reach the masses, and now AI is coming home to GeForce,” Huang said.

With that, he introduced the NVIDIA GeForce RTX 5090 GPU, the most powerful GeForce RTX GPU so far, with 92 billion transistors and delivering 3,352 trillion AI operations per second (TOPS).

“Here it is — our brand-new GeForce RTX 50 series, Blackwell architecture,” Huang said, holding the blacked-out GPU aloft and noting how it’s able to harness advanced AI to enable breakthrough graphics. “The GPU is just a beast.”

“Even the mechanical design is a miracle,” Huang said, noting that the graphics card has two cooling fans.

More variations in the GPU series are coming. The GeForce RTX 5090 and GeForce RTX 5080 desktop GPUs are scheduled to be available Jan. 30. The GeForce RTX 5070 Ti and GeForce RTX 5070 desktop GPUs are slated to be available starting in February. Laptop GPUs are expected in March.

DLSS 4 introduces Multi Frame Generation, working in unison with the complete suite of DLSS technologies to boost performance by up to 8x. NVIDIA also unveiled NVIDIA Reflex 2, which can reduce PC latency by up to 75%.

The latest generation of DLSS can generate three additional frames for every frame we calculate, Huang explained. “As a result, we’re able to render at incredibly high performance, because AI does a lot less computation.”
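The arithmetic behind that statement can be sketched in a few lines. This is an idealized model, not NVIDIA's implementation: the function names and the 30 fps input are assumptions made for illustration, and the sketch ignores the cost of generating frames as well as any gains from upscaling.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int = 3) -> float:
    """Idealized displayed frame rate when each rendered frame is
    followed by a fixed number of AI-generated frames."""
    if rendered_fps < 0 or generated_per_rendered < 0:
        raise ValueError("inputs must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


def rendered_share(generated_per_rendered: int = 3) -> float:
    """Fraction of displayed frames that were conventionally rendered."""
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be non-negative")
    return 1 / (1 + generated_per_rendered)


if __name__ == "__main__":
    # Hypothetical example: a game rendering 30 frames per second.
    print(displayed_fps(30.0))   # 120.0
    print(rendered_share())      # 0.25
```

With three generated frames per rendered frame, only one in four displayed frames goes through the full rendering pipeline in this simplified view.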

RTX Neural Shaders use small neural networks to improve textures, materials and lighting in real-time gameplay. RTX Neural Faces and RTX Hair advance real-time face and hair rendering, using generative AI to animate the most realistic digital characters ever. RTX Mega Geometry increases the number of ray-traced triangles by up to 100x, providing more detail.

Advancing Physical AI With Cosmos

In addition to advancements in graphics, Huang introduced the NVIDIA Cosmos world foundation model platform, describing it as a game-changer for robotics and industrial AI.

The next frontier of AI is physical AI, Huang explained. He likened this moment to the transformative impact of large language models on generative AI.

“The ChatGPT moment for general robotics is just around the corner,” he said.

Like large language models, world foundation models are fundamental to advancing robot and AV development, yet not all developers have the expertise and resources to train their own, Huang said.

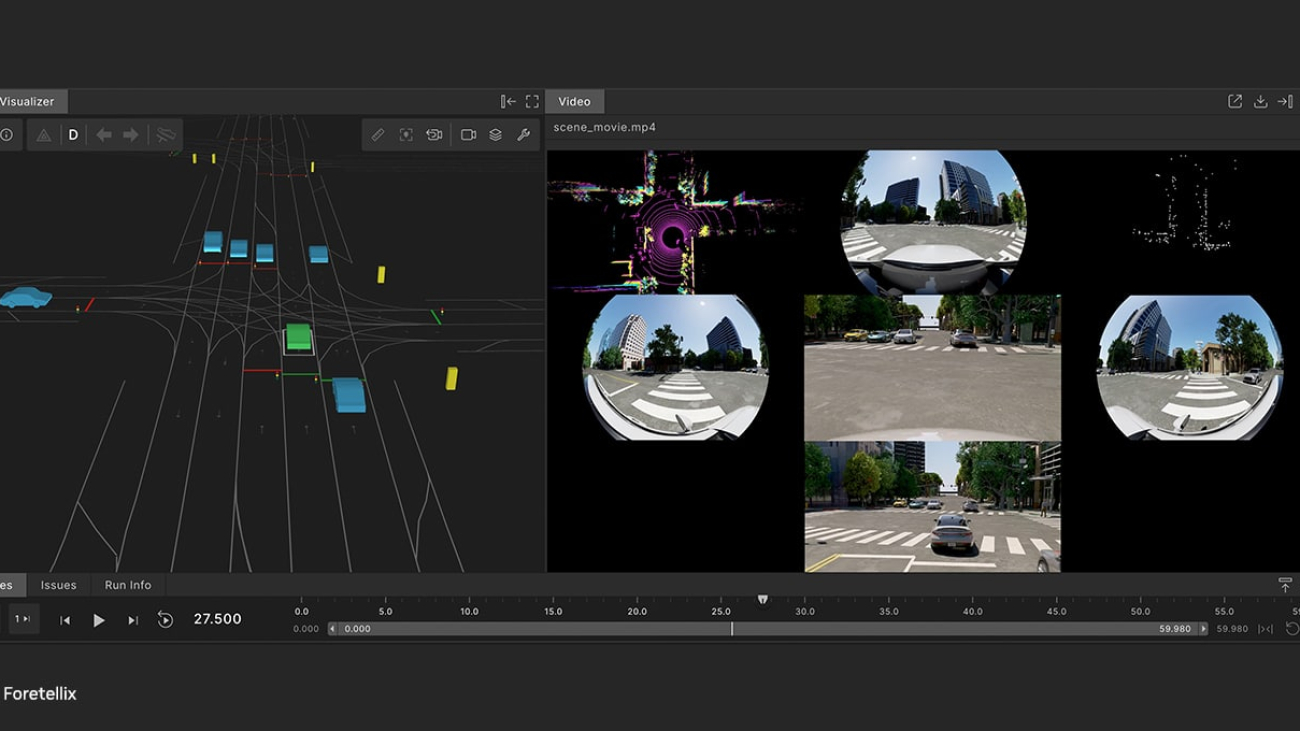

Cosmos integrates generative models, tokenizers, and a video processing pipeline to power physical AI systems like AVs and robots.

Cosmos aims to bring the power of foresight and multiverse simulation to AI models, enabling them to simulate every possible future and select optimal actions.

Cosmos models ingest text, image or video prompts and generate virtual world states as videos, Huang explained. “Cosmos generations prioritize the unique requirements of AV and robotics use cases like real-world environments, lighting and object permanence.”

Leading robotics and automotive companies, including 1X, Agile Robots, Agility, Figure AI, Foretellix, Fourier, Galbot, Hillbot, IntBot, Neura Robotics, Skild AI, Virtual Incision, Waabi and XPENG, along with ridesharing giant Uber, are among the first to adopt Cosmos.

In addition, Hyundai Motor Group is adopting NVIDIA AI and Omniverse to create safer, smarter vehicles, supercharge manufacturing and deploy cutting-edge robotics.

Cosmos is open license and available on GitHub.

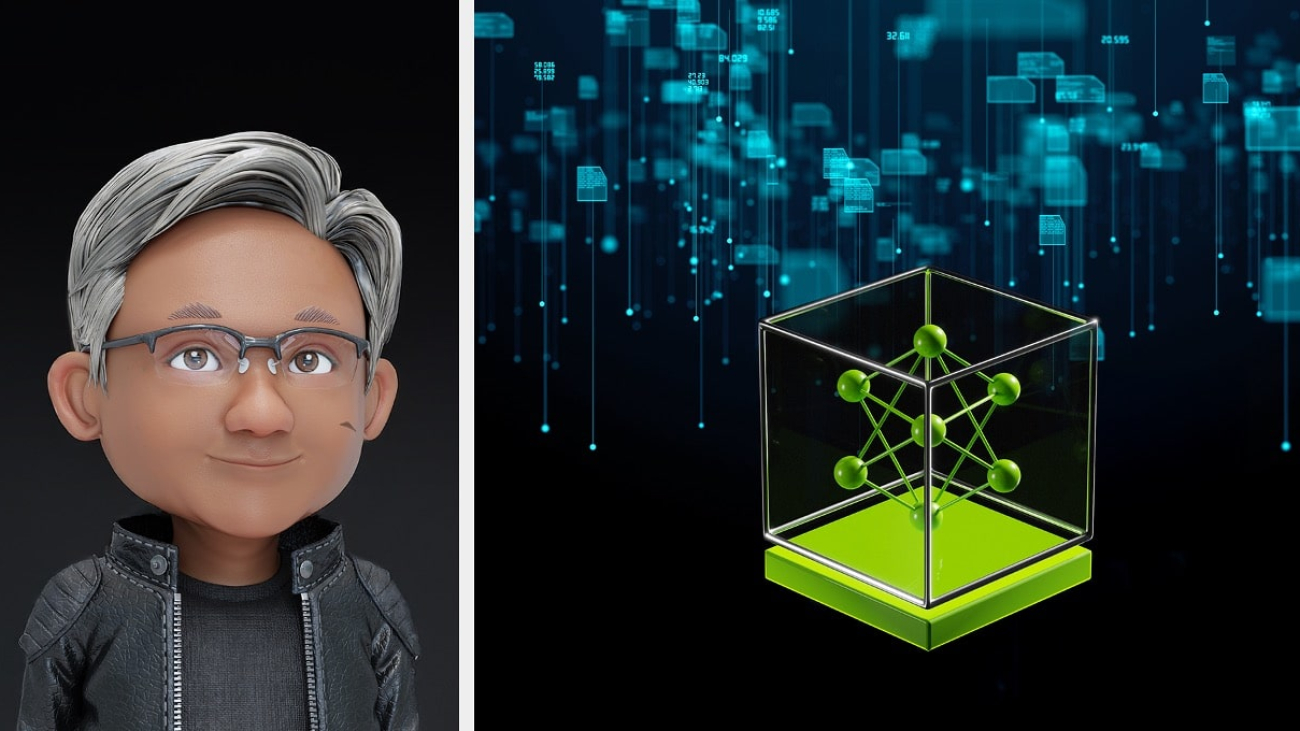

Empowering Developers With AI Foundation Models

Beyond robotics and autonomous vehicles, NVIDIA is empowering developers and creators with AI foundation models.

Huang introduced AI foundation models for RTX PCs that supercharge digital humans, content creation, productivity and development.

“These AI models run in every single cloud because NVIDIA GPUs are now available in every single cloud,” Huang said. “It’s available in every single OEM, so you could literally take these models, integrate them into your software packages, create AI agents and deploy them wherever the customers want to run the software.”

These models — offered as NVIDIA NIM microservices — are accelerated by the new GeForce RTX 50 Series GPUs.

The GPUs have what it takes to run these swiftly, adding support for FP4 computing, boosting AI inference by up to 2x and enabling generative AI models to run locally in a smaller memory footprint compared with previous-generation hardware.
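To see why a lower-precision number format shrinks the memory footprint, the rough sketch below compares weight storage at 16, 8 and 4 bits per weight. The 12-billion-parameter model size and the function name are assumptions chosen for illustration. The estimate counts only the weights and ignores activations, caches and any scaling metadata a real quantization scheme would add.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to store model weights, in gigabytes
    (1 GB = 1e9 bytes). Counts weights only."""
    if num_params < 0 or bits_per_weight <= 0:
        raise ValueError("num_params must be >= 0 and bits_per_weight > 0")
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 12e9  # hypothetical 12-billion-parameter model
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, each halving of the bits per weight halves the storage required for the weights, so a 4-bit format needs roughly a quarter of the space of a 16-bit one.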

Huang explained the potential of new tools for creators: “We’re creating a whole bunch of blueprints that our ecosystem could take advantage of. All of this is completely open source, so you could take it and modify the blueprints.”

Top PC manufacturers and system builders are launching NIM-ready RTX AI PCs with GeForce RTX 50 Series GPUs. “AI PCs are coming to a home near you,” Huang said.

While these tools bring AI capabilities to personal computing, NVIDIA is also advancing AI-driven solutions in the automotive industry, where safety and intelligence are paramount.

Innovations in Autonomous Vehicles

Huang announced the NVIDIA DRIVE Hyperion AV platform, built on the new NVIDIA AGX Thor system-on-a-chip (SoC), designed for generative AI models and delivering advanced functional safety and autonomous driving capabilities.

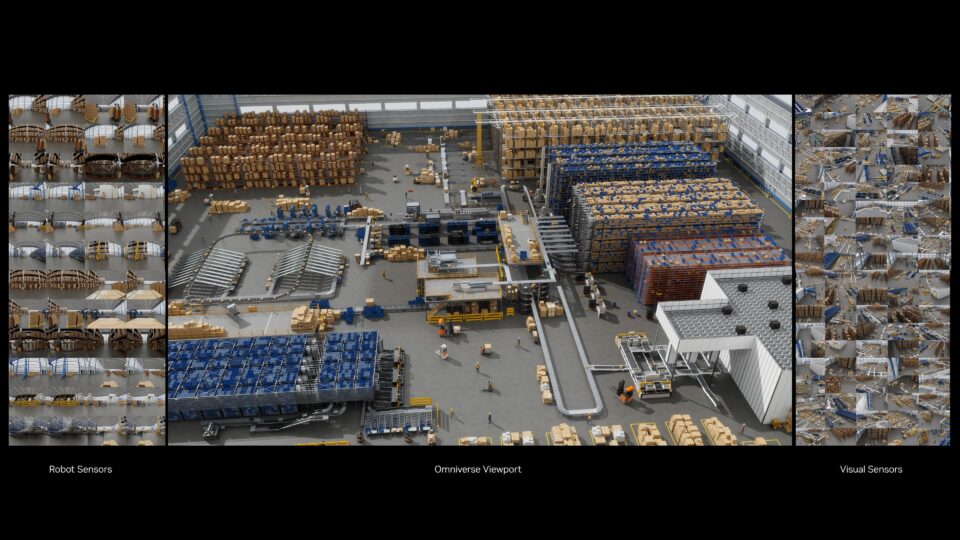

“The autonomous vehicle revolution is here,” Huang said. “Building autonomous vehicles, like all robots, requires three computers: NVIDIA DGX to train AI models, Omniverse to test drive and generate synthetic data, and DRIVE AGX, a supercomputer in the car.”

DRIVE Hyperion, the first end-to-end AV platform, integrates advanced SoCs, a sensor suite, and an active safety and level 2 driving stack for next-gen vehicles, with adoption by automotive safety pioneers such as Mercedes-Benz, JLR and Volvo Cars.

Huang highlighted the critical role of synthetic data in advancing autonomous vehicles. Real-world data is limited, so synthetic data is essential for training the autonomous vehicle data factory, he explained.

Powered by NVIDIA Omniverse AI models and Cosmos, this approach “generates synthetic driving scenarios that enhance training data by orders of magnitude.”

Using Omniverse and Cosmos, NVIDIA’s AI data factory can scale “hundreds of drives into billions of effective miles,” Huang said, dramatically increasing the datasets needed for safe and advanced autonomous driving.

“We are going to have mountains of training data for autonomous vehicles,” he added.

Toyota, the world’s largest automaker, will build its next-generation vehicles on the NVIDIA DRIVE AGX Orin, running the safety-certified NVIDIA DriveOS operating system, Huang said.

“Just as computer graphics was revolutionized at such an incredible pace, you’re going to see the pace of AV development increasing tremendously over the next several years,” Huang said. These vehicles will offer functionally safe, advanced driving assistance capabilities.

Agentic AI and Digital Manufacturing

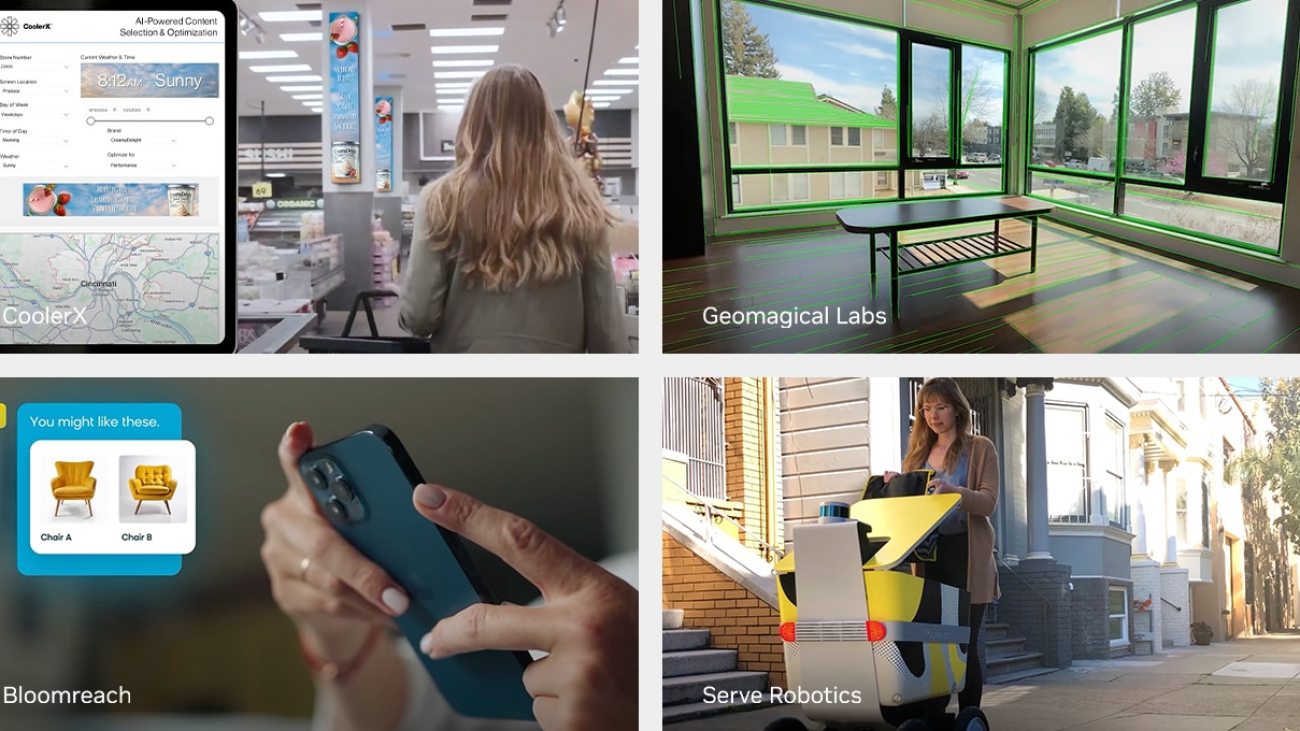

NVIDIA and its partners have launched AI Blueprints for agentic AI, including PDF-to-podcast for efficient research and video search and summarization for analyzing large quantities of video and images — enabling developers to build, test and run AI agents anywhere.

AI Blueprints empower developers to deploy custom agents for automating enterprise workflows. This new category of partner blueprints integrates NVIDIA AI Enterprise software, including NVIDIA NIM microservices and NVIDIA NeMo, with platforms from leading providers like CrewAI, Daily, LangChain, LlamaIndex and Weights & Biases.

Additionally, Huang announced the new Llama Nemotron models.

Developers can use NVIDIA NIM microservices to build AI agents for tasks like customer support, fraud detection, and supply chain optimization.

Available as NVIDIA NIM microservices, the models can supercharge AI agents on any accelerated system.

NVIDIA NIM microservices streamline video content management, boosting efficiency and audience engagement in the media industry.

Moving beyond digital applications, NVIDIA’s innovations are paving the way for AI to revolutionize the physical world with robotics.

“All of the enabling technologies that I’ve been talking about are going to make it possible for us in the next several years to see very rapid breakthroughs, surprising breakthroughs, in general robotics.”

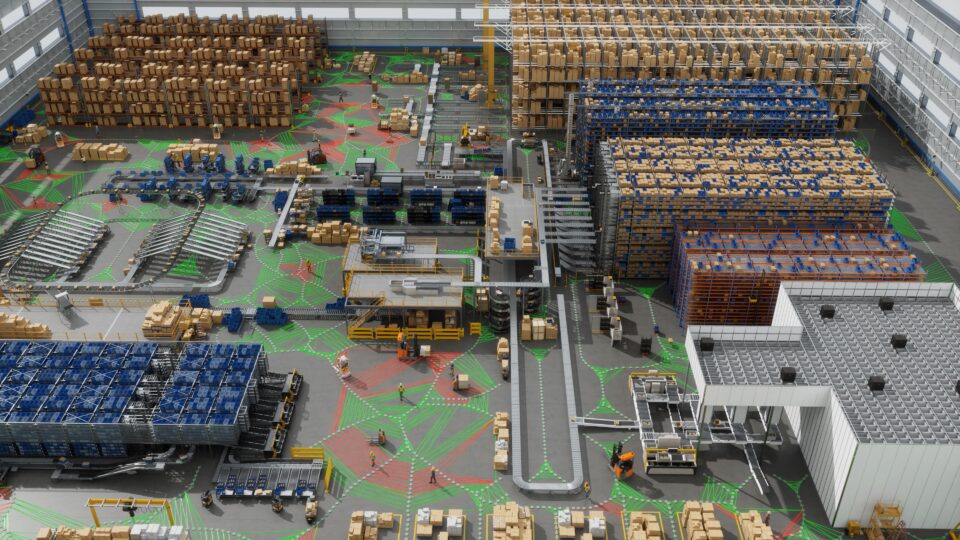

In manufacturing, the NVIDIA Isaac GR00T Blueprint for synthetic motion generation will help developers generate exponentially large amounts of synthetic motion data to train their humanoids using imitation learning.

Huang emphasized the importance of training robots efficiently, using NVIDIA’s Omniverse to generate millions of synthetic motions for humanoid training.

The Mega blueprint enables large-scale simulation of robot fleets, adopted by leaders like Accenture and KION for warehouse automation.

These AI tools set the stage for NVIDIA’s latest innovation: a personal AI supercomputer called Project DIGITS.

NVIDIA Unveils Project DIGITS

Putting NVIDIA Grace Blackwell on every desk and at every AI developer’s fingertips, Huang unveiled NVIDIA Project DIGITS.

“I have one more thing that I want to show you,” Huang said. “None of this would be possible if not for this incredible project that we started about a decade ago. Inside the company, it was called Project DIGITS — deep learning GPU intelligence training system.”

Huang highlighted the legacy of NVIDIA’s AI supercomputing journey, telling the story of how in 2016 he delivered the first NVIDIA DGX system to OpenAI. “And obviously, it revolutionized artificial intelligence computing.”

The new Project DIGITS takes this mission further. “Every software engineer, every engineer, every creative artist — everybody who uses computers today as a tool — will need an AI supercomputer,” Huang said.

Huang revealed that Project DIGITS, powered by the GB10 Grace Blackwell Superchip, represents NVIDIA’s smallest yet most powerful AI supercomputer. “This is NVIDIA’s latest AI supercomputer,” Huang said, showcasing the device. “It runs the entire NVIDIA AI stack — all of NVIDIA software runs on this. DGX Cloud runs on this.”

The compact yet powerful Project DIGITS is expected to be available in May.

A Year of Breakthroughs

“It’s been an incredible year,” Huang said as he wrapped up the keynote. Huang highlighted NVIDIA’s major achievements: Blackwell systems, physical AI foundation models, and breakthroughs in agentic AI and robotics.

“I want to thank all of you for your partnership,” Huang said.
