TLDR: We introduce RoboTool, which enables robots to use tools creatively with large language models and solves long-horizon hybrid discrete-continuous planning problems under environment- and embodiment-related constraints.

Tool use is an essential hallmark of advanced intelligence. Some animals can use tools to achieve goals that are infeasible without them. For example, crows solve complex physical puzzles using a series of tools, and apes use a tree branch to crack open nuts or a stick to fish for termites. Beyond using tools for their intended purpose and following established procedures, using tools in creative and unconventional ways provides more flexible solutions, although it places far greater demands on cognitive ability.

In robotics, creative tool use is also a crucial yet very demanding capability because it requires the all-around ability to predict the outcome of an action, reason about which tools to use, and plan how to use them. In this work, we explore the question: can we enable such creative tool-use capability in robots? We identify that creative robot tool use solves a complex long-horizon planning task with constraints related to the environment and the robot’s capacity. For example, the task may be to "grasp a milk carton" while the carton lies outside the robotic arm’s workspace, or to "walk to the other sofa" while a gap in the way exceeds the quadrupedal robot’s walking capability.

Task and motion planning (TAMP) is a common framework for solving such long-horizon planning tasks. It combines low-level continuous motion planning from classic robotics with high-level discrete task planning to solve complex planning tasks that are difficult to address in either domain alone. Existing literature shows that it can handle tool use in a static environment with optimization-based approaches such as logic-geometric programming. However, this optimization approach generally requires a long computation time for tasks with many objects and task planning steps, because the search space grows. In addition, classical TAMP methods are limited to the family of tasks that can be expressed in formal logic and symbolic representation, which makes them hard for non-experts to use.

Recently, large language models (LLMs) have been shown to encode vast knowledge beneficial to robotics tasks in reasoning, planning, and acting. TAMP methods with LLMs can bypass the computational burden of the explicit optimization process in classical TAMP. Prior works show that LLMs can adeptly dissect tasks given either clear or ambiguous language descriptions and instructions. However, it is still unclear how to use LLMs to solve more complex tasks that require reasoning about implicit constraints imposed by the robot’s embodiment and its surrounding physical world.

Methods

In this work, we are interested in solving language-instructed long-horizon robotics tasks with implicitly activated physical constraints. By providing LLMs with adequate numerical semantic information in natural language, we observe that LLMs can identify the activated constraints induced by the spatial layout of objects in the scene and the robot’s embodiment limits, suggesting that LLMs may maintain knowledge and reasoning capability about the 3D physical world. Furthermore, our comprehensive tests reveal that LLMs are not only adept at employing tools to transform otherwise infeasible tasks into feasible ones but also display creativity in using tools beyond their conventional functions, based on their material, shape, and geometric features.
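
To make the idea of "numerical semantic information in natural language" concrete, here is a minimal sketch of how a scene could be rendered as text and how an activated reach constraint could be checked by hand. Everything in it is an assumption for illustration only: the `SceneObject` class, the object names, the coordinates, and the spherical workspace of radius 0.70 m are hypothetical and are not taken from RoboTool.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SceneObject:
    name: str
    position: Tuple[float, float, float]  # object center in meters (hypothetical frame)


def describe_scene(objects: List[SceneObject], reach_radius: float) -> str:
    """Render the scene as plain text with explicit numbers, suitable for a prompt."""
    lines = [
        f"The arm base is at [0.00, 0.00, 0.00]; "
        f"it can reach points within {reach_radius:.2f} m."
    ]
    for obj in objects:
        x, y, z = obj.position
        lines.append(f"{obj.name} is at [{x:.2f}, {y:.2f}, {z:.2f}].")
    return "\n".join(lines)


def is_out_of_reach(obj: SceneObject, reach_radius: float) -> bool:
    """The reach constraint is 'activated' when the object lies outside the workspace.

    Assumption: the workspace is a sphere centered on the arm base.
    """
    return math.dist((0.0, 0.0, 0.0), obj.position) > reach_radius


# Hypothetical scene: the milk carton is too far away, the hammer is not.
scene = [
    SceneObject("milk_carton", (0.90, 0.10, 0.05)),
    SceneObject("hammer", (0.40, -0.20, 0.05)),
]
prompt_text = describe_scene(scene, reach_radius=0.70)
activated = [obj.name for obj in scene if is_out_of_reach(obj, 0.70)]
print(prompt_text)
print("Objects that activate the reach constraint:", activated)
```

In this sketch the check is done explicitly in code; the observation in the text is that an LLM given only the textual description can often infer the same activated constraint on its own.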

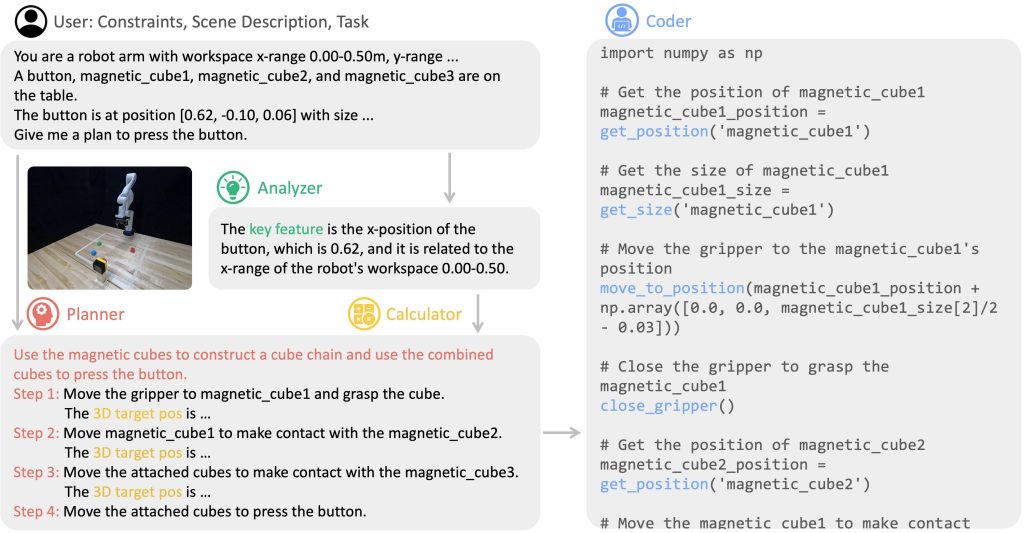

To solve this problem, we introduce RoboTool, a creative robot tool user built on LLMs, which uses tools beyond their standard affordances. RoboTool accepts natural language instructions comprising textual and numerical information about the environment, robot embodiments, and constraints to follow. RoboTool produces code that invokes the robot’s parameterized low-level skills to control both simulated and physical robots. RoboTool consists of four central components, each handling one function, as listed below:

- Analyzer, which processes the natural language input to identify key concepts that could impact the task’s feasibility.

- Planner, which receives both the original language input and the identified key concepts to formulate a comprehensive strategy for completing the task.

- Calculator, which is responsible for determining the parameters, such as the target positions required for each parameterized skill.

- Coder, which converts the comprehensive plan and parameters into executable code.

All of these components are constructed using GPT-4.
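
The four-stage flow described above can be sketched as a simple chain of LLM calls. This is only an illustrative outline under stated assumptions, not the authors' implementation: the `LLM` callable type, the function name `run_robotool`, the bracketed stage headers, and the exact inputs passed to the Calculator are all hypothetical. A recording stub stands in for GPT-4 so the example runs without any API access.

```python
from typing import Callable, List

# Hypothetical interface: a function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def run_robotool(llm: LLM, instruction: str) -> str:
    """Chain the four components; each stage sees the outputs it depends on."""
    # Analyzer: extract the key concept that affects task feasibility.
    key_concept = llm(f"[Analyzer]\n{instruction}")
    # Planner: receives the original input plus the identified key concept.
    plan = llm(f"[Planner]\n{instruction}\nKey concept: {key_concept}")
    # Calculator: determines parameters such as target positions for each skill.
    # (Passing the instruction and the plan here is an assumption.)
    parameters = llm(f"[Calculator]\n{instruction}\nPlan: {plan}")
    # Coder: converts the plan and parameters into executable code.
    return llm(f"[Coder]\nPlan: {plan}\nParameters: {parameters}")


# Stub LLM for demonstration: records which stage was called and echoes it back.
calls: List[str] = []


def recording_llm(prompt: str) -> str:
    stage = prompt.splitlines()[0]
    calls.append(stage)
    return f"output of {stage}"


result = run_robotool(recording_llm, "Grasp the milk carton.")
print(calls)
print(result)
```

Keeping each stage as a separate call mirrors the one-component-per-function design in the list above and makes it straightforward to ablate a stage, for example by skipping the Analyzer call.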

Benchmark

In this work, we aim to explore three challenging categories of creative tool use for robots: tool selection, sequential tool use, and tool manufacturing. We design six tasks for two different robot embodiments: a quadrupedal robot and a robotic arm.

- Tool selection (Sofa-Traversing and Milk-Reaching) requires the reasoning capability to choose the most appropriate tools among multiple options. It demands a broad understanding of object attributes such as size, material, and shape, as well as the ability to analyze the relationship between these properties and the intended objective.

- Sequential tool use (Sofa-Climbing and Can-Grasping) entails utilizing a series of tools in a specific order to reach a desired goal. Its complexity arises from the need for long-horizon planning to determine the best sequence for tool use, with successful completion depending on the accuracy of each step in the plan.

- Tool manufacturing (Cube-Lifting and Button-Pressing) involves accomplishing tasks by crafting tools from available materials or adapting existing ones. This procedure requires the robot to discern implicit connections among objects and assemble components through manipulation.
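
As a compact summary of the benchmark structure above, the six tasks and their categories can be written as a small lookup table. The task names and categories come from the list above; the identifiers `ToolUseCategory`, `BENCHMARK_TASKS`, and `tasks_in` are hypothetical names chosen for this sketch.

```python
from enum import Enum
from typing import Dict, List


class ToolUseCategory(Enum):
    TOOL_SELECTION = "tool selection"
    SEQUENTIAL_TOOL_USE = "sequential tool use"
    TOOL_MANUFACTURING = "tool manufacturing"


# Task name -> category, as listed in the benchmark description.
BENCHMARK_TASKS: Dict[str, ToolUseCategory] = {
    "Sofa-Traversing": ToolUseCategory.TOOL_SELECTION,
    "Milk-Reaching": ToolUseCategory.TOOL_SELECTION,
    "Sofa-Climbing": ToolUseCategory.SEQUENTIAL_TOOL_USE,
    "Can-Grasping": ToolUseCategory.SEQUENTIAL_TOOL_USE,
    "Cube-Lifting": ToolUseCategory.TOOL_MANUFACTURING,
    "Button-Pressing": ToolUseCategory.TOOL_MANUFACTURING,
}


def tasks_in(category: ToolUseCategory) -> List[str]:
    """Return the task names in a category, sorted alphabetically."""
    return sorted(name for name, cat in BENCHMARK_TASKS.items() if cat is category)


for category in ToolUseCategory:
    print(f"{category.value}: {', '.join(tasks_in(category))}")
```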

Results

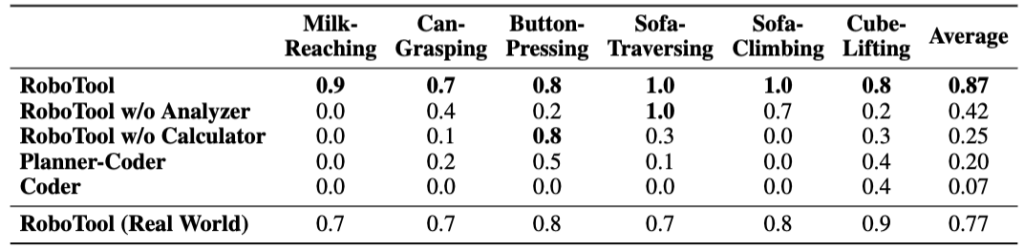

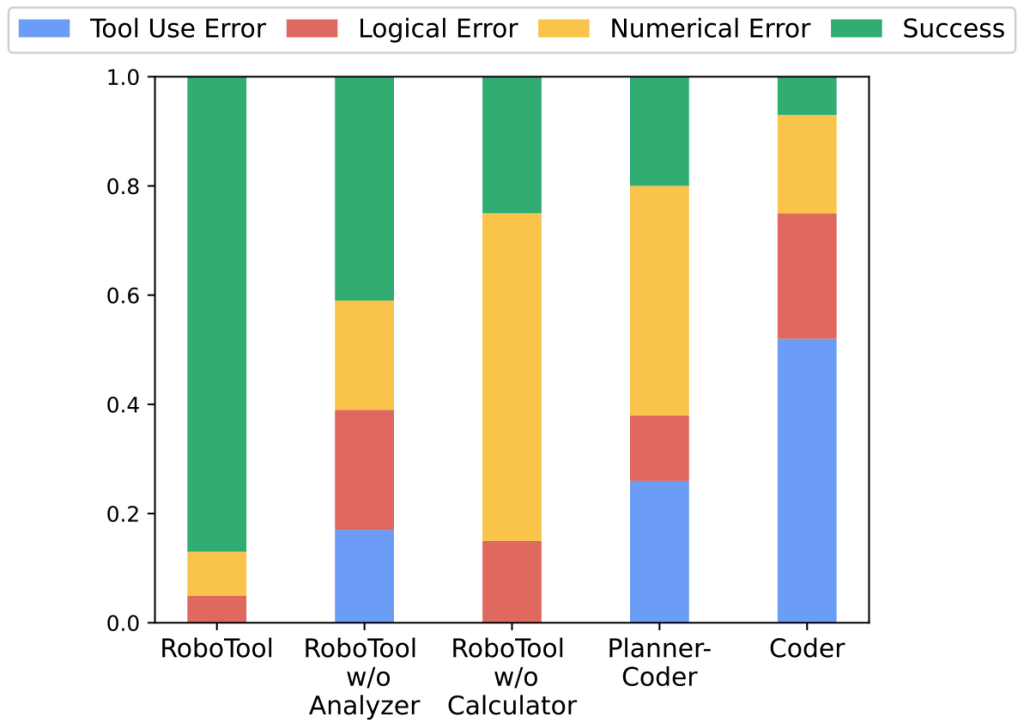

We compare RoboTool with four baselines: one variant of Code-as-Policies (Coder) and three variants of our proposed method, namely RoboTool without Analyzer, RoboTool without Calculator, and Planner-Coder. Our evaluation results show that RoboTool consistently achieves success rates that are comparable to or exceed those of the baselines across six tasks in simulation. RoboTool’s success rate in the real world drops by 0.1 compared with the simulation result, mainly due to perception errors and execution errors associated with parameterized skills, such as the quadrupedal robot falling down the soft sofa. Nonetheless, RoboTool (Real World) still surpasses the simulated performance of all baselines.

We define three types of errors: tool-use error, indicating whether the correct tool is used; logical error, covering planning errors such as using tools in the wrong order or ignoring the provided constraints; and numerical error, including calculating the wrong target positions or adding incorrect offsets. By comparing RoboTool and RoboTool w/o Analyzer, we show that the Analyzer helps reduce the tool-use error. Moreover, the Calculator significantly reduces the numerical error.

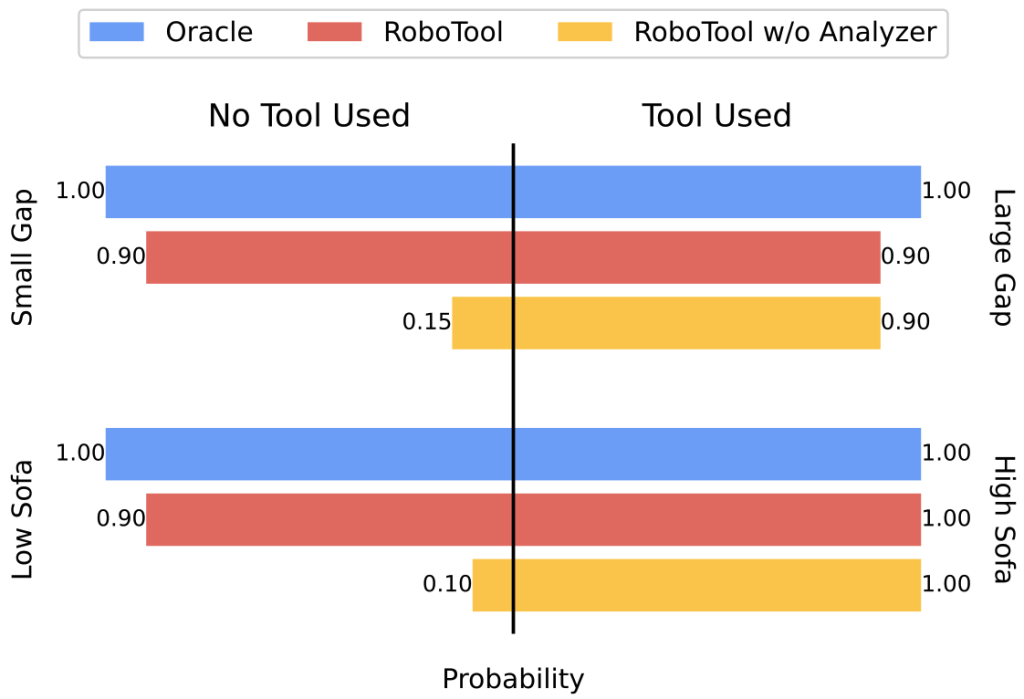

By discerning the key concept, RoboTool exhibits discriminative tool-use behavior (using tools only when necessary), showing more accurate grounding in the environment and embodiment rather than being dominated purely by the prior knowledge in the LLM.

Takeaways

- Our proposed RoboTool can solve long-horizon hybrid discrete-continuous planning problems under environment- and embodiment-related constraints in a zero-shot manner.

- We provide an evaluation benchmark to test various aspects of creative tool-use capability, including tool selection, sequential tool use, and tool manufacturing.

Paper: https://arxiv.org/pdf/2310.13065.pdf

Website: https://creative-robotool.github.io/

Twitter: https://x.com/mengdibellaxu/status/1716447045052215423?s=20