Google’s focus on AI stems from the conviction that this transformational technology will benefit society through its capacity to assist, complement, and empower people in almost every field and sector. In no area is the magnitude of this opportunity greater than in the spheres of healthcare and medicine. Commensurate with our mission to demonstrate these societal benefits, Google Research’s programs in applied machine learning (ML) have helped place Alphabet among the top five most impactful corporate research institutions for health and life sciences publications on the Nature Impact Index in every year from 2019 through 2022.

Our Health research publications have had broad impact, spanning the fields of biomarkers, consumer sensors, dermatology, endoscopy, epidemiology, medicine, genomics, oncology, ophthalmology, pathology, public & environmental health, and radiology. Today we examine three specific themes that came to the fore in the last year:

- Criticality of technology partnerships
- Shift towards mobile health
- Generative ML in health applications

In each section, we emphasize the importance of a measured and collaborative approach to innovation in health. Unlike the “launch and iterate” approach typical in consumer product development, applying ML to health requires thoughtful assessment, ecosystem awareness, and rigorous testing. All healthcare technologies must demonstrate to regulators that they are safe and effective prior to deployment and need to meet rigorous patient privacy and performance monitoring standards. But ML systems, as new entrants to the field, additionally must discover their best uses in the health workflows and earn the trust of healthcare professionals and patients. This domain-specific integration and validation work is not something tech companies should embark upon alone; they should do so only in close collaboration with expert health partners.

Criticality of technology partnerships

Responsible innovation requires the patience and sustained investment to collectively follow the long arc from primary research to human impact. In our own journey to promote the use of ML to prevent blindness in underserved diabetic populations, six years elapsed between our publication of the primary algorithmic research, and the recent deployment study demonstrating the real-world accuracy of the integrated ML solution in a community-based screening setting. Fortunately, we have found that we can radically accelerate this journey from benchtop-ML to AI-at-the-bedside with thoughtfully constructed technology partnerships.

The need for accelerated release of health-related ML technologies is apparent, for example, in oncology. Breast cancer and lung cancer are two of the most common cancer types, and for both, early detection is key. If ML can yield greater accuracy and expanded availability of screening for these cancers, patient outcomes will improve — but the longer we wait to deploy these advances, the fewer people will be helped. Partnership can allow new technologies to safely reach patients with less delay — established med-tech companies can integrate new AI capabilities into existing product suites, seek the appropriate regulatory clearances, and use their existing customer base to rapidly deploy these technologies.

We’ve seen this play out first hand. Just two and a half years after sharing our primary research using ML to improve breast cancer screening, we partnered with iCAD, a leading purveyor of mammography software, to begin integrating our technology into their products. We see this same accelerated pattern in translating our research on deep learning for low-dose CT scans to lung cancer screening workflows through our partnership with RadNet’s Aidence.

Genomics is another area where partnership has proven a powerful accelerant for ML technology. This past year, we collaborated with Stanford University to rapidly diagnose genetic disease by combining novel sequencing technologies and ML to sequence a patient’s entire genome in record-setting time, allowing life-saving interventions. Separately, we announced a partnership with Pacific Biosciences to further advance genomic technologies in research and the clinic by layering our ML techniques on top of their sequencing methods, building on our long-running open-source projects in deep learning genomics. Later in the same year, PacBio announced Revio, a new genome sequencing tool powered by our technology.

| Diagnosing a rare genetic disease may depend on finding a handful of novel mutations out of billions of base pairs in the patient’s genome. |

Partnerships between med-tech companies and AI-tech companies can accelerate translation of technology, but these partnerships are a complement to, not a substitute for, open research and open software that moves the entire field forward. For example, within our medical imaging portfolio, we introduced a new approach to simplify transfer learning for chest x-ray model development, methods to accelerate the life-cycle of ML systems for medical imaging via robust and efficient self-supervision, and techniques to make medical imaging systems more robust to outliers — all within 2022.

Moving forward, we believe this mix of scientific openness and cross-industry partnerships will be a critical catalyst in realizing the benefits of human-centered AI in healthcare and medicine.

Shift towards mobile medicine

In healthcare overall, and recapitulated in ML research in health applications, there has been a shift in emphasis away from concentrated centralized care (e.g., hospitalizations) and towards distributed care (e.g., reaching patients in their communities). Thus, we’re working to develop mobile ML-solutions that can be brought to the patient, rather than bringing the patient to the (ML-powered) clinic. In 2021, we shared some of our early work using smartphone cameras to measure heart rate and to help identify skin conditions. In 2022, we shared new research on the potential for smartphone camera selfies to assess cardiovascular health and metabolic risks to eyesight and the potential for smartphone microphones held to the chest to help interpret heart and lung sounds.

These examples all use the sensors that already exist on every smartphone. While these advances are valuable, there is still great potential in extending mobile health capabilities by developing new sensing technologies. One of our most exciting research projects in this area leverages new sensors that easily connect to modern smartphones to enable mobile maternal ultrasound in under-resourced communities.

Each year, complications from pregnancy and childbirth contribute to 295,000 maternal deaths and 2.4 million neonatal deaths, disproportionately impacting low-income populations globally. Obstetric ultrasound is an important component of quality antenatal care, but up to 50% of women in low- and middle-income countries receive no ultrasound screening during pregnancy. Innovators in ultrasound hardware have made rapid progress towards low-cost, handheld, portable ultrasound probes that can be driven with just a smartphone, but a critical piece is still missing: there is a shortage of field technicians with the skills and expertise to operate the ultrasound probe and interpret its shadowy images. Remote interpretation is feasible, of course, but is impractical in settings with unreliable or slow internet connectivity.

With the right ML-powered mobile ultrasounds, providers such as midwives, nurses, and community health workers could have the potential to bring obstetric ultrasound to those most in need and catch problems before it’s too late. Previous work had shown that convolutional neural networks (CNNs) could interpret ultrasounds acquired by trained sonographers using a standardized acquisition protocol. Recognizing this opportunity for AI to unblock access to potentially lifesaving information, we’ve spent the last couple of years working in collaboration with academic partners and researchers in the US and Zambia to improve and expand the ability to automatically interpret ultrasound video captures acquired by simply sweeping an ultrasound probe across the mother’s abdomen, a procedure that can easily be taught to non-experts.

| This ultrasound acquisition procedure can be performed by novices with a few hours of ultrasound training. |

With just a low-cost, battery-powered ultrasound device and a smartphone, this method estimates gestational age and detects fetal malpresentation with accuracy on par with existing clinical standards for professional sonographers.

| The accuracy of this AI-enabled procedure is on par with the clinical standard for estimating gestational age. |
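To make "on par" more concrete, one common way to compare two gestational age estimators is to measure each one's mean absolute error against a reference value. The sketch below is a minimal illustration under stated assumptions: the function name, the choice of mean absolute error, and all sample values are hypothetical and are not the evaluation protocol or data from the study.

```python
from statistics import mean


def mean_absolute_error(estimates, reference):
    """Average absolute difference, in days, between estimates and reference values."""
    if len(estimates) != len(reference):
        raise ValueError("estimates and reference must have the same length")
    return mean(abs(e - r) for e, r in zip(estimates, reference))


# Hypothetical gestational ages in days; illustrative values only, not study data.
reference_days = [140, 175, 210, 245]
model_days = [143, 171, 212, 240]
sonographer_days = [137, 179, 214, 249]

model_mae = mean_absolute_error(model_days, reference_days)
sonographer_mae = mean_absolute_error(sonographer_days, reference_days)

print(f"model MAE: {model_mae} days, sonographer MAE: {sonographer_mae} days")
```

A real comparison would also need confidence intervals and a pre-specified margin before calling two methods equivalent; a difference in point estimates alone does not establish that.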

We are in the early stages of a widespread transformation in portable medical imaging. In the future, ML-powered mobile ultrasound will augment the phone’s built-in sensors to allow in-the-field triage and screening for a wide range of medical issues, all with minimal training, extending access to care for millions.

Generative ML in Health

As the long arc of the application of ML to health plays out, we expect generative modeling to settle into a role complementary to the pattern recognition systems that are now relatively commonplace. In the past we’ve explored the suitability of generative image models in data augmentation, discussed how generative models might be used to capture interactions among correlated clinical events, and even used them to generate realistic but entirely synthetic electronic medical records for research purposes.

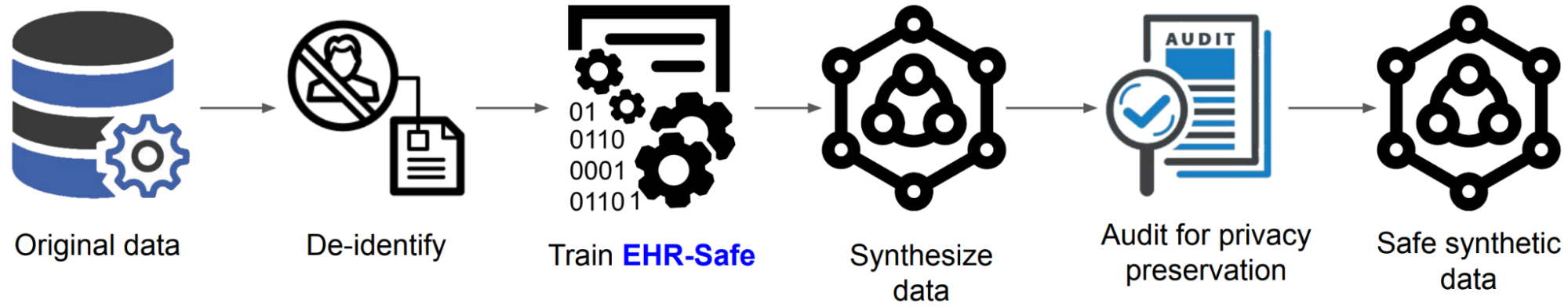

| Generating synthetic data from the original data with EHR-Safe. |

Any discussion of today’s outlook on applied generative modeling would be incomplete without mention of recent developments in the field of large language models (LLMs). Nearly a decade of research in the making, publicly available demonstrations of text synthesis via generative neural networks have captured the world’s imagination. These technologies undoubtedly have real-world applications; in fact, Google was among the first to deploy earlier variants of these networks in live consumer products. But when considering their applications to health, we must again return to our mantra of measurement: we have a fundamental responsibility to test technologies rigorously and proceed with caution. The gravity of building an ML system that might one day impact real people with real health issues cannot be overstated.

To that end, in December of last year we published a pre-print on LLMs and the encoding of clinical knowledge which (1) collated and expanded benchmarks for evaluating automated medical question answering systems, and (2) introduced our own research-grade medical question answering LLM, Med-PaLM. For example, if one asked Med-PaLM, “Does stress cause nosebleeds?” the LLM would generate a response explaining that yes, stress can cause nosebleeds, and detail some possible mechanisms. The purpose of Med-PaLM is to allow researchers to experiment with and improve upon the representation, retrieval, and communication of health information by LLMs; it is not a finished medical question answering product.

We were excited to report that Med-PaLM substantially outperformed other systems on these benchmarks, across the board. That said, a critical take-away of our paper is that merely receiving a “passing” mark on a set of medical exam questions (which ours and some other ML systems do) still falls well short of the safety and accuracy required to support real-world use for medical question answering. We expect that progress in this area will be brisk — but that much like our journey bringing CNNs to medical imaging, the maturation of LLMs for applications in health will require further research, partnership, care, and patience.

| Our model, Med-PaLM, obtains state-of-the-art performance on the MedQA USMLE dataset, exceeding the previous best by 7%. |
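For readers less familiar with how a multiple-choice medical benchmark is scored, the sketch below shows the basic arithmetic behind a "passing" mark. It is a minimal illustration only: the answer key, the model choices, and the 60% threshold are hypothetical assumptions, not values or code from the paper.

```python
def accuracy(predictions, answers):
    """Fraction of questions where the predicted option matches the answer key."""
    if not answers or len(predictions) != len(answers):
        raise ValueError("predictions and answers must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


# Hypothetical answer key and model outputs; illustrative values only.
answer_key = ["A", "C", "B", "D", "A"]
model_choices = ["A", "C", "D", "D", "A"]

# Assumed pass mark for illustration; real exams define their own thresholds.
PASS_THRESHOLD = 0.60

score = accuracy(model_choices, answer_key)
passes = score >= PASS_THRESHOLD

print(f"accuracy: {score:.0%}, passes: {passes}")
```

A single accuracy figure like this treats every wrong answer the same and says nothing about whether a free-text response is complete, well reasoned, or potentially harmful, which is one reason a passing score alone falls short of what real-world medical question answering requires.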

Concluding thoughts

We expect all these trends to continue, and perhaps even accelerate, in 2023. In a drive to more efficiently map the arc from innovation to impact in AI for healthcare, we will see increased collaboration between academic, med-tech, AI-tech, and healthcare organizations. This is likely to interact positively with the measured, but nonetheless transformational, expansion of the role of phones and mobile sensors in the provisioning of care, potentially well beyond what we presently imagine telehealth to be. And of course, it’s hard to be in the field of AI these days and not be excited about the prospects for generative AI and large language models. But particularly in the health domain, it is essential that we use the tools of partnership and the highest standards of testing to realize this promise. Technology will keep changing, and what we know about human health will keep changing too. What will remain the same is people caring for each other and trying to do things better than before. We are excited about the role AI can play in improving healthcare in years to come.

Google Research, 2022 & beyond

This was the seventh blog post in the “Google Research, 2022 & Beyond” series. Other posts in this series are listed in the table below:

| * Articles will be linked as they are released. |