A guest post by Narine Hall, Assistant Professor at Champlain College, CEO of InSpace

InSpace is a communication and virtual learning platform that gives people the ability to interact, collaborate, and educate in familiar physical ways, but in a virtual space. InSpace is built by educators for educators, putting education at the center of the platform.

- InSpace is designed to mirror the fluid, personal, and interactive nature of a real classroom. It allows participants to break free of “Brady Bunch” boxes in existing conference solutions to create a fun, natural, and engaging environment that fosters interaction and collaboration.

- Each person is represented in a video circle that can freely move around the space. When people are next to each other, they can hear and engage in conversation, and as they move away, the audio fades, allowing them to find new conversations.

- As participants zoom out, they can see the entire space, which provides visual social cues. People can seamlessly switch from class discussions to private conversations or group/team-based work, similar to the format of a lab or classroom.

- Teachers can speak to everyone when needed, move between individual students and groups for more private discussion, and place groups of students in audio-isolated rooms for collaboration while still belonging to one virtual space.

Since InSpace is a collaboration platform, chat is one of its fundamental features. From day one, we wanted to provide a mechanism that warns users against sending toxic messages and helps protect them from receiving such messages. For example, a teacher working with young students may want a way to prevent them from typing inappropriate comments. Or, a moderator of a large discussion may want a way to reduce inappropriate spam. Or individual users may simply want to filter such messages out on their own.

A simple way to identify toxic comments would be to check messages against a list of words, such as profanity. We wanted to go a step further: rather than identifying toxic messages only by the words they contain, we also wanted to take context into account. So, we decided to use machine learning to accomplish that goal.
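To make the limitation of the word-list approach concrete, here is a minimal sketch of it using a tiny, purely hypothetical list (`BLOCKED_WORDS` and `containsBlockedWord` are illustrative names, not InSpace code). It flags a supportive message that happens to contain a listed word and misses a hostile one that uses none, which is the context problem a learned model is meant to address.

```javascript
// Hypothetical word list for illustration only; a real list would be much longer.
const BLOCKED_WORDS = new Set(["idiot", "stupid"]);

// Naive baseline: flag a message if any of its words appears in the list,
// ignoring everything else about the sentence.
function containsBlockedWord(text) {
  const words = text.toLowerCase().match(/[a-z']+/g) ?? [];
  return words.some((word) => BLOCKED_WORDS.has(word));
}

// Flagged even though the message is encouraging (false positive).
console.log(containsBlockedWord("Don't call yourself stupid, your answer was great!"));
// Not flagged even though the message is hostile (missed).
console.log(containsBlockedWord("Nobody in this class wants to hear from you."));
```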

After some research, we found a pre-trained model for toxicity detection in TensorFlow.js that could be easily integrated into our platform. Importantly, this model runs entirely in the browser, which means that we can warn users against sending toxic comments without their messages ever being stored or processed by a server.

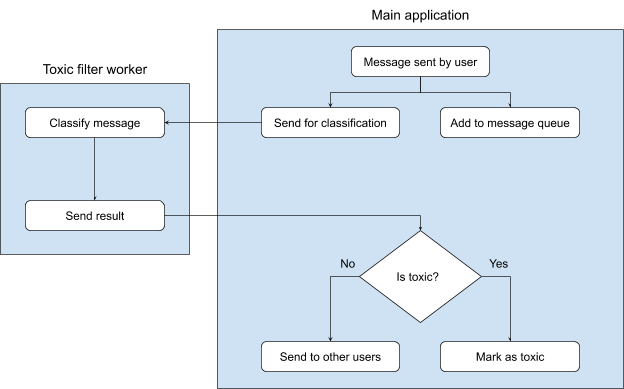

Performance-wise, we found that running toxicity detection on the browser’s main thread would be detrimental to the user experience. We decided to use the Web Workers API to separate message toxicity detection from the main application, so that the two run independently and classification does not block the interface.

Web Workers communicate with the main application by sending and receiving messages, which can carry your data. Whenever a user sends a chat message, it is added to a queue and sent from the main application to the web worker. When the web worker receives the message, it classifies it and, once the output is ready, sends the result back to the main application. Based on that result, the main app either sends the message to all participants or warns the user that it is toxic.

Below is the pseudocode for the main application. We initialize the web worker by passing the path to its script, set the callback that is called each time the worker sends a message back, and declare the callback that is called when the user submits a message.

```javascript
// main application

// initialize the web worker by passing the path to its script
const worker = new Worker('toxicity-filter.worker.js');

// set the callback that processes the results sent back by the worker
worker.onmessage = ({ data: { message, isToxic } }) => {
  if (isToxic) {
    markAsToxic(message); // warn the sender instead of delivering the message
  } else {
    sendToAll(message); // deliver the message to all participants
  }
};
```

When the user sends a message, we pass it to the web worker:

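```javascript
// called when the user submits a chat message
const onMessageSubmit = (message) => {
  worker.postMessage(message); // hand the message to the worker for classification
  addToQueue(message); // keep track of it until the worker replies
};
```

After the worker is initialized, it listens for data messages from the main app and handles each one in its `onmessage` callback, which sends the classification result back to the main app.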

```javascript
// toxicity-filter worker

// import dependencies (importScripts is available in classic web workers)
importScripts(
  // the main library to run TensorFlow in the browser
  'https://cdn.jsdelivr.net/npm/@tensorflow/tfjs',
  // pre-trained models for toxicity detection
  'https://cdn.jsdelivr.net/npm/@tensorflow-models/toxicity',
);

// probability threshold above which a label counts as a match
const threshold = 0.9;

// the model is loaded once; this promise is reused to classify every message
const modelPromise = toxicity.load(threshold);

// callback that runs whenever the main app sends a data message
onmessage = ({ data: message }) => {
  modelPromise.then(model => {
    model.classify([message.body]).then(predictions => {
      // as we want to check the toxicity for all labels,
      // `predictions` will contain the results for all 7 labels,
      // so we check whether there is a match for any of them
      const isToxic = predictions.some(prediction => prediction.results[0].match);

      // send the original message back to the main app along with the result
      postMessage({ message, isToxic });
    });
  });
};
```
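Each prediction returned by `model.classify` has a `label` and a `results` array with one entry per input sentence. In each entry, `match` is `true` when the toxic probability exceeds the threshold, `false` when the non-toxic probability does, and `null` when neither does, so the `some(...)` check above treats uncertain (`null`) results as non-toxic. The sketch below runs that same rule against hand-written mock predictions (made-up values, not real model output, and only a subset of the seven labels) and adds a hypothetical `toxicLabels` helper showing how the same array could report which labels fired, for example to tell the user why a message was flagged.

```javascript
// Mock output shaped like the toxicity model's predictions for one sentence.
// The probabilities are invented for illustration: [not toxic, toxic].
const predictions = [
  { label: "identity_attack", results: [{ probabilities: [0.98, 0.02], match: false }] },
  { label: "insult", results: [{ probabilities: [0.04, 0.96], match: true }] },
  { label: "obscene", results: [{ probabilities: [0.5, 0.5], match: null }] },
  { label: "toxicity", results: [{ probabilities: [0.05, 0.95], match: true }] },
];

// Same rule as the worker: toxic if any label matched.
// `null` is falsy, so an uncertain label does not count as a match.
const isToxic = (preds) => preds.some((p) => p.results[0].match);

// Hypothetical helper: the labels whose match is exactly true.
const toxicLabels = (preds) =>
  preds.filter((p) => p.results[0].match === true).map((p) => p.label);

console.log(isToxic(predictions)); // true
console.log(toxicLabels(predictions)); // [ 'insult', 'toxicity' ]
```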

As you can see, the toxicity detection package is straightforward to integrate with an app and does not require significant changes to the existing architecture. The main application only needs a small “connector,” and the filter logic lives in a separate file.
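In the main application code, the queue is represented only by the `addToQueue` placeholder. One possible way to flesh out that connector, sketched under our own assumptions (the `id` field, `createToxicityConnector`, and the fake worker are hypothetical, not InSpace’s implementation), is to keep a map of pending requests and resolve a promise when the worker replies. Because the worker echoes back the whole message object, adding an `id` next to `body` is enough to pair each reply with its request, even if replies arrive out of order.

```javascript
// Hypothetical connector: wraps a toxicity worker in a promise-based API.
function createToxicityConnector(worker) {
  const pending = new Map(); // id -> resolve function of a waiting promise
  let nextId = 0;

  // The worker replies with { message, isToxic }, echoing the message it received.
  worker.onmessage = ({ data: { message, isToxic } }) => {
    const resolve = pending.get(message.id);
    if (resolve) {
      pending.delete(message.id); // the request is no longer pending
      resolve(isToxic);
    }
  };

  // Returns a promise that resolves to true if the worker flags the text.
  return function check(body) {
    const id = nextId++;
    return new Promise((resolve) => {
      pending.set(id, resolve);
      worker.postMessage({ id, body });
    });
  };
}

// Stand-in for a real Worker so the sketch runs outside a browser:
// it "classifies" by looking for the word "bad" and replies asynchronously.
const fakeWorker = {
  postMessage(message) {
    setTimeout(() => {
      this.onmessage({ data: { message, isToxic: message.body.includes("bad") } });
    }, 0);
  },
};

const check = createToxicityConnector(fakeWorker);
check("hello class").then((toxic) => console.log("hello class ->", toxic));
check("bad words").then((toxic) => console.log("bad words ->", toxic));
```

In a browser, the fake worker would be replaced by `new Worker('toxicity-filter.worker.js')`, and the submit handler could `await check(text)` before deciding whether to deliver the message or warn the sender.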

To learn more about InSpace visit https://inspace.chat.