NVIDIA researchers have pumped a double shot of acceleration into their latest text-to-3D generative AI model, dubbed LATTE3D.

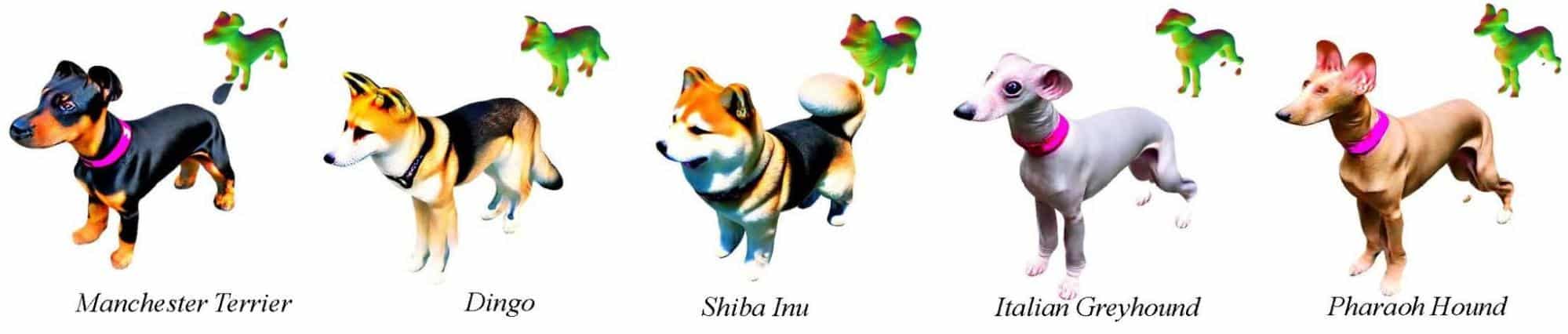

Like a virtual 3D printer, LATTE3D turns text prompts into 3D representations of objects and animals within a second.

Crafted in a popular format used for standard rendering applications, the generated shapes can be easily served up in virtual environments for developing video games, ad campaigns, design projects or virtual training grounds for robotics.

“A year ago, it took an hour for AI models to generate 3D visuals of this quality — and the current state of the art is now around 10 to 12 seconds,” said Sanja Fidler, vice president of AI research at NVIDIA, whose Toronto-based AI lab team developed LATTE3D. “We can now produce results an order of magnitude faster, putting near-real-time text-to-3D generation within reach for creators across industries.”

This advancement means that LATTE3D can produce 3D shapes near instantly when running inference on a single GPU, such as the NVIDIA RTX A6000, which was used for the NVIDIA Research demo.

Ideate, Generate, Iterate: Shortening the Cycle

Instead of starting a design from scratch or combing through a 3D asset library, a creator could use LATTE3D to generate detailed objects as quickly as ideas pop into their head.

The model generates a few different 3D shapes for each text prompt, giving a creator several candidates to choose from. Selected objects can be optimized for higher quality within a few minutes. Then, users can export the shape into graphics software applications or platforms such as NVIDIA Omniverse, which enables Universal Scene Description (OpenUSD)-based 3D workflows and applications.
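To make the export step concrete, here is a minimal Python sketch of what a shape looks like once written in OpenUSD's human-readable text form (`.usda`). It is an illustration under assumptions, not LATTE3D's actual exporter: the `mesh_to_usda` helper, the `GeneratedShape` name and the tetrahedron stand-in geometry are all hypothetical, and a production pipeline would more likely use the OpenUSD libraries than build the text by hand.

```python
def mesh_to_usda(name, points, faces):
    """Serialize a polygon mesh as a minimal OpenUSD text (.usda) layer.

    Hypothetical helper for illustration only; not part of LATTE3D or OpenUSD.
    """
    # Number of vertices in each face, e.g. 3 for a triangle.
    counts = ", ".join(str(len(face)) for face in faces)
    # Flattened list of vertex indices, face after face.
    indices = ", ".join(str(i) for face in faces for i in face)
    # Vertex positions as (x, y, z) tuples.
    pts = ", ".join(f"({x}, {y}, {z})" for x, y, z in points)
    return (
        "#usda 1.0\n"
        "(\n"
        f'    defaultPrim = "{name}"\n'
        ")\n"
        "\n"
        f'def Mesh "{name}"\n'
        "{\n"
        f"    int[] faceVertexCounts = [{counts}]\n"
        f"    int[] faceVertexIndices = [{indices}]\n"
        f"    point3f[] points = [{pts}]\n"
        "}\n"
    )


# Stand-in geometry (assumption): a tetrahedron in place of a generated shape.
TETRA_POINTS = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
TETRA_FACES = [(0, 2, 1), (0, 1, 3), (1, 2, 3), (0, 3, 2)]

usda_text = mesh_to_usda("GeneratedShape", TETRA_POINTS, TETRA_FACES)
```

Writing `usda_text` to a file with a `.usda` extension should give a layer that OpenUSD-aware tools can open, though real assets would usually also carry normals, texture coordinates and materials.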

While the researchers trained LATTE3D on two specific datasets — animals and everyday objects — developers could use the same model architecture to train the AI on other data types.

If trained on a dataset of 3D plants, for example, a version of LATTE3D could help a landscape designer quickly fill out a garden rendering with trees, flowering bushes and succulents while brainstorming with a client. If trained on household objects, the model could generate items to fill in 3D simulations of homes, which developers could use to train personal assistant robots before they’re tested and deployed in the real world.

LATTE3D was trained using NVIDIA A100 Tensor Core GPUs. In addition to 3D shapes, the model was trained on diverse text prompts generated using ChatGPT to improve the model’s ability to handle the various phrases a user might come up with to describe a particular 3D object — for example, understanding that prompts featuring various canine species should all generate doglike shapes.

NVIDIA Research comprises hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics.

Researchers shared work at NVIDIA GTC this week that advances the state of the art for training diffusion models. Read more on the NVIDIA Technical Blog, and see the full list of NVIDIA Research sessions at GTC, running in San Jose, Calif., and online through March 21.

For the latest NVIDIA AI news, watch the replay of NVIDIA founder and CEO Jensen Huang’s keynote address at GTC.