Promising to transform trillion-dollar industries and address the “grand challenges” of our time, NVIDIA founder and CEO Jensen Huang Tuesday shared a vision of an era where intelligence is created on an industrial scale and woven into real and virtual worlds.

Kicking off NVIDIA’s GTC conference, Huang introduced new silicon, including the new Hopper GPU architecture and new H100 GPU, as well as new AI and accelerated computing software and powerful new data-center-scale systems.

“Companies are processing, refining their data, making AI software, becoming intelligence manufacturers,” Huang said, speaking from a virtual environment in the NVIDIA Omniverse real-time 3D collaboration and simulation platform as he described how AI is “racing in every direction.”

And all of it will be brought together by Omniverse to speed collaboration between people and AIs, better model and understand the real world, and serve as a proving ground for new kinds of robots, “the next wave of AI.”

Huang shared his vision with a gathering that has become one of the world’s most important AI conferences, bringing together leading developers, scientists and researchers.

The conference features more than 1,600 speakers, including speakers from companies such as American Express, DoorDash, LinkedIn, Pinterest, Salesforce, ServiceNow, Snap and Visa, as well as 200,000 registered attendees.

Huang’s presentation began with a spectacular flythrough of NVIDIA’s new campus, rendered in Omniverse, including buzzing labs working on advanced robotics projects.

He shared how the company’s work with the broader ecosystem is saving lives by advancing healthcare and drug discovery, and even helping save our planet.

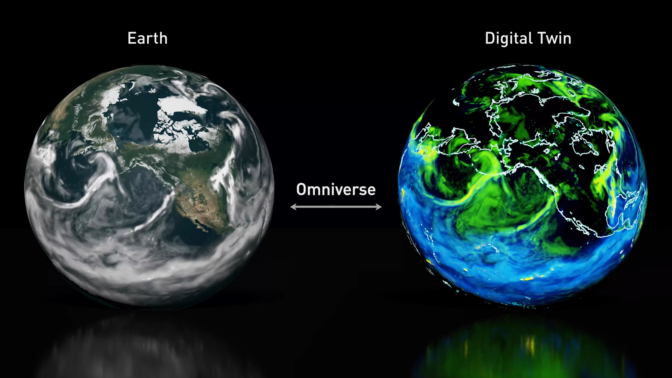

“Scientists predict that a supercomputer a billion times larger than today’s is needed to effectively simulate regional climate change,” Huang said.

“NVIDIA is going to tackle this grand challenge with our Earth-2, the world’s first AI digital twin supercomputer, and invent new AI and computing technologies to give us a billion-X before it’s too late,” he said.

New Silicon — NVIDIA H100: A “New Engine of the World’s AI Infrastructure”

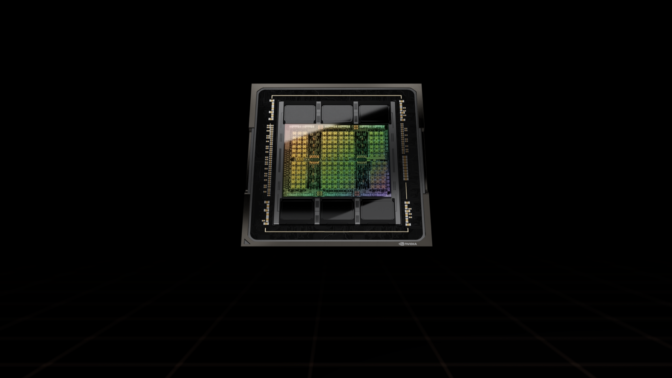

To power these ambitious efforts, Huang introduced the NVIDIA H100, built on the Hopper architecture, as the “new engine of the world’s AI infrastructures.”

AI applications like speech, conversation, customer service and recommenders are driving fundamental changes in data center design, he said.

“AI data centers process mountains of continuous data to train and refine AI models,” Huang said. “Raw data comes in, is refined, and intelligence goes out — companies are manufacturing intelligence and operating giant AI factories.”

The factory operation is 24/7 and intense, and minor improvements in quality drive a significant increase in customer engagement and company profits, Huang explained.

H100 will help these factories move faster. The “massive” 80 billion transistor chip uses TSMC’s 4N process.

“Hopper H100 is the biggest generational leap ever — 9x at-scale training performance over A100 and 30x large-language-model inference throughput,” Huang said.

Hopper is packed with technical breakthroughs, including a new Transformer Engine to speed up these networks 6x without losing accuracy.

“Transformer model training can be reduced from weeks to days,” Huang said.
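As a rough illustration of the “weeks to days” claim, the sketch below applies the 9x at-scale training figure quoted above to a hypothetical three-week baseline run. The baseline duration and the helper name `accelerated_days` are assumptions made for illustration only; real speedups depend on the model, the cluster and the workload.

```python
def accelerated_days(baseline_days: float, speedup: float) -> float:
    """Return the wall-clock days left after applying a constant speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_days / speedup


# Hypothetical baseline: a three-week (21-day) training run on the older GPU.
baseline_days = 21.0
# 9x is the at-scale training figure quoted in the keynote.
print(f"{accelerated_days(baseline_days, 9.0):.1f} days")  # prints "2.3 days"
```

Under these assumptions, a run that took three weeks would finish in a little over two days.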

H100 is in production, with availability starting in Q3, Huang announced.

Huang also announced the Grace CPU Superchip, NVIDIA’s first discrete data center CPU for high-performance computing.

It comprises two CPU chips connected over a 900 gigabytes per second NVLink chip-to-chip interconnect to make a 144-core CPU with 1 terabyte per second of memory bandwidth, Huang explained.

“Grace is the ideal CPU for the world’s AI infrastructures,” Huang said.

Huang also announced new Hopper GPU-based AI supercomputers — DGX H100, H100 DGX POD and DGX SuperPOD.

To connect it all, NVIDIA’s new NVLink high-speed interconnect technology will be coming to all future NVIDIA chips — CPUs, GPUs, DPUs and SOCs, Huang said.

He also announced NVIDIA will make NVLink available to customers and partners to build companion chips.

“NVLink opens a new world of opportunities for customers to build semi-custom chips and systems that leverage NVIDIA’s platforms and ecosystems,” Huang said.

New Software — AI Has “Fundamentally Changed” Software

Thanks to the speedups unleashed by accelerated computing, the progress of AI is “stunning,” Huang declared.

“AI has fundamentally changed what software can make and how you make software,” Huang said.

Transformers, Huang explained, have opened up self-supervised learning and removed the need for human-labeled data. As a result, Transformers are being unleashed in a growing array of fields.

“Transformers made self-supervised learning possible, and AI jumped to warp speed,” Huang said.

Google BERT for language understanding, NVIDIA MegaMolBART for drug discovery, and DeepMind AlphaFold2 are all breakthroughs traced to Transformers, Huang said.

Huang walked through new deep learning models for natural language understanding, physics, creative design, character animation and even — with NVCell — chip layout.

“AI is racing in every direction — new architectures, new learning strategies, larger and more robust models, new science, new applications, new industries — all at the same time,” Huang said.

NVIDIA is “all hands on deck” to speed new breakthroughs in AI and speed the adoption of AI and machine learning to every industry, Huang said.

The NVIDIA AI platform is getting major updates, Huang said, including Triton Inference Server, the NeMo Megatron 0.9 framework for training large language models, and the Maxine framework for audio and video quality enhancement.

The platform includes NVIDIA AI Enterprise 2.0, an end-to-end, cloud-native suite of AI and data analytics tools and frameworks, optimized and certified by NVIDIA and now supported across every major data center and cloud platform.

“We updated 60 SDKs at this GTC,” Huang said. “For our 3 million developers, scientists and AI researchers, and tens of thousands of startups and enterprises, the same NVIDIA systems you run just got faster.”

NVIDIA AI software and accelerated computing SDKs are now relied on by some of the world’s largest companies.

- Microsoft Translator accelerates global communications with real-time translation capabilities powered by NVIDIA Triton.

- AT&T accelerates their data science teams with NVIDIA RAPIDS software that makes it easier to process trillions of records.

“NVIDIA SDKs serve healthcare, energy, transportation, retail, finance, media and entertainment — a combined $100 trillion of industries,” Huang said.

‘The Next Evolution’: Omniverse for Virtual Worlds

Half a century ago, the Apollo 13 lunar mission ran into trouble. To save the crew, Huang said, NASA engineers created a model of the crew capsule back on Earth to “work the problem.”

“Extended to vast scales, a digital twin is a virtual world that’s connected to the physical world,” Huang said. “And in the context of the internet, it is the next evolution.”

NVIDIA Omniverse software for building digital twins, and new data-center-scale NVIDIA OVX systems, will be integral for “action-oriented AI.”

“Omniverse is central to our robotics platforms,” Huang said, announcing new releases and updates for Omniverse. “And like NASA and Amazon, we and our customers in robotics and industrial automation realize the importance of digital twins and Omniverse.”

OVX will run Omniverse digital twins for large-scale simulations with multiple autonomous systems operating in the same space-time, Huang explained.

The backbone of OVX is its networking fabric, Huang said, announcing the NVIDIA Spectrum-4 high-performance data networking infrastructure platform.

The world’s first 400Gbps end-to-end networking platform, NVIDIA Spectrum-4 consists of the Spectrum-4 switch family, NVIDIA ConnectX-7 SmartNIC, NVIDIA BlueField-3 DPU and NVIDIA DOCA data center infrastructure software.

And to make Omniverse accessible to even more users, Huang announced Omniverse Cloud. Now, with just a few clicks, collaborators can connect through Omniverse on the cloud.

Huang showed how this works with a demo of four designers, one an AI, collaborating to build a virtual world.

He also showed how Amazon uses Omniverse Enterprise “to design and optimize their incredible fulfillment center operations.”

“Modern fulfillment centers are evolving into technical marvels — facilities operated by humans and robots working together,” Huang said.

The ‘Next Wave of AI’: Robots and Autonomous Vehicles

New silicon, new software and new simulation capabilities will unleash “the next wave of AI,” Huang said, robots able to “devise, plan and act.”

NVIDIA Avatar, DRIVE, Metropolis, Isaac and Holoscan are robotics platforms built end to end and full stack around “four pillars”: ground-truth data generation, AI model training, the robotics stack and Omniverse digital twins, Huang explained.

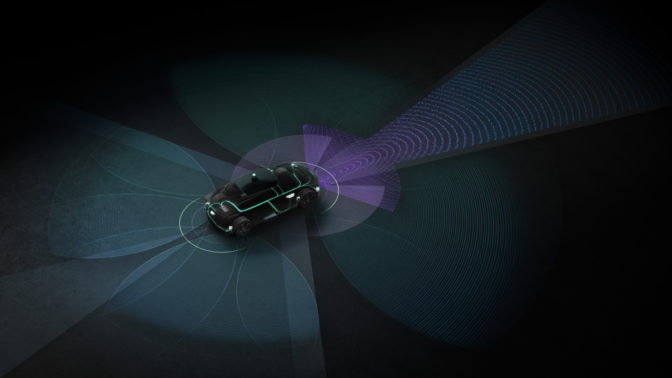

The NVIDIA DRIVE autonomous vehicle system is essentially an “AI chauffeur,” Huang said.

And Hyperion 8 — NVIDIA’s hardware architecture for self-driving cars on which NVIDIA DRIVE is built — can achieve full self-driving with a 360-degree camera, radar, lidar and ultrasonic sensor suite.

Hyperion 8 will ship in Mercedes-Benz cars starting in 2024, followed by Jaguar Land Rover in 2025, Huang said.

Huang announced that NVIDIA Orin, a centralized AV and AI computer that acts as the engine of new-generation EVs, robotaxis, shuttles and trucks, started shipping this month.

And Huang announced Hyperion 9, featuring the coming DRIVE Atlan SoC for double the performance of the current DRIVE Orin-based architecture, which will ship starting in 2026.

BYD, the second-largest EV maker globally, will adopt the DRIVE Orin computer for cars starting production in the first half of 2023, Huang announced.

Overall, NVIDIA’s automotive pipeline has grown to more than $11 billion over the next six years.

Clara Holoscan puts much of the real-time computing muscle used in DRIVE to work supporting medical instruments and real-time sensors, such as RF ultrasound, 4K surgical video, high-throughput cameras and lasers.

Huang showed a video of Holoscan accelerating images from a light-sheet microscope — which creates a “movie” of cells moving and dividing.

It typically takes an entire day to process the 3TB of data these instruments produce in an hour.

At the Advanced Bioimaging Center at UC Berkeley, however, researchers using Holoscan are able to process this data in real-time, enabling them to auto-focus the microscope while experiments are running.
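To put those figures in perspective, the sketch below works out the sustained throughput needed to keep pace with an instrument producing 3TB per hour, and the speedup implied by going from a day of processing to real time. It assumes decimal units (1 TB = 10^12 bytes) and a 24-hour “entire day”; the function names are hypothetical and are not part of Holoscan.

```python
TB = 10**12  # assumption: decimal terabytes
GB = 10**9
SECONDS_PER_HOUR = 3600


def required_throughput_gb_per_s(data_tb: float, capture_hours: float) -> float:
    """Sustained rate (GB/s) needed to process data as fast as it is captured."""
    return data_tb * TB / (capture_hours * SECONDS_PER_HOUR) / GB


def speedup_for_real_time(processing_hours: float, capture_hours: float) -> float:
    """How many times faster processing must run to match the capture time."""
    return processing_hours / capture_hours


print(round(required_throughput_gb_per_s(3, 1), 2))  # prints 0.83
print(speedup_for_real_time(24, 1))                  # prints 24.0
```

Under these assumptions, real-time processing means sustaining roughly 0.83 GB/s, about 24 times faster than the day-long workflow.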

Holoscan development platforms are available for early access customers today, with general availability in May and medical-grade readiness in the first quarter of 2023.

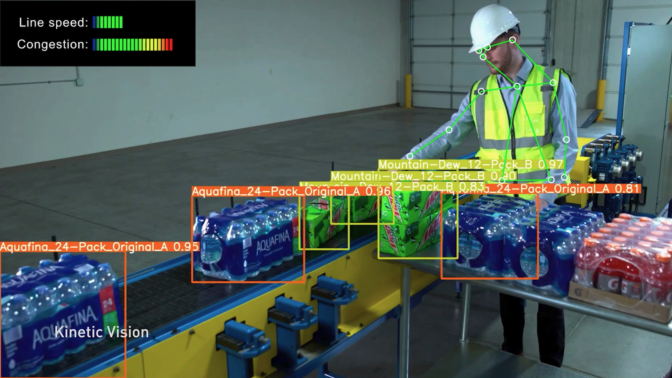

NVIDIA is also working with thousands of customers and developers who are building robots for manufacturing, retail, healthcare, agriculture, construction, airports and entire cities, Huang said.

NVIDIA’s robotics platforms consist of Metropolis and Isaac — Metropolis is a stationary robot tracking moving things, while Isaac is a platform for things that move, Huang explained.

To help robots navigate indoor spaces — like factories and warehouses — NVIDIA announced Isaac Nova Orin, built on Jetson AGX Orin, a state-of-the-art compute and sensor reference platform to accelerate autonomous mobile robot development and deployment.

In a video, Huang showed how PepsiCo uses Metropolis and an Omniverse digital twin together.

Four Layers, Five Dynamics

Huang ended by tying all the technologies, product announcements and demos back into a vision of how NVIDIA will drive forward the next generation of computing.

NVIDIA announced new products across its four-layer stack: hardware; system software and libraries; the software platforms NVIDIA HPC, NVIDIA AI and NVIDIA Omniverse; and AI and robotics application frameworks, Huang explained.

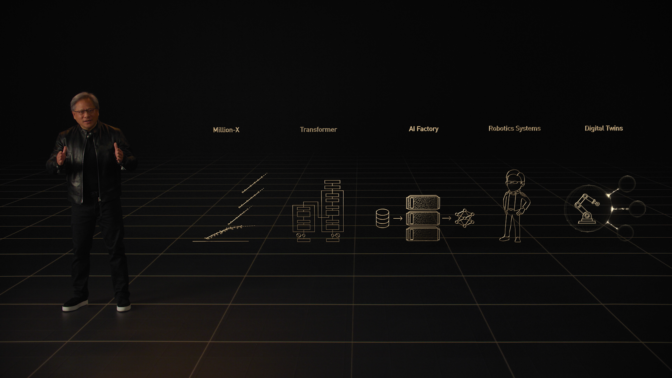

Huang also ticked through the five dynamics shaping the industry: million-X computing speedups, transformers turbocharging AI, data centers becoming AI factories, exponentially increasing demand for robotics systems, and digital twins for the next era of AI.

“Accelerating across the full stack and at data center scale, we will strive for yet another million-X in the next decade,” Huang said, concluding his talk. “I can’t wait to see what the next million-X brings.”

Noting that Omniverse generated “every rendering and simulation you saw today,” Huang then introduced a stunning video put together by NVIDIA’s creative team, taking viewers “on one more trip into Omniverse” for a surprising jazz number set in the heart of NVIDIA’s campus, with a cameo from Huang’s digital counterpart, Toy Jensen.

The post Keynote Wrap Up: Turning Data Centers into ‘AI Factories,’ NVIDIA CEO Intros Hopper Architecture, H100 GPU, New Supercomputers, Software appeared first on NVIDIA Blog.