NVIDIA researchers are defining ways to make faster AI chips in systems with greater bandwidth that are easier to program, said Bill Dally, NVIDIA’s chief scientist, in a keynote released today for a virtual GTC China event.

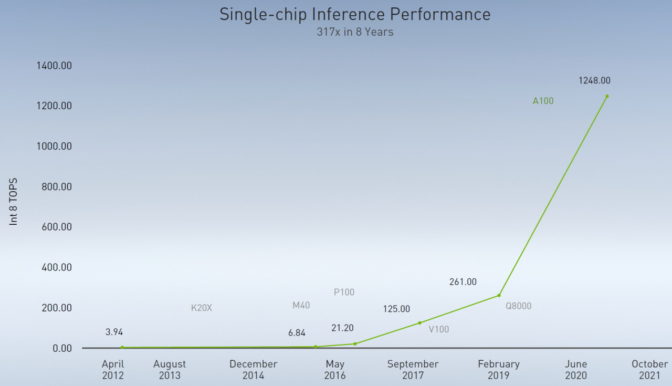

He described three projects as examples of how the 200-person research team he leads is working to stoke Huang’s Law — the prediction named for NVIDIA CEO Jensen Huang that GPUs will double AI performance every year.

“If we really want to improve computer performance, Huang’s Law is the metric that matters, and I expect it to continue for the foreseeable future,” said Dally, who helped direct research at NVIDIA in AI, ray tracing and fast interconnects.

An Ultra-Efficient Accelerator

Toward that end, NVIDIA researchers created a tool called MAGNet that generated an AI inference accelerator that hit 100 tera-operations per watt in a simulation. That’s more than an order of magnitude greater efficiency than today’s commercial chips.
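To put that efficiency figure in more familiar units, here is a back-of-the-envelope conversion (an illustrative sketch, not a calculation from the keynote). One watt is one joule per second, so operations per second per watt is the same as operations per joule. The function name and the 10 TOPS/W comparison point are hypothetical, chosen only to show what "an order of magnitude" means in energy terms.

```python
def energy_per_op_femtojoules(tera_ops_per_watt: float) -> float:
    """Convert an efficiency in tera-operations per watt to femtojoules per operation."""
    # 1 W = 1 J/s, so (ops/s) per watt equals ops per joule.
    ops_per_joule = tera_ops_per_watt * 1e12
    # 1 J = 1e15 fJ, so divide the femtojoules in one joule by the ops it buys.
    return 1e15 / ops_per_joule


# Figure quoted in the article for the simulated MAGNet-generated accelerator.
magnet_fj = energy_per_op_femtojoules(100.0)

# Hypothetical comparison point: a chip one order of magnitude less efficient.
baseline_fj = energy_per_op_femtojoules(10.0)

print(f"100 TOPS/W -> {magnet_fj:.1f} fJ per operation")
print(f" 10 TOPS/W -> {baseline_fj:.1f} fJ per operation (hypothetical baseline)")
```

At 100 tera-operations per watt, each operation costs about 10 femtojoules.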

MAGNet uses new techniques to orchestrate the flow of information through a device in ways that minimize the data movement that burns most of the energy in today’s chips. The research prototype is implemented as a modular set of tiles so it can scale flexibly.

A separate effort seeks to replace today’s electrical links inside systems with faster optical ones.

Firing on All Photons

“We can see our way to doubling the speed of our NVLink [that connects GPUs] and maybe doubling it again, but eventually electrical signaling runs out of gas,” said Dally, who holds more than 120 patents and chaired the computer science department at Stanford before joining NVIDIA in 2009.

The team is collaborating with researchers at Columbia University on ways to harness techniques telecom providers use in their core networks to merge dozens of signals onto a single optical fiber.

The technique, called dense wavelength division multiplexing, could pack multiple terabits per second into links that fit within a single millimeter of space on the side of a chip, more than 10x the density of today’s interconnects.

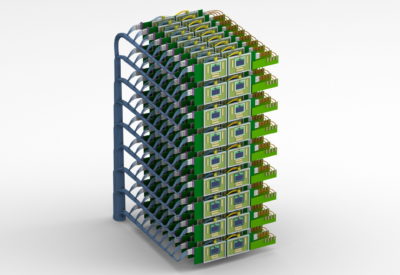

Besides faster throughput, the optical links enable denser systems. For example, Dally showed a mockup of a future NVIDIA DGX system with more than 160 GPUs.

In software, NVIDIA’s researchers have prototyped a new programming system called Legate. It lets developers take a program written for a single GPU and run it on a system of any size — even a giant supercomputer like Selene that packs thousands of GPUs.

Legate couples a new form of programming shorthand with accelerated software libraries and an advanced runtime environment called Legion. It’s already being put to the test at U.S. national labs.
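As a rough illustration of the idea, and not code shown in the keynote: the array library built on Legate has been distributed as a drop-in replacement for NumPy, so a program written in ordinary NumPy style stays the same and only the import changes. The sketch below assumes that import-swap usage (the module name `cupynumeric` is the one used by recent releases) and falls back to plain NumPy when the library is not installed. The function `smooth` and its input are hypothetical.

```python
try:
    # Assumption: the Legate-based NumPy replacement is installed.
    import cupynumeric as np
except ImportError:
    # Fallback so the sketch still runs on a single CPU with plain NumPy.
    import numpy as np


def smooth(values, steps):
    """Repeatedly replace each interior point with the mean of itself and its two neighbours."""
    x = np.array(values, dtype=np.float64)
    for _ in range(steps):
        # The right-hand side is evaluated in full before being assigned.
        x[1:-1] = (x[:-2] + x[1:-1] + x[2:]) / 3.0
    return x


result = smooth([0.0, 0.0, 9.0, 0.0, 0.0], steps=1)
print(result)
```

The body of `smooth` never mentions GPUs or how data is partitioned. In this style of programming those decisions are left to the library and its runtime.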

Rendering a Vivid Future

The three research projects make up just one part of Dally’s keynote, which describes NVIDIA’s domain-specific platforms for a variety of industries such as healthcare, self-driving cars and robotics. He also delves into data science, AI and graphics.

“In a few generations our products will produce amazing images in real time using path tracing with physically based rendering, and we’ll be able to generate whole scenes with AI,” said Dally.

He showed the first public demonstration that combines NVIDIA’s conversational AI framework called Jarvis with GauGAN, a tool that uses generative adversarial networks to create beautiful landscapes from simple sketches. The demo lets users instantly generate photorealistic landscapes using simple voice commands.

In an interview between recording sessions for the keynote, Dally expressed particular pride in the team’s pioneering work in several areas.

“All our current ray tracing started in NVIDIA Research with prototypes that got our product teams excited. And in 2011, I assigned [NVIDIA researcher] Bryan Catanzaro to work with [Stanford professor] Andrew Ng on a project that became cuDNN, software that kicked off much of our work in deep learning,” he said.

A First Foothold in Networking

Dally also spearheaded a collaboration that led to the first prototypes of NVLink and NVSwitch, interconnects that link GPUs running inside some of the world’s largest supercomputers today.

“The product teams grabbed the work out of our hands before we were ready to let go of it, and now we’re considered one of the most advanced networking companies,” he said.

With his passion for technology, Dally said he often feels like a kid in a candy store. He may hop from helping a group with an AI accelerator one day to helping another team sort through a complex problem in robotics the next.

“I have one of the most fun jobs in the company if not in the world because I get to help shape the future,” he said.

The keynote is just one of more than 220 sessions at GTC China. All the sessions are free and most are conducted in Mandarin.

Panel, Startup Showcase at GTC China

Following the keynote, a panel of senior NVIDIA executives will discuss how the company’s technologies in AI, data science, healthcare and other fields are being adopted in China.

The event also includes a showcase of a dozen top startups in China, hosted by NVIDIA Inception, an acceleration program for AI and data science startups.

Companies participating in GTC China include Alibaba, AWS, Baidu, ByteDance, China Telecom, Dell Technologies, Didi, H3C, Inspur, Kuaishou, Lenovo, Microsoft, Ping An, Tencent, Tsinghua University and Xiaomi.

The post NVIDIA Chief Scientist Highlights New AI Research in GTC Keynote appeared first on The Official NVIDIA Blog.