Human thumb next to our OmniTact sensor, and a US penny for scale.

Touch has been shown to be important for dexterous manipulation in

robotics. Recently, the GelSight sensor has caught significant interest

for learning-based robotics due to its low cost and rich signal. For example,

GelSight sensors have been used for learning to insert USB cables (Li et al.

2014), rolling a die (Tian et al. 2019) or grasping objects (Calandra

et al. 2017).

Learning-based methods work well with GelSight sensors because the sensors

output high-resolution tactile images from which a variety of features, such as

object geometry, surface texture, and normal and shear forces, can be estimated.

These features often prove critical to robotic control. The tactile images

can be fed into standard CNN-based computer vision pipelines, allowing the use

of a variety of learning-based techniques. In Calandra et al. 2017, a

grasp-success classifier is trained on GelSight data collected in a

self-supervised manner. In Tian et al. 2019, Visual Foresight, a

video-prediction-based control algorithm, is used to make a robot roll a die

purely based on tactile images. In Lambeta et al. 2020, a model-based RL

algorithm is applied to in-hand manipulation using GelSight images.
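
To make the idea of feeding a tactile image through a CNN-style pipeline concrete, here is a minimal sketch in plain Python. It is not the network from any of the papers cited above: it assumes a single convolution layer followed by a ReLU, global average pooling, and a logistic output. The toy image, the kernel, and the output weights are hand-picked and hypothetical, and all function names are illustrative.

```python
import math


def conv2d_valid(image, kernel):
    """2-D cross-correlation without padding, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def grasp_success_probability(image, kernel, weight, bias):
    """Conv -> ReLU -> global average pool -> logistic output."""
    feature_map = conv2d_valid(image, kernel)
    activations = [max(0.0, v) for row in feature_map for v in row]
    pooled = sum(activations) / len(activations)
    return 1.0 / (1.0 + math.exp(-(weight * pooled + bias)))


# Toy 5x5 grayscale "tactile image": a bright vertical ridge where an object
# presses into the gel (hypothetical data, not a real sensor reading).
tactile = [[0.0, 0.0, 1.0, 0.0, 0.0] for _ in range(5)]

# Hand-picked, untrained vertical-edge kernel and output weights (assumptions).
edge_kernel = [[-1.0, 0.0, 1.0]] * 3
p = grasp_success_probability(tactile, edge_kernel, weight=2.0, bias=-1.0)
print(f"predicted grasp-success probability: {p:.3f}")
```

In a real pipeline, the kernel and output weights would be learned from labeled grasp attempts, and the input would be a full-resolution color tactile image rather than a 5×5 grid.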

Unfortunately, applying GelSight sensors in practical real-world scenarios is

still challenging due to their large size and the fact that they are only sensitive

on one side. Here we introduce a new, more compact tactile sensor design based

on GelSight that allows for omnidirectional sensing, i.e. making the sensor

sensitive on all sides like a human finger, and show how this opens up new

possibilities for sensorimotor learning. We demonstrate this by teaching a

robot to pick up electrical plugs and insert them purely based on tactile

feedback.

GelSight Sensors

A standard GelSight sensor, shown in the figure below on the left, uses an

off-the-shelf webcam to capture high-resolution images of deformations on the

silicone gel skin. The inside surface of the gel skin is illuminated with

colored LEDs, providing sufficient lighting for the tactile image.

Comparison of GelSight-style sensor (left side) to our OmniTact sensor (right

side).

Existing GelSight designs are either flat, have small sensitive fields or only

provide low-resolution signals. For example, prior versions of the GelSight

sensor provide high-resolution (400×400 pixel) images but are large and flat,

providing sensitivity on only one side, while the commercial OptoForce

sensor (recently discontinued by OnRobot) is curved, but only provides force

readings as a single 3-dimensional force vector.

The OmniTact Sensor

Our OmniTact sensor design aims to address these limitations. It provides both

multi-directional and high-resolution sensing on its curved surface in a

compact form factor. Similar to GelSight, OmniTact uses cameras embedded into

a silicone gel skin to capture deformation of the skin, providing a rich signal

from which a wide range of features such as shear and normal forces, object

pose, geometry and material properties can be inferred. OmniTact uses multiple

cameras giving it both high-resolution and multi-directional capabilities. The

sensor itself can be used as a “finger” and can be integrated into a gripper or

robotic hand. It is more compact than previous GelSight sensors, which is

accomplished by utilizing micro-cameras typically used in endoscopes, and by

casting the silicone gel directly onto the cameras. Tactile images from

OmniTact are shown in the figures below.

Tactile readings from OmniTact with various objects. From left to right: M3

Screw Head, M3 Screw Threads, Combination Lock with numbers 4 3 9, Printed

Circuit Board (PCB), Wireless Mouse USB. All images are taken from the

upward-facing camera.

Tactile readings from the OmniTact being rolled over a gear rack. The

multi-directional capabilities of OmniTact keep the gear rack in view as the

sensor is rotated.

Design Highlights

One of our primary goals throughout the design process was to make OmniTact as

compact as possible. To accomplish this goal, we used micro-cameras with large

viewing angles and a small focus distance. Specifically, we picked cameras that

are commonly used in medical endoscopes, measuring just 1.35 × 1.35 × 5 mm in

size with a focus distance of 5 mm. These cameras were arranged in a 3D-printed

camera mount, as shown in the figure below, which allowed us to minimize blind

spots on the surface of the sensor and reduce the diameter (D) of the sensor to

30 mm.

This image shows the fields of view and arrangement of the 5 micro-cameras

inside the sensor. With this arrangement, most of the fingertip can effectively

be made sensitive. In the vertical plane, shown in A, we obtain

$\alpha=270$ degrees of sensitivity. In the horizontal plane, shown in B, we

obtain 360 degrees of sensitivity, except for small blind spots between the

fields of view.
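
The relationship between camera fields of view and blind spots in the horizontal plane can be illustrated with a little arithmetic. The sketch below is a simplification under stated assumptions: it hypothetically places four side cameras evenly around the finger axis, treats every camera as looking radially outward from that axis, and uses a placeholder field-of-view value that does not come from this post. It also ignores the distance between the cameras and the gel surface, so it only shows the trend, not the sensor's actual coverage.

```python
def horizontal_coverage(num_cameras, fov_deg):
    """Return (covered_deg, gap_deg) for cameras spaced evenly around a circle.

    Each camera is assumed to look radially outward and to see a wedge of
    fov_deg degrees centred on its viewing direction.
    """
    if num_cameras <= 0:
        raise ValueError("num_cameras must be positive")
    spacing = 360.0 / num_cameras        # angle between neighbouring cameras
    gap = max(0.0, spacing - fov_deg)    # blind wedge between two neighbours
    covered = 360.0 - num_cameras * gap  # total angle seen by at least one camera
    return covered, gap


# Hypothetical values: 4 side cameras, each with an 80-degree horizontal field of view.
covered, gap = horizontal_coverage(num_cameras=4, fov_deg=80.0)
print(f"covered: {covered:.0f} deg, blind wedge between cameras: {gap:.0f} deg")
```

Under these assumptions, the blind wedges vanish once each camera's field of view reaches the angular spacing between neighbouring cameras, which is why wide-angle cameras help keep blind spots small.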

Electrical Connector Insertion Task

We show that OmniTact’s multi-directional tactile sensing capabilities can be

leveraged to solve a challenging robotic control problem: inserting an

electrical connector blindly into a wall outlet purely based on information

from the multi-directional touch sensor (shown in the figure below). This task

is challenging since it requires localizing the electrical connector relative

to the gripper and localizing the gripper relative to the wall outlet.

To learn the insertion task, we used a simple imitation learning algorithm that

estimates the end-effector displacement required for inserting the plug into

the outlet based on the tactile images from the OmniTact sensor. Our model was

trained on just 100 demonstrations of insertion, collected by controlling the

robot via keyboard. Successful insertions obtained by running the trained

policy are shown in the gifs below.

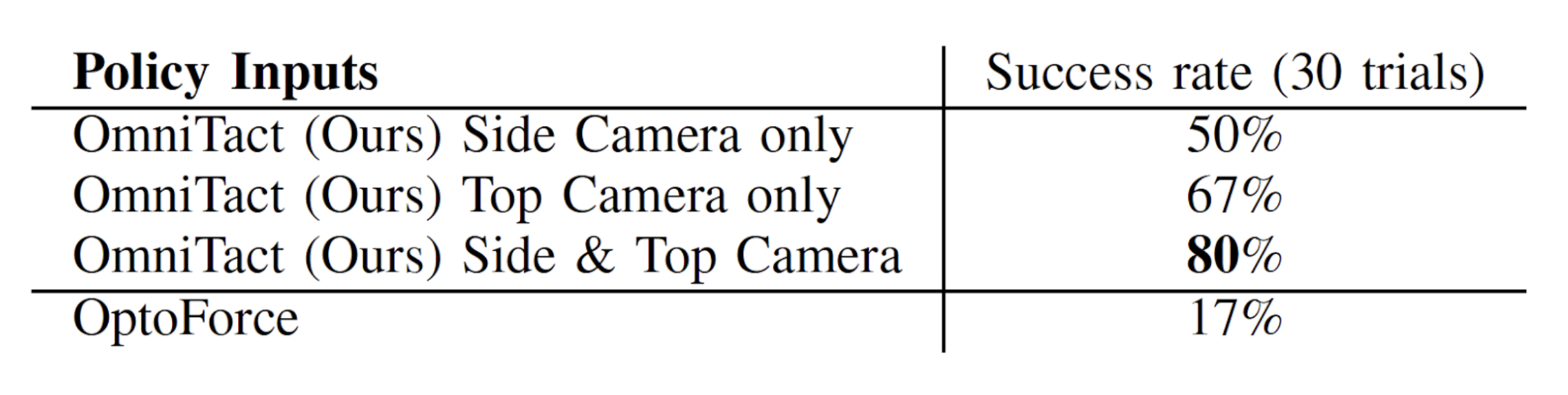
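
The idea of regressing an end-effector displacement from tactile input can be sketched as a small behavioral-cloning example. This is not the model used for OmniTact: it swaps in a linear regressor trained by stochastic gradient descent on synthetic, low-dimensional "tactile features", where a real system would use a learned image model on camera frames. The function names (`make_demos`, `train_linear_policy`, `predict`), the feature dimension, and all data are hypothetical.

```python
import random


def make_demos(num_demos, num_features, true_weights, rng):
    """Create synthetic (features, displacement) pairs standing in for demonstrations."""
    demos = []
    for _ in range(num_demos):
        x = [rng.uniform(-1.0, 1.0) for _ in range(num_features)]
        # The demonstrated displacement (dx, dy, dz) is a fixed linear function of x.
        y = [sum(w * xi for w, xi in zip(row, x)) for row in true_weights]
        demos.append((x, y))
    return demos


def predict(weights, x):
    """Map a feature vector to a predicted 3-D end-effector displacement."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def train_linear_policy(demos, num_features, lr=0.1, epochs=200):
    """Fit displacement = W x by stochastic gradient descent on squared error."""
    weights = [[0.0] * num_features for _ in range(3)]
    for _ in range(epochs):
        for x, y in demos:
            pred = predict(weights, x)
            for k in range(3):
                err = pred[k] - y[k]
                for j in range(num_features):
                    weights[k][j] -= lr * err * x[j]
    return weights


rng = random.Random(0)
# Hypothetical ground-truth mapping from 4 tactile features to (dx, dy, dz).
true_w = [[0.5, -0.2, 0.0, 0.1], [0.0, 0.3, -0.4, 0.0], [0.2, 0.0, 0.0, -0.6]]
demos = make_demos(num_demos=100, num_features=4, true_weights=true_w, rng=rng)
policy = train_linear_policy(demos, num_features=4)

query = [0.2, -0.5, 0.1, 0.8]
print("predicted displacement:", [round(v, 3) for v in predict(policy, query)])
print("target displacement:   ", [round(v, 3) for v in predict(true_w, query)])
```

At run time, a policy of this kind would be queried with the current tactile reading and the robot would move by the predicted displacement, repeating until the plug is inserted.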

As shown in the table below, using the multi-directional capabilities (both the

top and side camera) of our sensor allowed for the highest success rate (80%)

in comparison to using just one camera from the sensor, indicating that

multi-directional touch sensing is indeed crucial for solving this task. We

additionally compared performance with another multi-directional tactile

sensor, the OptoForce sensor, which only had a success rate of 17%.

What’s Next?

We believe that compact, high-resolution, and multi-directional touch sensing

has the potential to transform the capabilities of current robotic manipulation

systems. We suspect that multi-directional tactile sensing could be an

essential element in general-purpose robotic manipulation in addition to

applications such as robotic teleoperation in surgery, as well as in sea and

space missions. In the future, we plan to make OmniTact cheaper and more

compact, allowing it to be used in a wider range of tasks. Our team

additionally plans to conduct more robotic manipulation research that will

inform future generations of tactile sensors.

This blog post is based on the following paper, which will be presented at the

International Conference on Robotics and Automation 2020:

- OmniTact: A Multi-Directional High-Resolution Touch Sensor

Akhil Padmanabha, Frederik Ebert, Stephen Tian, Roberto Calandra, Chelsea Finn, Sergey Levine

Paper Link: https://arxiv.org/abs/2003.06965

Research Website: https://sites.google.com/berkeley.edu/omnitact/home

We would like to thank Professor Sergey Levine, Professor Chelsea Finn, and

Stephen Tian for their valuable feedback when preparing this blog post.