Autonomous vehicle simulation poses two challenges: generating a world with enough detail and realism that the AI driver perceives the simulation as real, and creating simulations at a large enough scale to cover all the cases on which the AI driver needs to be fully trained and tested.

To address these challenges, NVIDIA researchers have created new AI-based tools to build simulations directly from real-world data. NVIDIA founder and CEO Jensen Huang previewed the breakthrough during the GTC keynote.

This research includes award-winning work first published at SIGGRAPH, a computer graphics conference held last month.

Neural Reconstruction Engine

The Neural Reconstruction Engine is a new AI toolset for the NVIDIA DRIVE Sim simulation platform that uses multiple AI networks to turn recorded video data into simulation scenes.

The new pipeline uses AI to automatically extract the key components needed for simulation, including the environment, 3D assets and scenarios. These pieces are then reconstructed into simulation scenes that have the realism of data recordings but are fully reactive and can be manipulated as needed. Achieving this level of detail and diversity by hand is costly, time-consuming and not scalable.

Environments and Assets

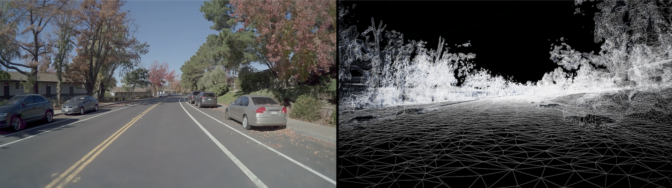

A simulation needs an environment in which to operate. The AI pipeline converts 2D video data from a real-world drive into a dynamic 3D digital twin environment that can be loaded into DRIVE Sim.

The DRIVE Sim AI pipeline follows a similar process to reconstruct other 3D assets. Engineers can use the assets to reconstruct the current scene or place them in a larger library of assets to be used in any simulation.

Using the asset-harvesting pipeline is key to growing the DRIVE Sim library and ensuring it matches the diversity and distribution of the real world.

Scenarios

Scenarios are the events that take place during a simulation, in an environment populated with assets.

The Neural Reconstruction Engine assigns AI-based behaviors to the actors in the scene, so that when presented with the original events, they behave exactly as they did in the real drive. Because each actor has an AI behavior model, however, it can also react to changes made by the AV or by other scene elements.
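To make the replay-then-react idea concrete, here is a minimal sketch in Python. It does not show NVIDIA's AI behavior model; it swaps in a simple rule-based stand-in, and the function name `reactive_speed` and its gap thresholds are hypothetical. The actor follows its recorded speed while nothing comes closer than a minimum gap, so an unedited scene replays exactly, and it slows down only when an edit (here, the AV cutting in) shrinks that gap.

```python
def reactive_speed(recorded_speed_mps: float, gap_m: float,
                   min_gap_m: float = 10.0, stop_gap_m: float = 2.0) -> float:
    """Hypothetical rule-based stand-in for a learned behavior model.

    Returns the recorded speed while the gap ahead is at least min_gap_m;
    below that, scales the speed down linearly, reaching zero at stop_gap_m.
    """
    if gap_m >= min_gap_m:
        return recorded_speed_mps          # unchanged scene: reproduce the real drive
    if gap_m <= stop_gap_m:
        return 0.0                         # too close: stop
    scale = (gap_m - stop_gap_m) / (min_gap_m - stop_gap_m)
    return recorded_speed_mps * scale      # partial slowdown in between


# Speeds logged for one actor in the real drive (values made up for the example).
recorded_speeds = [12.0, 12.0, 11.0, 10.0]
original_gaps = [40.0, 35.0, 30.0, 28.0]   # original scene: nothing near the actor
edited_gaps = [40.0, 12.0, 6.0, 3.0]       # edited scene: the AV cuts in ahead

replayed = [reactive_speed(v, g) for v, g in zip(recorded_speeds, original_gaps)]
reacted = [reactive_speed(v, g) for v, g in zip(recorded_speeds, edited_gaps)]
print("replayed:", replayed)
print("reacted: ", reacted)
```

With the original gaps the actor reproduces the recording exactly; with the edited gaps it slows to 5.5 m/s and then 1.25 m/s as the AV gets closer.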

Because these scenarios all occur in simulation, they can also be manipulated to add new situations. The timing and location of events can be altered. Developers can even incorporate entirely new elements, synthetic or real, to make a scenario more challenging, such as adding a child chasing a ball to the scene below.
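The kinds of edits described here (shifting an event in time, moving it in space, inserting a new element) can be sketched as operations on a list of events. This is a hypothetical Python illustration under the assumption that a scenario reduces to timestamped, positioned actor events; `ScenarioEvent`, `shift_timing`, `relocate` and `add_event` are names invented for the example and are not the DRIVE Sim scenario format or API.

```python
from dataclasses import dataclass, replace

# Hypothetical scenario representation for illustration; not the DRIVE Sim format.
@dataclass(frozen=True)
class ScenarioEvent:
    actor: str                     # e.g. "pedestrian_1"
    action: str                    # e.g. "cross_street"
    time_s: float                  # start time, seconds from scenario start
    position: tuple[float, float]  # (x, y) in meters, scene coordinates


def shift_timing(events, actor, delta_s):
    """Return a new event list with every event of `actor` moved by delta_s seconds."""
    return [replace(e, time_s=e.time_s + delta_s) if e.actor == actor else e
            for e in events]


def relocate(events, actor, dx, dy):
    """Return a new event list with every event of `actor` moved by (dx, dy) meters."""
    return [replace(e, position=(e.position[0] + dx, e.position[1] + dy))
            if e.actor == actor else e
            for e in events]


def add_event(events, new_event):
    """Insert a new (synthetic or real) event, keeping the list in time order."""
    return sorted(events + [new_event], key=lambda e: e.time_s)


# Events extracted from a recorded drive (values made up for the example).
recorded = [
    ScenarioEvent("lead_car", "brake", 4.0, (30.0, 0.0)),
    ScenarioEvent("pedestrian_1", "cross_street", 6.5, (45.0, -3.0)),
]

edited = shift_timing(recorded, "lead_car", -1.5)    # lead car brakes 1.5 s earlier
edited = relocate(edited, "pedestrian_1", 0.0, 2.0)  # pedestrian shifted 2 m in y
edited = add_event(edited, ScenarioEvent("child_1", "chase_ball", 5.0, (40.0, 4.0)))

for e in edited:
    print(f"{e.time_s:4.1f}s  {e.actor:<13} {e.action}")
```

Because the events are immutable and every edit returns a new list, the original recorded scenario stays available as a baseline to compare the edited variants against.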

Integration Into DRIVE Sim

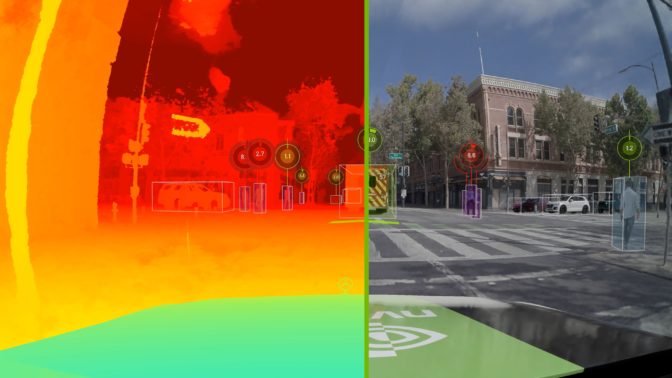

Once the environment, assets and scenario have been extracted, they’re reassembled in DRIVE Sim to create a 3D simulation of the recorded scene or mixed with other assets to create a completely new scene.

DRIVE Sim provides the tools for developers to adjust dynamic and static objects, the vehicle’s path, and the location, orientation and parameters of the vehicle sensors.

The same scenes in DRIVE Sim are also used to generate pre-labeled synthetic data to train perception systems. Randomization is applied on top of the recreated scenes to add diversity to the training data. Building scenes out of real-world data greatly reduces the sim-to-real gap, the mismatch between synthetic training data and what sensors capture on real roads.
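Applying randomization on top of a recreated scene is a form of domain randomization. The Python sketch below is a hypothetical illustration, not DRIVE Sim's implementation: `SceneParams`, the parameter ranges, the color list and the scene ID are all assumptions. It keeps the reconstructed scene fixed and varies only appearance parameters, so in this simplified model labels derived from the scene's known geometry would carry over to every variant.

```python
import random
from dataclasses import dataclass, replace

# Hypothetical scene parameters; a real simulator exposes far richer controls.
@dataclass(frozen=True)
class SceneParams:
    scene_id: str             # identifies the reconstructed scene (geometry, actors)
    sun_elevation_deg: float
    fog_density: float        # 0.0 = clear, 1.0 = dense
    road_wetness: float       # 0.0 = dry, 1.0 = flooded
    vehicle_color: str

# Assumed sampling ranges, for illustration only.
RANGES = {
    "sun_elevation_deg": (5.0, 75.0),
    "fog_density": (0.0, 0.4),
    "road_wetness": (0.0, 1.0),
}
COLORS = ["white", "black", "silver", "red", "blue"]


def randomize(base: SceneParams, n: int, seed: int = 0) -> list[SceneParams]:
    """Return n variants of a reconstructed scene.

    scene_id is kept, so the underlying geometry and actors are unchanged;
    only the appearance parameters are resampled.
    """
    rng = random.Random(seed)  # seeded so the same dataset can be regenerated
    variants = []
    for _ in range(n):
        variants.append(replace(
            base,
            sun_elevation_deg=rng.uniform(*RANGES["sun_elevation_deg"]),
            fog_density=rng.uniform(*RANGES["fog_density"]),
            road_wetness=rng.uniform(*RANGES["road_wetness"]),
            vehicle_color=rng.choice(COLORS),
        ))
    return variants


# "recorded_drive_0042" is a made-up ID standing in for a reconstructed scene.
base = SceneParams("recorded_drive_0042", 45.0, 0.0, 0.0, "white")
variants = randomize(base, n=4, seed=7)
for v in variants:
    print(v)
```

Seeding a dedicated `random.Random` instance, rather than the global generator, keeps each generated dataset reproducible without affecting other code that uses randomness.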

The ability to mix and match simulation formats is a significant advantage in comprehensively testing and validating AVs at scale. Engineers can manipulate events in a world that is responsive and matches their needs precisely.

The Neural Reconstruction Engine is the result of work by the research team at NVIDIA and will be integrated into future releases of DRIVE Sim. This breakthrough will enable developers to take advantage of both physics-based and neural-driven simulation on the same cloud-based platform.

The post Reconstructing the Real World in DRIVE Sim With AI appeared first on NVIDIA Blog.