Ever queried a recommender system and found that the same search, only a few moments later or on a different device, yields very different results? This is not uncommon and can be frustrating if a person is looking for something specific. For a designer of such a system, it is also not uncommon for measured metrics to change between design, testing, and deployment, bringing into question the utility of the experimental testing phase. Some level of such irreproducibility can be expected as the world changes and new models are deployed. However, it also happens regularly when requests hit duplicates of the same model or when models are refreshed.

Lack of replicability, where researchers are unable to reproduce published results with a given model, has been identified as a challenge in the field of machine learning (ML). Irreproducibility is a related but more elusive problem, where multiple instances of a given model are trained on the same data under identical training conditions, but yield different results. Only recently has irreproducibility been identified as a difficult problem, but due to its complexity, theoretical studies to understand this problem are extremely rare.

In practice, deep network models are trained in highly parallelized and distributed environments. Nondeterminism in training, arising from random initialization, parallelism, distributed training, data shuffling, quantization errors, hardware types, and more, combined with objectives that have multiple local optima, contributes to the problem of irreproducibility. Some of these factors, such as initialization, can be controlled, but it is impractical to control others. Because optimization follows training examples in the order they are seen, trajectories can diverge early in training, leading to very different models. Several recently published solutions [1, 2, 3] based on advanced combinations of ensembling, self-ensembling, and distillation can mitigate the problem, but usually at the cost of accuracy and with increased complexity, maintenance, and improvement costs.

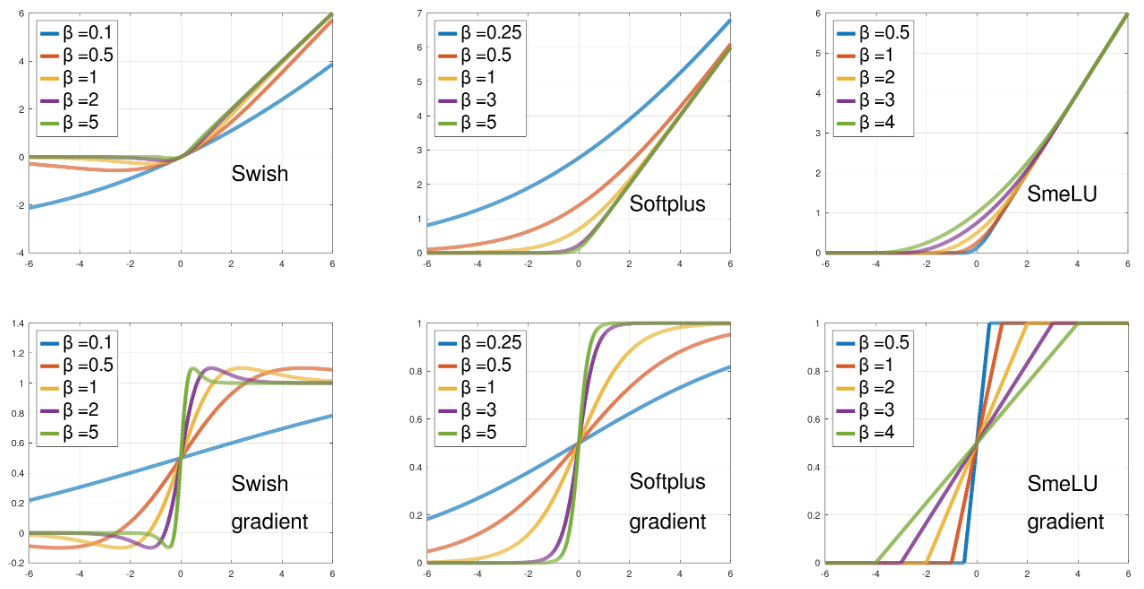

In “Real World Large Scale Recommendation Systems Reproducibility and Smooth Activations”, we consider a different practical solution to this problem that does not incur the costs of other solutions, while still improving reproducibility and yielding higher model accuracy. We discover that the Rectified Linear Unit (ReLU), which is very popular as the nonlinearity function (i.e., activation function) used to transform values in neural networks, exacerbates the irreproducibility problem. On the other hand, we demonstrate that smooth activation functions, which have derivatives that are continuous for the whole domain, unlike those of ReLU, are able to substantially reduce irreproducibility levels. We then propose the Smooth reLU (SmeLU) activation function, which gives comparable reproducibility and accuracy benefits to other smooth activations but is much simpler.

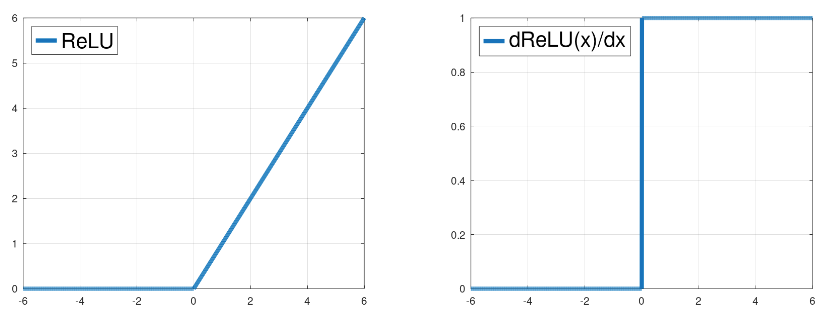

The ReLU function (left) as a function of the input signal, and its gradient (right) as a function of the input.

Smooth Activations

An ML model attempts to learn the best model parameters that fit the training data by minimizing a loss, which can be imagined as a landscape with peaks and valleys, where the lowest point attains an optimal solution. For deep models, the landscape may consist of many such peaks and valleys. The activation function used by the model governs the shape of this landscape and how the model navigates it.

ReLU, which is not a smooth function, imposes an objective whose landscape is partitioned into many regions with multiple local minima, each providing different model predictions. With this landscape, the order in which updates are applied is a dominant factor in determining the optimization trajectory, providing a recipe for irreproducibility. Because the gradient of ReLU is discontinuous, functions expressed by a ReLU network contain sudden jumps in the gradient. These jumps can occur internally in different layers of the deep network, affect the updates of different internal units, and are likely strong contributors to irreproducibility.

Suppose a sequence of model updates attempts to push the activation of some unit down from a positive value. The gradient of the ReLU function is 1 for positive unit values, so each update pushes the unit's value lower (to the left in the panel above). At the point where the value of this unit crosses the threshold from positive to negative, the gradient suddenly changes from magnitude 1 to magnitude 0. Training attempts to keep moving the unit leftwards, but because the gradient is 0, the unit cannot move further in that direction. Therefore, the model must resort to updating other units that can move.
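The stalling behavior described above can be reproduced in a toy setting. The following is a minimal sketch, not our training setup: it assumes a single scalar unit whose own ReLU output is the loss being minimized, and the names `push_down`, `lr`, and `steps` are chosen here purely for illustration.

```python
def relu_grad(x: float) -> float:
    """Gradient of ReLU; the value at exactly 0 is taken as 0 (a common convention)."""
    return 1.0 if x > 0.0 else 0.0


def push_down(x0: float, lr: float, steps: int) -> list[float]:
    """Plain gradient descent on the toy loss L(x) = relu(x), recording x after each step."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        x = x - lr * relu_grad(x)  # step size is lr while x > 0, then exactly 0
        xs.append(x)
    return xs


trajectory = push_down(x0=0.5, lr=0.2, steps=6)
for step, x in enumerate(trajectory):
    print(f"step {step}: x = {x:+.2f}, gradient = {relu_grad(x):.0f}")
```

In this sketch the unit moves left by a constant amount while it is positive, then freezes on the first step after it crosses zero, because the gradient drops from 1 to 0 with no transition in between.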

We find that networks with smooth activations (e.g., GELU, Swish and Softplus) can be substantially more reproducible. They may exhibit a similar objective landscape, but with fewer regions, giving a model fewer opportunities to diverge. Unlike the sudden jumps with ReLU, for a unit with decreasing activations, the gradient gradually reduces to 0, which gives other units opportunities to adjust to the changing behavior. With equal initialization, moderate shuffling of training examples, and normalization of hidden layer outputs, smooth activations are able to increase the chances of converging to the same minimum. Very aggressive data shuffling, however, loses this advantage.
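To make the contrast with ReLU concrete, the sketch below tabulates gradients of ReLU, Softplus, and Swish as the input decreases. It uses the standard definitions Softplus(x) = log(1 + e^x) and Swish(x) = x * sigmoid(x); the evaluation points are arbitrary and chosen only for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu_grad(x: float) -> float:
    return 1.0 if x > 0.0 else 0.0


def softplus_grad(x: float) -> float:
    # d/dx log(1 + e^x) = sigmoid(x)
    return sigmoid(x)


def swish_grad(x: float) -> float:
    # d/dx [x * sigmoid(x)] = s + x * s * (1 - s), with s = sigmoid(x)
    s = sigmoid(x)
    return s + x * s * (1.0 - s)


points = [2.0, 1.0, 0.5, 0.0, -0.5, -1.0, -2.0, -4.0]
for x in points:
    print(
        f"x={x:5.1f}  relu'={relu_grad(x):.1f}  "
        f"softplus'={softplus_grad(x):.3f}  swish'={swish_grad(x):.3f}"
    )
```

The ReLU column switches from 1 to 0 in a single step, while the Softplus gradient shrinks gradually. The Swish gradient also changes continuously, although it dips slightly below 0 for moderately negative inputs before returning toward 0, which reflects the fact that Swish is not monotonic.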

The rate at which a smooth activation function transitions between output levels, i.e., its “smoothness”, can be adjusted. Sufficient smoothness leads to improved accuracy and reproducibility. Too much smoothness, though, makes the network approach a linear model, with a corresponding degradation of model accuracy, thus losing the advantages of using a deep network.

Smooth reLU (SmeLU)

Activations like GELU and Swish require complex hardware implementations to support exponential and logarithmic functions. Further, GELU must be computed numerically or approximated. These properties can make deployment error-prone, expensive, or slow. GELU and Swish are not monotonic (they start by slightly decreasing and then switch to increasing), which may interfere with interpretability (or identifiability), nor do they have a full stop or a clean slope 1 region, properties that simplify implementation and may aid in reproducibility.

The Smooth reLU (SmeLU) activation function is designed as a simple function that addresses the concerns with other smooth activations. It connects a 0 slope on the left with a slope 1 line on the right through a quadratic middle region, with the gradient constrained to be continuous at the connection points (an asymmetric version of a Huber loss function).
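One piecewise form that matches this description is sketched below. It assumes a half-width parameter, called `beta` here, so that the quadratic region spans [-beta, beta]: the output is 0 for x <= -beta, (x + beta)^2 / (4 * beta) in the middle, and x for x >= beta. The function and parameter names are illustrative and not taken from any particular library.

```python
def smelu(x: float, beta: float = 1.0) -> float:
    """Piecewise SmeLU sketch: flat, then quadratic on [-beta, beta], then identity."""
    if beta <= 0.0:
        raise ValueError("beta must be positive")
    if x <= -beta:
        return 0.0
    if x >= beta:
        return x
    return (x + beta) ** 2 / (4.0 * beta)


def smelu_grad(x: float, beta: float = 1.0) -> float:
    """Gradient of the sketch above: rises linearly from 0 to 1 across [-beta, beta]."""
    if beta <= 0.0:
        raise ValueError("beta must be positive")
    if x <= -beta:
        return 0.0
    if x >= beta:
        return 1.0
    return (x + beta) / (2.0 * beta)


for x in [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0]:
    print(f"x={x:5.1f}  smelu={smelu(x):.4f}  grad={smelu_grad(x):.4f}")
```

At x = -beta the quadratic piece evaluates to 0 with slope 0, and at x = beta it evaluates to beta with slope 1, so both the function and its gradient join the two linear pieces without a jump. Only comparisons, one addition, one multiplication, and one division are needed, with no exponentials or logarithms.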

SmeLU can be viewed as a convolution of ReLU with a box. It provides a cheap and simple smooth solution that is comparable in reproducibility-accuracy tradeoffs to more computationally expensive and complex smooth activations. The figure below illustrates the transition of the loss (objective) surface as we gradually transition from a non-smooth ReLU to a smoother SmeLU. A transition of width 0 is the basic ReLU function for which the loss objective has many local minima. As the transition region widens (SmeLU), the loss surface becomes smoother. If the transition is too wide, i.e., too smooth, the benefit of using a deep network wanes and we approach the linear model solution — the objective surface flattens, potentially losing the ability of the network to express much information.
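The convolution view can be checked numerically. The sketch below assumes the piecewise form with half-width `beta` (quadratic region on [-beta, beta]) and a box of width 2 * beta and unit area centered at 0; it compares that closed form against a midpoint-rule approximation of the convolution integral. The helper names and the number of integration cells are illustrative choices.

```python
def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def smelu(x: float, beta: float) -> float:
    """Assumed piecewise form: 0, then (x + beta)^2 / (4 * beta), then x."""
    if x <= -beta:
        return 0.0
    if x >= beta:
        return x
    return (x + beta) ** 2 / (4.0 * beta)


def relu_box_smoothed(x: float, beta: float, cells: int = 2000) -> float:
    """Midpoint-rule estimate of (1 / (2*beta)) * integral over t in [-beta, beta] of relu(x - t)."""
    width = 2.0 * beta / cells
    total = 0.0
    for i in range(cells):
        t = -beta + (i + 0.5) * width  # midpoint of the i-th cell
        total += relu(x - t)
    return total * width / (2.0 * beta)


beta = 1.0
for x in [-2.0, -0.5, 0.0, 0.3, 1.5]:
    print(f"x={x:5.1f}  closed form={smelu(x, beta):.6f}  box-smoothed={relu_box_smoothed(x, beta):.6f}")
```

The two columns agree up to integration error. Shrinking `beta` toward 0 narrows the box and recovers ReLU, while a very large `beta` stretches the quadratic region so that, over any fixed input range, the function is close to a straight line of slope 1/2.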


Performance

SmeLU has benefited multiple systems, specifically recommendation systems, increasing their reproducibility by reducing, for example, recommendation swap rates. While the use of SmeLU results in accuracy improvements over ReLU, it also replaces other costly methods to address irreproducibility, such as ensembles, which mitigate irreproducibility at the cost of accuracy. Moreover, replacing ensembles in sparse recommendation systems reduces the need for multiple lookups of model parameters that are needed to generate an inference for each of the ensemble components. This substantially improves training and inference efficiency.

To illustrate the benefits of smooth activations, we plot the relative prediction difference (PD) as a function of change in some loss for the different activations. We define relative PD as the ratio between the absolute difference in predictions of two models and their expected prediction, averaged over all evaluation examples. We have observed that in large scale systems, it is sufficient, and inexpensive, to consider only two models for very consistent results.
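As a rough illustration of this metric, the sketch below computes relative PD for two lists of per-example predictions. It interprets the "expected prediction" for an example as the mean of the two models' predictions on that example, which is an assumption of this sketch, and it assumes strictly positive predictions (for example, click probabilities). The function name and the sample numbers are hypothetical.

```python
def relative_pd(preds_a: list[float], preds_b: list[float]) -> float:
    """Average over examples of |a - b| divided by the mean of a and b (assumed positive)."""
    if len(preds_a) != len(preds_b):
        raise ValueError("both models must be evaluated on the same examples")
    if not preds_a:
        raise ValueError("at least one evaluation example is required")
    total = 0.0
    for a, b in zip(preds_a, preds_b):
        expected = 0.5 * (a + b)  # assumption: mean of the two models' predictions
        total += abs(a - b) / expected
    return total / len(preds_a)


# Hypothetical predictions from two retrainings of the same model on the same data.
model_1 = [0.10, 0.42, 0.05, 0.80]
model_2 = [0.12, 0.40, 0.05, 0.76]
print(f"relative PD = {relative_pd(model_1, model_2):.4f}")
```

A value of 0 means the two models agree on every example; larger values indicate that retraining the same model changes individual predictions by a larger fraction of their magnitude.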

The figure below shows curves on the PD-accuracy loss plane. For reproducibility, being lower on the curve is better, and for accuracy, being further to the left is better. Smooth activations can yield roughly a 50% reduction in PD relative to ReLU, while still potentially improving accuracy. SmeLU yields accuracy comparable to other smooth activations, but is more reproducible (lower PD) while still outperforming ReLU in accuracy.


Conclusion and Future Work

We demonstrated the problem of irreproducibility in real-world practical systems, and how it affects users as well as system and model designers. While this particular issue has been given very little attention in efforts to address the lack of replicability of research results, irreproducibility can be a critical problem. We demonstrated that a simple solution of using smooth activations can substantially reduce the problem without degrading other critical metrics like model accuracy. We also proposed a new smooth activation function, SmeLU, which has the added benefits of mathematical simplicity and ease of implementation, and can be cheaper and less error-prone than other smooth activations.

Understanding reproducibility, especially in deep networks, where objectives are not convex, is an open problem. An initial theoretical framework for the simpler convex case has recently been proposed, but more research is needed to gain an understanding of this problem that applies to practical systems that rely on deep networks.

Acknowledgements

We would like to thank Sergey Ioffe for early discussions about SmeLU; Lorenzo Coviello and Angel Yu for help in early adoptions of SmeLU; Shiv Venkataraman for sponsorship of the work; Claire Cui for discussion and support from the very beginning; Jeremiah Willcock, Tom Jablin, and Cliff Young for substantial implementation support; Yuyan Wang, Mahesh Sathiamoorthy, Myles Sussman, Li Wei, Kevin Regan, Steven Okamoto, Qiqi Yan, Todd Phillips, Ed Chi, Sunita Verna, and many many others for many discussions, and for integrations in many different systems; Matt Streeter and Yonghui Wu for feedback on the paper and this post; Tom Small for help with the illustrations in this post.