Despite recent progress in the field of medical artificial intelligence (AI), most existing models are narrow, single-task systems that require large quantities of labeled data to train. Moreover, these models cannot be easily reused in new clinical contexts, as they often require the collection, de-identification and annotation of site-specific data for every new deployment environment, which is both laborious and expensive. This problem of data-efficient generalization (a model’s ability to generalize to new settings using minimal new data) continues to be a key translational challenge for medical machine learning (ML) models and has, in turn, prevented their broad uptake in real-world healthcare settings.

The emergence of foundation models offers a significant opportunity to rethink development of medical AI to make it more performant, safer, and equitable. These models are trained using data at scale, often by self-supervised learning. This process results in generalist models that can rapidly be adapted to new tasks and environments with less need for supervised data. With foundation models, it may be possible to safely and efficiently deploy models across various clinical contexts and environments.

In “Robust and Efficient MEDical Imaging with Self-supervision” (REMEDIS), to be published in Nature Biomedical Engineering, we introduce a unified large-scale self-supervised learning framework for building foundation medical imaging models. This strategy combines large-scale supervised transfer learning with self-supervised learning and requires minimal task-specific customization. REMEDIS shows significant improvement in data-efficient generalization across medical imaging tasks and modalities, with a 3–100x reduction in the site-specific data needed to adapt models to new clinical contexts and environments. Building on this, we are excited to announce Medical AI Research Foundations (hosted by PhysioNet), an expansion of the public release of chest X-ray Foundations in 2022. Medical AI Research Foundations is a collection of open-source non-diagnostic models (starting with REMEDIS models), APIs, and resources to help researchers and developers accelerate medical AI research.

Large scale self-supervision for medical imaging

REMEDIS uses a combination of natural (non-medical) images and unlabeled medical images to develop strong medical imaging foundation models. Its pre-training strategy consists of two steps. The first involves supervised representation learning on a large-scale dataset of labeled natural images (pulled from ImageNet-21k or JFT) using the Big Transfer (BiT) method.

The second step involves intermediate self-supervised learning, which trains a model to learn medical data representations without requiring any labels. The specific approach used for pre-training and learning representations is SimCLR. The method works by maximizing agreement between differently augmented views of the same training example via a contrastive loss in a hidden layer of a feed-forward neural network with multilayer perceptron (MLP) outputs. However, REMEDIS is equally compatible with other contrastive self-supervised learning methods. This training method is well suited to healthcare environments, as many hospitals acquire raw data (images) as a routine practice. While processes would have to be implemented to make this data usable within models (e.g., patient consent prior to gathering the data, de-identification, etc.), the costly, time-consuming, and difficult task of labeling that data could be avoided using REMEDIS.
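To make the idea of "maximizing agreement between differently augmented views" concrete, here is a minimal sketch of the contrastive loss commonly associated with SimCLR (often called NT-Xent). It is an illustration in plain Python, not the REMEDIS training code: the function names and the temperature value of 0.1 are assumptions chosen for the example, and inputs are assumed to be non-zero projection vectors.

```python
import math


def l2_normalize(v):
    """Scale a non-zero vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def nt_xent_loss(z_a, z_b, temperature=0.1):
    """Contrastive loss over a batch of paired views.

    z_a[i] and z_b[i] are projections of two augmented views of example i.
    Each view must pick out its partner among all other 2N - 1 views.
    The temperature default is an illustrative assumption.
    """
    n = len(z_a)
    z = [l2_normalize(v) for v in list(z_a) + list(z_b)]  # 2N unit vectors

    def sim(i, k):
        return sum(a * b for a, b in zip(z[i], z[k])) / temperature

    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same example
        logits = [sim(i, k) for k in range(2 * n) if k != i]
        # Numerically stable log-sum-exp over all candidates except the view itself.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_denominator - sim(i, positive)
    return total / (2 * n)
```

The loss is small when the two views of each example are closer to each other than to any other example in the batch, which is why no labels are needed: the pairing itself is the supervision signal.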

REMEDIS leverages large-scale supervised learning using natural images and self-supervised learning using unlabeled medical data to create strong foundation models for medical imaging.

Given ML model parameter constraints, it is important that our proposed approach works when using both small and large model architecture sizes. To study this in detail, we considered two ResNet architectures with commonly used depth and width multipliers, ResNet-50 (1×) and ResNet-152 (2×) as the backbone encoder networks.
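As a rough illustration of what the depth and width multipliers mean, the sketch below computes per-stage block counts and channel widths for the two backbones. It assumes the standard bottleneck ResNet layout (block counts and 1× channel widths as in the usual ResNet-50 and ResNet-152 definitions) and that the width multiplier scales every stage's channel count; the `ResNetSpec` class is a hypothetical helper, not part of any released REMEDIS code.

```python
from dataclasses import dataclass

# Bottleneck blocks per stage in the standard ResNet definitions.
BLOCKS_PER_STAGE = {50: (3, 4, 6, 3), 152: (3, 8, 36, 3)}
# Output channels of each stage at 1x width.
BASE_STAGE_CHANNELS = (256, 512, 1024, 2048)


@dataclass(frozen=True)
class ResNetSpec:
    """Hypothetical description of a backbone by depth and width multiplier."""

    depth: int
    width_multiplier: int

    @property
    def blocks(self):
        return BLOCKS_PER_STAGE[self.depth]

    @property
    def stage_channels(self):
        # Assumption: the multiplier scales the channel count of every stage.
        return tuple(c * self.width_multiplier for c in BASE_STAGE_CHANNELS)

    @property
    def feature_dim(self):
        # Size of the pooled representation produced by the final stage.
        return self.stage_channels[-1]


SMALL = ResNetSpec(depth=50, width_multiplier=1)   # ResNet-50 (1x)
LARGE = ResNetSpec(depth=152, width_multiplier=2)  # ResNet-152 (2x)
```

Under these assumptions the larger backbone is both deeper (more blocks per stage) and wider (twice the channels per layer), so the pair brackets a useful range of model capacity.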

After pre-training, the model was fine-tuned using labeled task-specific medical data and evaluated for in-distribution task performance. In addition, to evaluate the data-efficient generalization, the model was also optionally fine-tuned using small amounts of out-of-distribution (OOD) data.
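The full sequence of stages described so far can be summarized as a small data structure. This is only a bookkeeping sketch: the `Stage` class and its field names are hypothetical, and the stage descriptions paraphrase the text above rather than any released configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """Hypothetical record describing one stage of the pipeline."""

    name: str
    data: str
    needs_labels: bool
    optional: bool = False


REMEDIS_STAGES = (
    Stage("supervised pre-training (BiT)", "natural images", needs_labels=True),
    Stage("self-supervised pre-training (SimCLR)", "medical images", needs_labels=False),
    Stage("task fine-tuning", "in-distribution medical data", needs_labels=True),
    Stage("OOD fine-tuning", "small amounts of out-of-distribution data",
          needs_labels=True, optional=True),
)


def label_free_stages(stages):
    """Return the names of stages that can run on unlabeled data."""
    return [s.name for s in stages if not s.needs_labels]


def medical_label_stages(stages):
    """Return the names of stages that consume labeled medical data."""
    return [s.name for s in stages if s.needs_labels and "medical" in s.data
            or s.needs_labels and "out-of-distribution" in s.data]
```

Laid out this way, it is easier to see that only the fine-tuning stages consume labeled medical data, and that the out-of-distribution stage is where data-efficient generalization is measured.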

Evaluation and results

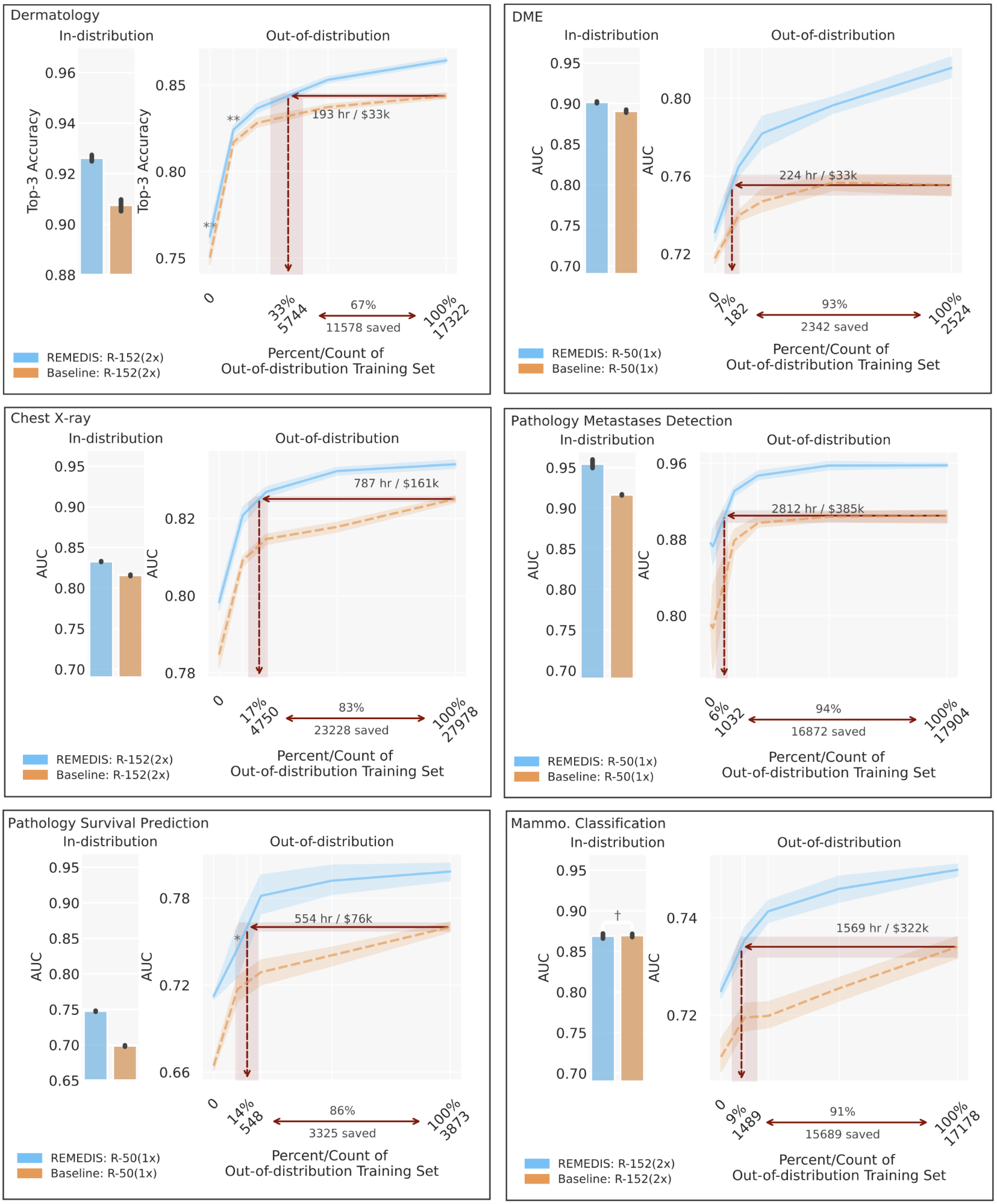

To evaluate the REMEDIS model’s performance, we simulate realistic scenarios using retrospective de-identified data across a broad range of medical imaging tasks and modalities, including dermatology, retinal imaging, chest X-ray interpretation, pathology and mammography. We further introduce the notion of data-efficient generalization, capturing the model’s ability to generalize to new deployment distributions with a significantly reduced need for expert annotated data from the new clinical setting. Data-efficient generalization is measured as (1) improvement in zero-shot generalization to OOD settings (assessing performance in an OOD evaluation set, with zero access to training data from the OOD dataset) and (2) significant reduction in the need for annotated data from the OOD settings to reach performance equivalent to clinical experts (or a threshold demonstrating clinical utility). REMEDIS also exhibits significantly improved in-distribution performance, with up to 11.5% relative improvement in diagnostic accuracy over a strong supervised baseline.

More importantly, our strategy leads to data-efficient generalization of medical imaging models: REMEDIS matches strong supervised baselines while requiring 3–100x less retraining data. While SimCLR is the primary self-supervised learning approach used in the study, we also show that REMEDIS is compatible with other approaches, such as MoCo-V2, RELIC and Barlow Twins. Furthermore, the approach works across model architecture sizes.
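The two headline quantities, relative improvement and the reduction in retraining data, are simple to compute once performance has been measured at several label budgets. The sketch below shows one way to do the arithmetic; the function names are hypothetical, and the numbers in the usage example are invented purely for illustration and are not results from the study.

```python
import math


def relative_improvement(baseline_score, model_score):
    """Improvement of the model over the baseline, as a fraction of the baseline."""
    return (model_score - baseline_score) / baseline_score


def labels_needed(curve, target):
    """Smallest label count in `curve` ({label_count: score}) reaching `target`."""
    reached = [n for n, score in sorted(curve.items()) if score >= target]
    return reached[0] if reached else None


def data_reduction_factor(baseline_curve, model_curve, target):
    """How many times fewer OOD labels the model needs to reach `target`.

    Returns None if either curve never reaches the target, and infinity
    if the model already reaches it zero-shot (with no OOD labels).
    """
    baseline_n = labels_needed(baseline_curve, target)
    model_n = labels_needed(model_curve, target)
    if baseline_n is None or model_n is None:
        return None
    if model_n == 0:
        return math.inf
    return baseline_n / model_n


if __name__ == "__main__":
    # Toy curves invented for illustration; NOT results from the study.
    toy_baseline = {0: 0.70, 100: 0.75, 1000: 0.80, 10000: 0.85}
    toy_model = {0: 0.78, 100: 0.85, 1000: 0.88, 10000: 0.90}
    print(data_reduction_factor(toy_baseline, toy_model, target=0.85))
    print(relative_improvement(toy_baseline[1000], toy_model[1000]))
```

Here the target plays the role of the clinical-expert or clinical-utility threshold: the reduction factor compares how many annotated OOD examples each model needs before it clears that bar.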

REMEDIS is compatible with MoCo-V2, RELIC and Barlow Twins as alternate self-supervised learning strategies. All the REMEDIS variants lead to data-efficient generalization improvements over the strong supervised baseline for dermatology condition classification (T1), diabetic macular edema classification (T2), and chest X-ray condition classification (T3). The gray shaded area indicates the performance of the strong supervised baseline pre-trained on JFT.

Medical AI Research Foundations

Building on REMEDIS, we are excited to announce Medical AI Research Foundations, an expansion of the public release of chest X-ray Foundations in 2022. Medical AI Research Foundations is a repository of open-source medical foundation models hosted by PhysioNet. This expands the previous API-based approach to also encompass non-diagnostic models, to help researchers and developers accelerate their medical AI research. We believe that REMEDIS and the release of the Medical AI Research Foundations are a step toward building medical models that can generalize across healthcare settings and tasks.

We are seeding Medical AI Research Foundations with REMEDIS models for chest X-ray and pathology (with related code). Whereas the existing chest X-ray Foundation approach focuses on providing frozen embeddings for application-specific fine-tuning from a model trained on several large private datasets, the REMEDIS models (trained on public datasets) enable users to fine-tune end-to-end for their application, and to run on local devices. We recommend that users test different approaches based on the needs of their application. We expect to add more models and resources for training medical foundation models, such as datasets and benchmarks, in the future. We also welcome contributions from the medical AI research community.

Conclusion

These results suggest that REMEDIS has the potential to significantly accelerate the development of ML systems for medical imaging, which can preserve their strong performance when deployed in a variety of changing contexts. We believe this is an important step forward for medical imaging AI to deliver a broad impact. Beyond the experimental results presented, the approach and insights described here have been integrated into several of Google’s medical imaging research projects, such as dermatology, mammography and radiology among others. We’re using a similar self-supervised learning approach with our non-imaging foundation model efforts, such as Med-PaLM and Med-PaLM 2.

With REMEDIS, we demonstrated the potential of foundation models for medical imaging applications. Such models hold exciting possibilities in medical applications with the opportunity of multimodal representation learning. The practice of medicine is inherently multimodal and incorporates information from images, electronic health records, sensors, wearables, genomics and more. We believe ML systems that leverage these data at scale using self-supervised learning with careful consideration of privacy, safety, fairness and ethics will help lay the groundwork for the next generation of learning health systems that scale world-class healthcare to everyone.

Acknowledgements

This work involved extensive collaborative efforts from a multidisciplinary team of researchers, software engineers, clinicians, and cross-functional contributors across Google Health AI and Google Brain. In particular, we would like to thank our first co-author Jan Freyberg and our lead senior authors of these projects, Vivek Natarajan, Alan Karthikesalingam, Mohammad Norouzi and Neil Houlsby for their invaluable contributions and support. We also thank Lauren Winer, Sami Lachgar, Yun Liu and Karan Singhal for their feedback on this post and Tom Small for support in creating the visuals. Finally, we also thank the PhysioNet team for their support on hosting Medical AI Research Foundations. Users with questions can reach out to medical-ai-research-foundations at google.com.