The International Conference on Learning Representations (ICLR) 2020 is being hosted virtually from April 26th – May 1st. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

Hierarchical Foresight: Self-Supervised Learning of Long-Horizon Tasks via Visual Subgoal Generation

paper

Suraj Nair, Chelsea Finn | contact: surajn@stanford.edu

keywords: visual planning; reinforcement learning; robotics

Active World Model Learning with Progress Curiosity

paper

Kuno Kim, Megumi Sano, Julian De Freitas, Nick Haber, Dan Yamins | contact: khkim@cs.stanford.edu

keywords: curiosity, reinforcement learning, cognitive science

Kaleidoscope: An Efficient, Learnable Representation For All Structured Linear Maps

paper | blog post

Tri Dao, Nimit Sohoni, Albert Gu, Matthew Eichhorn, Amit Blonder, Megan Leszczynski, Atri Rudra, Christopher Ré | contact: trid@stanford.edu

keywords: structured matrices, efficient ml, algorithms, butterfly matrices, arithmetic circuits

Weakly Supervised Disentanglement with Guarantees

paper

Rui Shu, Yining Chen, Abhishek Kumar, Stefano Ermon, Ben Poole | contact: ruishu@stanford.edu

keywords: disentanglement, generative models, weak supervision, representation learning, theory

Depth width tradeoffs for Relu networks via Sharkovsky’s theorem

paper

Vaggos Chatziafratis, Sai Ganesh Nagarajan, Ioannis Panageas, Xiao Wang | contact: vaggos@cs.stanford.edu

keywords: dynamical systems, benefits of depth, expressivity

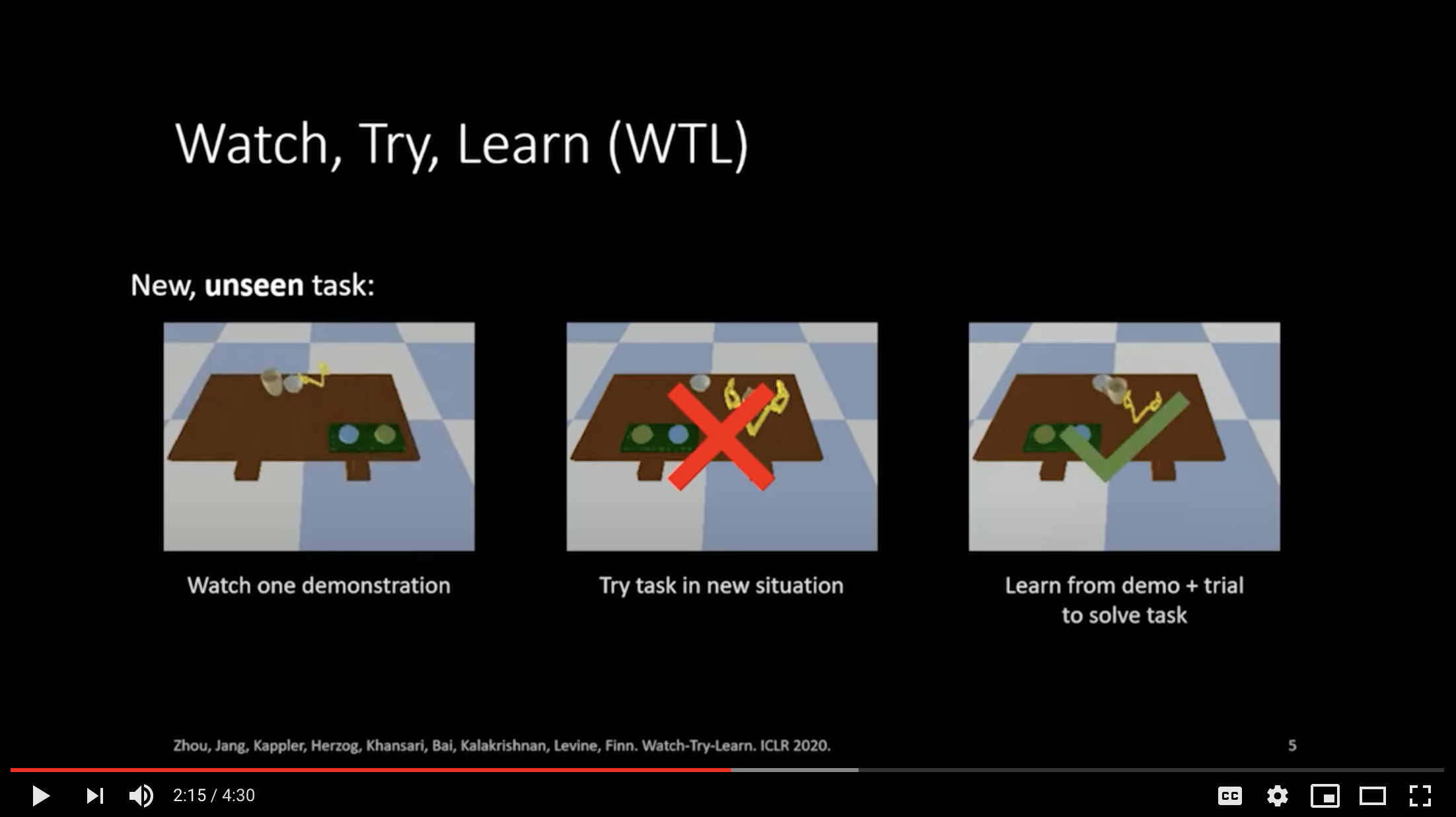

Watch, Try, Learn: Meta-Learning from Demonstrations and Reward

paper

Allan Zhou, Eric Jang, Daniel Kappler, Alex Herzog, Mohi Khansari, Paul Wohlhart, Yunfei Bai, Mrinal Kalakrishnan, Sergey Levine, Chelsea Finn | contact: ayz@stanford.edu

keywords: imitation learning, meta-learning, reinforcement learning

Assessing robustness to noise: low-cost head CT triage

paper

Sarah Hooper, Jared Dunnmon, Matthew Lungren, Sanjiv Sam Gambhir, Christopher Ré, Adam Wang, Bhavik Patel | contact: smhooper@stanford.edu

keywords: ai for affordable healthcare workshop, medical imaging, sinogram, ct, image noise

Learning transport cost from subset correspondence

paper

Ruishan Liu, Akshay Balsubramani, James Zou | contact: ruishan@stanford.edu

keywords: optimal transport, data alignment, metric learning

Generalization through Memorization: Nearest Neighbor Language Models

paper

Urvashi Khandelwal, Omer Levy, Dan Jurafsky, Luke Zettlemoyer, Mike Lewis | contact: urvashik@stanford.edu

keywords: language models, k-nearest neighbors

Distributionally Robust Neural Networks for Group Shifts: On the Importance of Regularization for Worst-Case Generalization

paper

Shiori Sagawa, Pang Wei Koh, Tatsunori B. Hashimoto, Percy Liang | contact: ssagawa@cs.stanford.edu

keywords: distributionally robust optimization, deep learning, robustness, generalization, regularization

Phase Transitions for the Information Bottleneck in Representation Learning

paper

Tailin Wu, Ian Fischer | contact: tailin@cs.stanford.edu

keywords: information theory, representation learning, phase transition

Improving Neural Language Generation with Spectrum Control

paper

Lingxiao Wang, Jing Huang, Kevin Huang, Ziniu Hu, Guangtao Wang, Quanquan Gu | contact: jhuang18@stanford.edu

keywords: neural language generation, pre-trained language model, spectrum control

Understanding and Improving Information Transfer in Multi-Task Learning

paper | blog post

Sen Wu, Hongyang Zhang, Christopher Ré | contact: senwu@cs.stanford.edu

keywords: multi-task learning

Strategies for Pre-training Graph Neural Networks

paper | blog post

Weihua Hu, Bowen Liu, Joseph Gomes, Marinka Zitnik, Percy Liang, Vijay Pande, Jure Leskovec | contact: weihuahu@cs.stanford.edu

keywords: pre-training, transfer learning, graph neural networks

Query2box: Reasoning over Knowledge Graphs in Vector Space using Box Embeddings

paper

Hongyu Ren, Weihua Hu, Jure Leskovec | contact: hyren@cs.stanford.edu

keywords: knowledge graph embeddings

Learning Self-Correctable Policies and Value Functions from Demonstrations with Negative Sampling

paper

Yuping Luo, Huazhe Xu, Tengyu Ma | contact: roosephu@gmail.com

keywords: imitation learning, model-based imitation learning, model-based rl, behavior cloning, covariate shift

Improved Sample Complexities for Deep Neural Networks and Robust Classification via an All-Layer Margin

paper

Colin Wei, Tengyu Ma | contact: colinwei@stanford.edu

keywords: deep learning theory, generalization bounds, adversarially robust generalization, data-dependent generalization bounds

Selection via Proxy: Efficient Data Selection for Deep Learning

paper | blog post | code

Cody Coleman, Christopher Yeh, Stephen Mussmann, Baharan Mirzasoleiman, Peter Bailis, Percy Liang, Jure Leskovec, Matei Zaharia | contact: cody@cs.stanford.edu

keywords: active learning, data selection, deep learning

We look forward to seeing you at ICLR!