If you want to create a world-class recommendation system, follow this recipe from a global team of experts: Blend a big helping of GPU-accelerated AI with a dash of old-fashioned cleverness.

The proof was in the pudding for a team from NVIDIA that won this year’s ACM RecSys Challenge. The competition is a highlight of an annual gathering of more than 500 experts who present the latest research in recommendation systems, the engines that deliver personalized suggestions for everything from restaurants to real estate.

At the Sept. 22-26 online event, the team will describe its dish, already available as open source code. They’re also sharing lessons learned with colleagues who build NVIDIA products like RAPIDS and Merlin, so customers can enjoy the fruits of their labor.

In an effort to bring more people to the table, NVIDIA will donate the contest’s $15,000 cash prize to Black in AI, a nonprofit dedicated to mentoring the next generation of Black specialists in machine learning.

GPU Server Doles Out Recommendations

This year’s contest, sponsored by Twitter, asked researchers to comb through a dataset of 146 million tweets to predict which ones a user would like, reply to or retweet. The NVIDIA team’s work led a field of 34 competitors, thanks in part to a system with four NVIDIA V100 Tensor Core GPUs that cranked through hundreds of thousands of options.

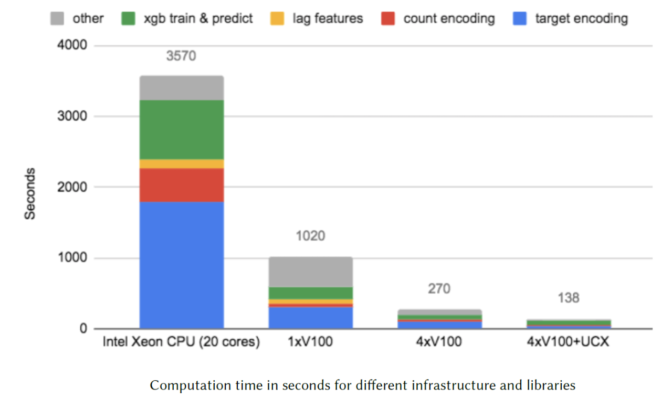

Their numbers were eye-popping. With GPU-accelerated software, the team engineered features in less than a minute that required nearly an hour on a CPU, a 500x speedup. The four-GPU system trained the team’s AI models 120x faster than a CPU. And GPUs gave the group’s end-to-end solution a 280x speedup compared to an initial implementation on a CPU.

“I’m still blown away when we pull off something like a 500x speedup in feature engineering,” said Even Oldridge, a Ph.D. in machine learning who in the past year quadrupled the size of his group that designs NVIDIA Merlin, a framework for recommendation systems.

Competition Sparks Ideas for Software Upgrades

The competition spawned work on data transformations that could enhance future versions of NVTabular, a Merlin library that eases engineering new features with the spreadsheet-like tables that are the basis of recommendation systems.

“We won in part because we could prototype fast,” said Benedikt Schifferer, one of three specialists in recommendation systems on the team that won the prize.

Schifferer also credits two existing tools. Dask, an open-source scheduling tool, let the team split memory-hungry jobs across multiple GPUs. And cuDF, part of NVIDIA’s RAPIDS framework for accelerated data science, let the group run the equivalent of the popular pandas library on GPUs.

“Searching for features in the data using Pandas on CPUs took hours for each new feature,” said Chris Deotte, one of a handful of data scientists on the team who have earned the title Kaggle grandmaster for their prowess in competitions.

“When we converted our code to RAPIDS, we could explore features in minutes. It was life changing, we could search hundreds of features and that eventually led to discoveries that won that competition,” said Deotte, one of only two grandmasters who hold that title in all four Kaggle categories.

More enhancements for recommendation systems are on the way. For example, customers can look forward to improvements in text handling on GPUs, a key data type for recommendation systems.

An Aha! Moment Fuels the Race

Deotte credits a colleague in Brazil, Gilberto Titericz, with an insight that drove the team forward.

“He tracked changes in Twitter followers over time which turned out to be a feature that really fueled our accuracy — it was incredibly effective,” Deotte said.

“I saw patterns changing over time, so I made several plots of them,” said Titericz, who ranked as the top Kaggle grandmaster worldwide for a couple of years.

“When I saw a really great result, I thought I made a mistake, but I took a chance, submitted it and to my surprise it scored high on the leaderboard, so my intuition was right,” he added.
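To make the idea of tracking follower changes over time concrete, here is a minimal sketch in plain Python. It is not the team’s code: the `(user_id, timestamp, follower_count)` row layout, the `follower_deltas` helper and the sample values are all hypothetical, chosen only to illustrate how a change-over-time feature can be derived from repeated observations of the same account.

```python
def follower_deltas(rows):
    """Compute the change in follower count since each user's previous observation.

    rows: iterable of (user_id, timestamp, follower_count) tuples.
          This schema is an assumption made for illustration only.
    Returns a list of (user_id, timestamp, delta) sorted by user and time;
    delta is None for a user's first observation.
    """
    last_count = {}  # most recent follower count seen for each user
    features = []
    # Sort by user, then by time, so each row is compared with its predecessor.
    for user_id, timestamp, count in sorted(rows, key=lambda r: (r[0], r[1])):
        previous = last_count.get(user_id)
        delta = None if previous is None else count - previous
        features.append((user_id, timestamp, delta))
        last_count[user_id] = count
    return features


# Hypothetical observations, deliberately out of order.
rows = [
    ("a", 2, 120),
    ("a", 1, 100),
    ("b", 1, 50),
    ("a", 3, 115),
    ("b", 2, 50),
]

features = follower_deltas(rows)
for row in features:
    print(row)
```

On real data, a feature like this would more likely be computed with a GPU dataframe library than with a Python loop. The loop simply spells out the logic.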

In the end, the team used a mix of complementary AI models designed by Titericz, Schifferer and a colleague in Japan, Kazuki Onodera, all based on XGBoost, an algorithm well suited for recommendation systems.
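The article does not say how the team combined its models, so the following is only a sketch of one common approach: a weighted average of each model’s predicted probabilities. The `blend` helper, the model names and the scores are hypothetical.

```python
def blend(predictions, weights=None):
    """Blend per-model probability lists with a weighted average.

    predictions: list of lists, one list of probabilities per model,
                 all of the same length (one entry per example).
    weights:     optional list of non-negative weights, one per model;
                 defaults to equal weighting.
    """
    if not predictions:
        raise ValueError("need at least one model's predictions")
    n_models = len(predictions)
    n_rows = len(predictions[0])
    if any(len(p) != n_rows for p in predictions):
        raise ValueError("all models must score the same number of examples")
    if weights is None:
        weights = [1.0] * n_models
    if len(weights) != n_models:
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize by the weight total so the result stays a probability.
    return [
        sum(w * p[i] for w, p in zip(weights, predictions)) / total
        for i in range(n_rows)
    ]


# Hypothetical engagement probabilities from three models for three tweets.
model_a = [0.9, 0.2, 0.4]
model_b = [0.7, 0.4, 0.6]
model_c = [0.8, 0.3, 0.2]

blended = blend([model_a, model_b, model_c])
print([round(p, 3) for p in blended])
```

Averaging tends to help most when the models make different kinds of mistakes, which is one reading of "complementary" here.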

Several members of the team are part of an elite group of Kaggle grandmasters that NVIDIA founder and CEO Jensen Huang dubbed KGMON, a playful takeoff on Pokemon. The team has won dozens of competitions in the last four years.

Recommenders Getting Traction in B2C

For many members, including team leader Jean-Francois Puget in southern France, it’s more than a 9-to-5 job.

“We spend nights and weekends in competitions, too, trying to be the best in the world,” said Puget, who earned his Ph.D. in machine learning two decades before deep learning took off commercially.

Now the technology is spreading fast.

This year’s ACM RecSys includes three dozen papers and talks from companies like Amazon and Netflix that helped establish the field with recommenders that help people find books and movies. Now, consumer companies of all stripes are getting into the act, including IKEA and Etsy, which are presenting at ACM RecSys this year.

“For the last three or four years, it’s more focused on delivering a personalized experience, really understanding what users want,” said Schifferer. It’s a cycle where “customers’ choices influence the training data, so some companies retrain their AI models every four hours, and some say they continuously train,” he added.

That’s why the team works hard to create frameworks like Merlin to make recommendation systems run easily and fast at scale on GPUs. Other members of NVIDIA’s winning team were Christof Henkel (Germany), Jiwei Liu and Bojan Tunguz (U.S.), Gabriel De Souza Pereira Moreira (Brazil) and Ahmet Erdem (Netherlands).

To get tips on how to design recommendation systems from the winning team, tune in to an online tutorial on Friday, Sept. 25.

The post The Great AI Bake-Off: Recommendation Systems on the Rise appeared first on The Official NVIDIA Blog.