What is the metaverse? The metaverse is a shared virtual 3D world, or worlds, that are interactive, immersive, and collaborative.

Just as the physical universe is a collection of worlds that are connected in space, the metaverse can be thought of as a bunch of worlds, too.

Massive online social games like battle royale juggernaut Fortnite, and user-created virtual worlds like Minecraft and Roblox, reflect some elements of the idea.

Video-conferencing tools, which link far-flung colleagues together amidst the global COVID pandemic, are another hint at what’s to come.

But the vision laid out by Neal Stephenson’s 1992 classic novel “Snow Crash” goes well beyond any single game or video-conferencing app.

The metaverse will become a platform that’s not tied to any one app or any single place — digital or real, explains Rev Lebaredian, vice president of simulation technology at NVIDIA.

And just as virtual places will be persistent, so will the objects and identities of those moving through them, allowing digital goods and identities to move from one virtual world to another, and even into our world, with augmented reality.

“Ultimately we’re talking about creating another reality, another world, that’s as rich as the real world,” Lebaredian says.

Those ideas are already being put to work with NVIDIA Omniverse, which, simply put, is a platform for connecting 3D worlds into a shared virtual universe.

Omniverse is in use across a growing number of industries for projects such as design collaboration and creating “digital twins,” simulations of real-world buildings and factories.

How NVIDIA Omniverse Creates, Connects Worlds Within the Metaverse

So how does Omniverse work? We can break it down into three big parts.

The first is Omniverse Nucleus. It’s a database engine that connects users and enables the interchange of 3D assets and scene descriptions.

Once connected, designers doing modeling, layout, shading, animation, lighting, special effects or rendering can collaborate to create a scene.

Omniverse Nucleus relies on USD, or Universal Scene Description, an interchange framework invented by Pixar in 2012.

Released as open-source software in 2016, USD provides a rich, common language for defining, packaging, assembling and editing 3D data for a growing array of industries and applications.

Lebaredian and others say USD is to the emerging metaverse what HyperText Markup Language, or HTML, was to the web: a common language that can be used, and advanced, to support the metaverse.

Multiple users can connect to Nucleus, transmitting and receiving changes to their world as USD snippets.
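To make the idea of exchanging changes rather than whole scenes concrete, here is a minimal sketch in Python. It is not the Nucleus API: `ToyHub`, `connect`, `publish` and the dictionary-based delta format are all hypothetical names invented for illustration. The sketch only assumes that each change can be expressed as a small set of attribute updates keyed by an object's path in the scene.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A "delta" maps a scene path to the attribute values that changed there.
Delta = Dict[str, Dict[str, object]]


@dataclass
class ToyHub:
    """Hypothetical stand-in for a shared scene server (not the Nucleus API)."""

    scene: Dict[str, Dict[str, object]] = field(default_factory=dict)
    subscribers: Dict[str, Callable[[Delta], None]] = field(default_factory=dict)

    def connect(self, user: str, on_change: Callable[[Delta], None]) -> None:
        # Register a callback that receives every change made by other users.
        self.subscribers[user] = on_change

    def publish(self, sender: str, delta: Delta) -> None:
        # Merge the change into the shared scene.
        for path, attrs in delta.items():
            self.scene.setdefault(path, {}).update(attrs)
        # Forward only the delta, not the whole scene, to everyone else.
        for user, callback in self.subscribers.items():
            if user != sender:
                callback(delta)


def demo() -> tuple:
    hub = ToyHub()
    received: List[Delta] = []
    hub.connect("modeler", lambda delta: None)
    hub.connect("lighter", received.append)
    hub.publish("modeler", {"/World/Ball": {"radius": 2.0}})
    hub.publish("modeler", {"/World/Ball": {"color": "red"}})
    return hub.scene, received
```

Calling `demo()` returns the merged scene along with the two small deltas the second user received. Sending only what changed keeps each message small, even when the shared scene itself is large.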

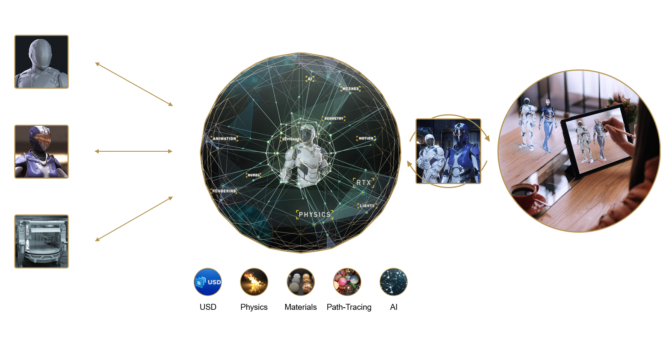

The second part of Omniverse is the composition, rendering and animation engine — the simulation of the virtual world.

Omniverse is a platform built from the ground up to be physically based. Thanks to NVIDIA RTX graphics technologies, it is fully path traced, simulating how each ray of light bounces around a virtual world in real-time.

Omniverse simulates physics with NVIDIA PhysX. It simulates materials with NVIDIA MDL, or material definition language.

And Omniverse is fully integrated with NVIDIA AI (which is key to advancing robotics; more on that later).

Omniverse is cloud-native, scales across multiple GPUs, runs on any RTX platform and streams remotely to any device.

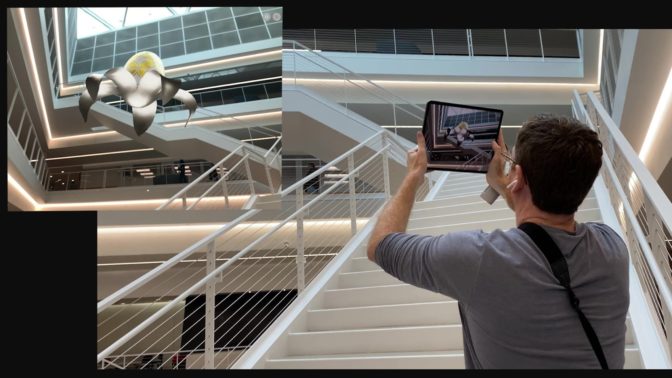

The third part is NVIDIA CloudXR, which includes client and server software for streaming extended reality content from OpenVR applications to Android and Windows devices, allowing users to portal into and out of Omniverse.

You can teleport into Omniverse with virtual reality, and AIs can teleport out of Omniverse with augmented reality.

Metaverses Made Real

NVIDIA released Omniverse to open beta in December, and NVIDIA Omniverse Enterprise in April. Professionals in a wide variety of industries quickly put it to work.

At Foster + Partners, the legendary design and architecture firm that designed Apple’s headquarters and London’s famed 30 St Mary Axe office tower — better known as “the Gherkin” — designers in 14 countries worldwide create buildings together in their Omniverse shared virtual space.

Visual effects pioneer Industrial Light & Magic is testing Omniverse to bring together internal and external tool pipelines from multiple studios. Omniverse lets them collaborate, render final shots in real-time and create massive virtual sets like holodecks.

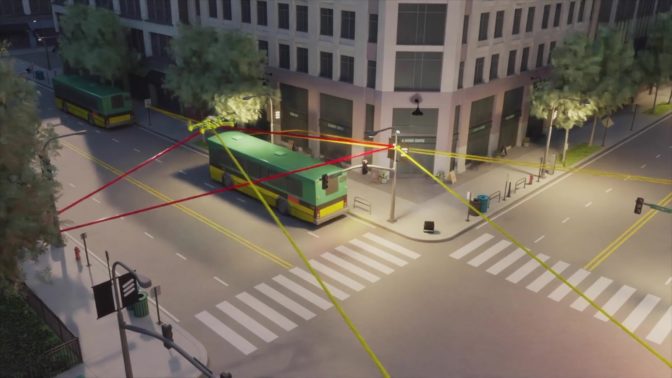

Multinational networking and telecommunications company Ericsson uses Omniverse to simulate 5G wave propagation in real-time, minimizing multi-path interference in dense city environments.

Infrastructure engineering software company Bentley Systems is using Omniverse to create a suite of applications on the platform. Bentley’s iTwin platform creates a 4D infrastructure digital twin to simulate an infrastructure asset’s construction, then monitor and optimize its performance throughout its lifecycle.

The Metaverse Can Help Humans and Robots Collaborate

These virtual worlds are ideal for training robots.

One of the essential features of NVIDIA Omniverse is that it obeys the laws of physics. Omniverse can simulate particles and fluids, materials and even machines, right down to their springs and cables.

Modeling the natural world in a virtual one is a fundamental capability for robotics.

It allows users to create a virtual world where robots — powered by AI brains that can learn from their real or digital environments — can train.

Once the minds of these robots are trained in the Omniverse, roboticists can load those brains onto an NVIDIA Jetson, and connect it to a real robot.

Those robots will come in all sizes and shapes — box movers, pick-and-place arms, forklifts, cars, trucks and even buildings.

In the future, a factory will be a robot, orchestrating many robots inside, building cars that are robots themselves.

How the Metaverse, and NVIDIA Omniverse, Enable Digital Twins

NVIDIA Omniverse provides a description for these shared worlds that people and robots can connect to — and collaborate in — to better work together.

It’s an idea that automaker BMW Group is already putting to work.

The automaker produces more than 2 million cars a year. In its most advanced factory, the company makes a car every minute. And each vehicle is customized differently.

BMW Group is using NVIDIA Omniverse to create a future factory, a perfect “digital twin.” It’s designed entirely digitally and simulated from beginning to end in Omniverse.

The Omniverse-enabled factory can connect to enterprise resource planning systems, simulating the factory’s throughput. It can simulate new plant layouts. It can even become the dashboard for factory employees, who can uplink into a robot to teleoperate it.

The AI and software that run the virtual factory are the same as what will run the physical one. In other words, the virtual and physical factories and their robots will operate in a loop. They’re twins.
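One way to picture "the same software runs both" is control logic written against an interface, with a simulated implementation and a physical one behind it. The sketch below is an illustration only, not BMW's or NVIDIA's code: `Conveyor`, `SimulatedConveyor`, `control_step` and the simple speed rule are hypothetical, and a physical implementation would wrap real hardware I/O behind the same two methods.

```python
from typing import List, Protocol


class Conveyor(Protocol):
    """Anything the controller can drive: a simulated line or a physical one."""

    def read_downstream_queue(self) -> int: ...

    def set_speed(self, speed: float) -> None: ...


class SimulatedConveyor:
    """Hypothetical virtual twin that records the commands it receives."""

    def __init__(self, queue_length: int) -> None:
        self.queue_length = queue_length
        self.speed_log: List[float] = []

    def read_downstream_queue(self) -> int:
        return self.queue_length

    def set_speed(self, speed: float) -> None:
        self.speed_log.append(speed)


def control_step(line: Conveyor, max_speed: float = 1.0) -> float:
    """Slow the line as parts pile up downstream; same logic for either twin."""
    queue = line.read_downstream_queue()
    speed = max_speed / (1 + queue)
    line.set_speed(speed)
    return speed
```

Because `control_step` only depends on the `Conveyor` interface, it can be exercised against `SimulatedConveyor` first and later pointed at a hardware-backed class without changing the control logic itself.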

No Longer Science Fiction

Omniverse is the “plumbing” on which metaverses can be built.

It’s an open platform with USD universal 3D interchange, connecting those metaverses into a large network of users. NVIDIA has 12 Omniverse Connectors to major design tools already, with another 40 on the way. The Omniverse Connector SDK sample code, for developers to write their own Connectors, is available for download now.

The most important design tool platforms are signed up. NVIDIA has already enlisted partners from the world’s largest industries — media and entertainment; gaming; architecture, engineering and construction; manufacturing; telecommunications; infrastructure; and automotive.

And the hardware needed to run it is here now.

Computer makers worldwide are building NVIDIA-Certified workstations, notebooks and servers, which all have been validated for running GPU-accelerated workloads with optimum performance, reliability and scale. And starting later this year, Omniverse Enterprise will be available for enterprise license via subscription from the NVIDIA Partner Network.

Thanks to NVIDIA Omniverse, the metaverse is no longer science fiction.

Back to the Future

So what’s next?

Humans have been exploiting how we perceive the world for thousands of years, NVIDIA’s Lebaredian points out. We’ve been hacking our senses to construct virtual realities through music, art and literature for millennia.

Next, add interactivity and the ability to collaborate, he says. Better screens, head-mounted displays like the Oculus Quest, and mixed-reality devices like Microsoft’s HoloLens are all steps toward fuller immersion.

All these pieces will evolve. But the most important one is here already: a high-fidelity simulation of our virtual world to feed the display. That’s NVIDIA Omniverse.

To steal a line from science-fiction master William Gibson: the future is already here; it’s just not very evenly distributed.

The metaverse is the means through which we can distribute those experiences more evenly. Brought to life by NVIDIA Omniverse, the metaverse promises to weave humans, AI and robots together in fantastic new worlds.

The post What Is the Metaverse? appeared first on The Official NVIDIA Blog.